Introduction to OpenAI Realtime Voice API

The OpenAI Realtime Voice API changes how developers build interactive, voice-driven applications. Built on models like GPT-4o, it enables low-latency, real-time conversational AI that narrows the gap between human and machine dialogue. As users increasingly expect natural, immediate voice interactions in virtual assistants, customer support, and accessibility tools, realtime voice APIs have become a key building block.

OpenAI’s latest multimodal models support not just text and images, but high-fidelity, bidirectional audio. With the OpenAI Realtime Voice API, developers can build applications that interpret, respond, and even perform actions based on live speech. In this guide, we’ll explore the architecture, capabilities, setup, implementation, and best practices for using the OpenAI Realtime Voice API to power next-generation voice experiences.

What is the OpenAI Realtime Voice API?

The OpenAI Realtime Voice API is a WebSocket-based interface for streaming audio to and from advanced GPT-4o family models. Unlike traditional speech-to-text (STT) and text-to-speech (TTS) solutions that require serial processing, this API offers end-to-end, native speech-to-speech interaction. Key features include:

- Native speech-to-speech processing using OpenAI’s latest models

- Persistent, low-latency connections via WebSockets

- Multimodal input/output: audio, text, function calls, and more

- Steerable, emotionally nuanced voices

- Support for function calling and tool use

Supported Models:

- gpt-4o-realtime-preview and gpt-4o-mini-realtime-preview (GPT-4o family)

- Newer realtime models as OpenAI releases them

How is it different from STT+TTS Pipelines?

Traditional voice solutions transcribe audio to text, process the text, then synthesize a reply. Each hand-off adds latency, and transcription discards cues such as tone and emphasis. The OpenAI Realtime Voice API processes audio natively in a single model, enabling faster, more natural dialogue.

WebSocket Streaming:

The API establishes a persistent WebSocket connection for continuous bidirectional audio and data flow, minimizing latency and maximizing conversational fluidity.
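Over that connection, both sides exchange JSON events identified by a `type` field, and audio travels base64-encoded inside those events rather than as raw binary frames. The sketch below builds the client events for one spoken turn. It assumes the beta event names `input_audio_buffer.append`, `input_audio_buffer.commit`, and `response.create`; the helper function names are hypothetical.

```python
import base64
import json


def audio_append_event(pcm16_bytes: bytes) -> str:
    """Wrap raw PCM16 audio in an input_audio_buffer.append client event."""
    return json.dumps({
        "type": "input_audio_buffer.append",
        # Audio must be base64-encoded text inside the JSON payload
        "audio": base64.b64encode(pcm16_bytes).decode("ascii"),
    })


def end_of_turn_events() -> list[str]:
    """Commit the buffered audio as a user turn, then ask the model to reply.

    Only needed when server-side VAD is disabled; with server VAD the API
    commits the buffer itself when it detects the end of speech.
    """
    return [
        json.dumps({"type": "input_audio_buffer.commit"}),
        json.dumps({"type": "response.create"}),
    ]


# One turn: append audio (usually in many chunks), then commit and request a response
messages = [audio_append_event(b"\x00\x00" * 240)] + end_of_turn_events()
for m in messages:
    print(json.loads(m)["type"])
```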

Core Capabilities and Benefits of OpenAI Realtime Voice API

The OpenAI Realtime Voice API unlocks a new paradigm for conversational AI:

- Native Speech-to-Speech: Speak to the API and receive real-time, synthesized voice replies—no manual STT or TTS required.

- Low Latency: WebSocket streaming and model optimizations target sub-second response times, which natural conversation requires; actual latency depends on network conditions and server proximity.

- Multimodal Support: Handle text, audio, function calls, and more in a single session, enabling rich, context-aware interactions.

- Steerable, Expressive Voices: Adjust tone, emotion, and style dynamically for more engaging, human-like responses.

- Practical Applications: Use cases include AI-powered customer support, virtual assistants, voice bots, accessibility solutions, and language learning tools.
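Voice, style, modalities, and turn handling are configured per session by sending a `session.update` event. This sketch assumes the beta session fields (`modalities`, `instructions`, `voice`, the audio format fields, and `turn_detection`); the VAD numbers are illustrative rather than recommended values, and the available voice names should be checked against the current documentation.

```python
import json


def session_update(instructions: str, voice: str = "alloy") -> str:
    """Build a session.update event configuring voice, style, and turn detection."""
    return json.dumps({
        "type": "session.update",
        "session": {
            "modalities": ["audio", "text"],   # speak the reply and also send its text
            "instructions": instructions,       # steers tone, emotion, and style
            "voice": voice,                     # one of the built-in voices
            "input_audio_format": "pcm16",
            "output_audio_format": "pcm16",
            "turn_detection": {
                "type": "server_vad",           # let the API detect end of speech
                "threshold": 0.5,               # illustrative values, tune per app
                "prefix_padding_ms": 300,
                "silence_duration_ms": 500,
            },
        },
    })


event = session_update("Speak warmly and keep answers under two sentences.", voice="coral")
print(json.loads(event)["session"]["voice"])  # coral
```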

Setting Up the OpenAI Realtime Voice API

Prerequisites

To access the OpenAI Realtime Voice API, ensure you have:

- An active OpenAI (or Azure OpenAI) paid account

- Sufficient API quota and billing setup

- Regional availability (check the OpenAI documentation for current supported regions)

- API keys or Azure authentication credentials

Account Setup and Authentication

- Sign up or log in to your OpenAI or Azure account.

- Generate an API key from the OpenAI dashboard or Azure AI portal.

- For Azure, deploy a GPT-4o realtime model via Azure AI Foundry or the resource deployment interface.

Deployment and Model Selection

- On OpenAI, select gpt-4o-realtime-preview or gpt-4o-mini-realtime-preview by name when connecting; on Azure, deploy one of them in Azure AI Foundry.

- Confirm your region supports realtime audio streaming.

- Configure endpoint URLs and authentication tokens as needed.
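The endpoint and authentication differ between the two providers. A minimal sketch of building the WebSocket URL and headers for each, assuming OpenAI's beta endpoint `wss://api.openai.com/v1/realtime` with the `OpenAI-Beta: realtime=v1` header, and Azure's `/openai/realtime` path with an `api-key` header. The Azure `api_version` default and the example resource and deployment names are assumptions; use the values your own resource reports.

```python
from urllib.parse import urlencode


def openai_realtime_endpoint(api_key: str, model: str = "gpt-4o-realtime-preview"):
    """Return (url, headers) for OpenAI's hosted Realtime API (beta interface)."""
    url = "wss://api.openai.com/v1/realtime?" + urlencode({"model": model})
    headers = {
        "Authorization": f"Bearer {api_key}",
        "OpenAI-Beta": "realtime=v1",  # opt-in header for the beta interface
    }
    return url, headers


def azure_realtime_endpoint(resource: str, deployment: str, api_key: str,
                            api_version: str = "2024-10-01-preview"):
    """Return (url, headers) for an Azure OpenAI realtime deployment.

    The api_version default is an assumption; check what your resource supports.
    """
    query = urlencode({"api-version": api_version, "deployment": deployment})
    url = f"wss://{resource}.openai.azure.com/openai/realtime?{query}"
    headers = {"api-key": api_key}  # key-based auth; Entra ID tokens are an alternative
    return url, headers


url, headers = openai_realtime_endpoint("sk-...")
print(url)  # wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview
```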

Quickstart Example (Node.js + WebSocket)

Here’s a basic Node.js example to connect and stream audio to the OpenAI Realtime Voice API:

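```javascript
const WebSocket = require('ws');
const fs = require('fs');

// The model is selected with a query parameter on the realtime endpoint
const url = 'wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview';
const ws = new WebSocket(url, {
  headers: {
    'Authorization': `Bearer ${process.env.OPENAI_API_KEY}`,
    'OpenAI-Beta': 'realtime=v1' // opts in to the beta realtime interface
  }
});

ws.on('open', () => {
  // Stream raw PCM16 audio (24 kHz, mono) from a file; a microphone works the same way.
  // Audio travels base64-encoded inside JSON events, not as raw binary frames.
  const stream = fs.createReadStream('audio_sample.pcm');
  stream.on('data', chunk => {
    ws.send(JSON.stringify({
      type: 'input_audio_buffer.append',
      audio: chunk.toString('base64')
    }));
  });
  // With the default server-side VAD, the API commits the audio and starts
  // a response once it detects the end of speech.
});

ws.on('message', data => {
  // Every server message is a JSON event with a "type" field
  const event = JSON.parse(data.toString());
  console.log('Received:', event.type);
});

ws.on('close', () => console.log('Connection closed'));
```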
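The quickstart forwards file data in whatever chunk sizes the read stream happens to produce. Sending fixed-duration chunks is easier to reason about and to pace like a live microphone. This Python sketch, with hypothetical helper names, computes chunk sizes from the pcm16 format, assuming 24 kHz mono 16-bit audio and a 100 ms chunk (a common but arbitrary choice).

```python
SAMPLE_RATE = 24_000   # pcm16 in the Realtime API is 24 kHz mono
BYTES_PER_SAMPLE = 2   # 16-bit little-endian samples


def chunk_bytes(chunk_ms: int) -> int:
    """Number of bytes that hold chunk_ms milliseconds of mono PCM16 audio."""
    return SAMPLE_RATE * BYTES_PER_SAMPLE * chunk_ms // 1000


def pcm16_chunks(data: bytes, chunk_ms: int = 100):
    """Yield fixed-duration slices of a PCM16 buffer (the last one may be shorter)."""
    size = chunk_bytes(chunk_ms)
    for start in range(0, len(data), size):
        yield data[start:start + size]


one_second = b"\x00\x00" * SAMPLE_RATE
print(chunk_bytes(100))                     # 4800
print(len(list(pcm16_chunks(one_second))))  # 10
```

Each slice would then be wrapped in an `input_audio_buffer.append` event; when replaying a file, sleeping for `chunk_ms` between sends approximates real-time capture.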

Implementing Real-Time Voice Interactions with OpenAI Realtime Voice API

Streaming Audio to the API

To enable real-time conversations, you need to capture microphone input and transmit it to the API as a live stream.

- Microphone Input: Use Web APIs (`navigator.mediaDevices.getUserMedia()`) in browsers or libraries like `pyaudio` in Python.

- Audio Encoding: The API accepts PCM16 (16-bit, 24 kHz, mono, little-endian) and G.711 (μ-law or A-law, 8 kHz) audio, selected per session through the input and output audio format settings. Convert raw audio as needed before streaming.
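If your capture pipeline produces floating-point samples (as the Web Audio API does) or runs at a different sample rate, convert before sending. A minimal sketch, assuming mono input and the 24 kHz pcm16 target; the function names are hypothetical, and the linear resampler is a deliberately simple stand-in for a proper resampling library. It applies no anti-aliasing filter, which matters when downsampling.

```python
import struct


def float_to_pcm16(samples: list[float]) -> bytes:
    """Convert floats in [-1.0, 1.0] to little-endian 16-bit PCM, clipping out-of-range values."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)
    ints = [int(round(s * 32767)) for s in clipped]
    return struct.pack(f"<{len(ints)}h", *ints)


def resample_linear(samples: list[float], src_rate: int, dst_rate: int) -> list[float]:
    """Naive linear-interpolation resampler (no anti-aliasing filter)."""
    if src_rate == dst_rate or not samples:
        return list(samples)
    n_out = len(samples) * dst_rate // src_rate
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate          # position in the source signal
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out


# 16 kHz capture converted to the 24 kHz pcm16 the API expects
pcm = float_to_pcm16(resample_linear([0.0, 0.5, 1.0, 0.5], 16_000, 24_000))
print(len(pcm))  # 12 (6 samples of 2 bytes)
```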

Handling Responses

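The API streams its reply back as a series of JSON events that may carry audio, text (such as a transcript of the spoken reply), or structured data like function calls. Audio arrives in base64-encoded chunks; playback involves decoding each chunk and writing it to the speakers or a browser audio context.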
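Because a reply is a sequence of typed events rather than one payload, client code is mostly a dispatcher on the `type` field. A sketch assuming the beta event names `response.audio.delta`, `response.audio_transcript.delta`, `response.done`, and `error` (newer API versions may rename some of these); the `state` dictionary is a hypothetical stand-in for your application's playback and UI state.

```python
import base64
import json


def handle_server_event(raw: str, state: dict) -> None:
    """Route one server event by its type, accumulating reply audio and transcript."""
    event = json.loads(raw)
    kind = event["type"]
    if kind == "response.audio.delta":
        # Base64-encoded PCM16 chunk; a real app would write it to the speaker here
        state["audio"] += base64.b64decode(event["delta"])
    elif kind == "response.audio_transcript.delta":
        state["transcript"] += event["delta"]
    elif kind == "response.done":
        state["done"] = True
    elif kind == "error":
        state["errors"].append(event["error"].get("message", ""))
    # Other event types (session.created, rate_limits.updated, ...) are ignored here


state = {"audio": b"", "transcript": "", "done": False, "errors": []}
for raw in [
    json.dumps({"type": "response.audio.delta", "delta": base64.b64encode(b"\x01\x00").decode()}),
    json.dumps({"type": "response.audio_transcript.delta", "delta": "Hello"}),
    json.dumps({"type": "response.done"}),
]:
    handle_server_event(raw, state)
print(state["transcript"], len(state["audio"]), state["done"])  # Hello 2 True
```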

Streaming Audio Example (Node.js)

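```javascript
const WebSocket = require('ws');
const Speaker = require('speaker');

// Playback format must match the session's output audio format (pcm16: 24 kHz, mono, 16-bit)
const speaker = new Speaker({ channels: 1, bitDepth: 16, sampleRate: 24000 });
const ws = new WebSocket('wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview', { /* headers as in the quickstart */ });

ws.on('message', data => {
  const event = JSON.parse(data.toString());
  // Reply audio arrives as base64-encoded PCM16 in response.audio.delta events
  if (event.type === 'response.audio.delta') {
    speaker.write(Buffer.from(event.delta, 'base64'));
  }
});
```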

Streaming Audio Example (Python)

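```python
import base64
import json
import os

import pyaudio
import websocket  # the websocket-client package

URL = 'wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview'
HEADERS = [
    'Authorization: Bearer ' + os.environ['OPENAI_API_KEY'],
    'OpenAI-Beta: realtime=v1',
]

# Set up a PyAudio playback stream matching pcm16 output (24 kHz, mono, 16-bit)
p = pyaudio.PyAudio()
stream = p.open(format=pyaudio.paInt16, channels=1, rate=24000, output=True)

def on_message(ws, message):
    event = json.loads(message)
    # Play reply audio; other event types are ignored in this example
    if event['type'] == 'response.audio.delta':
        stream.write(base64.b64decode(event['delta']))

ws = websocket.WebSocketApp(URL, on_message=on_message, header=HEADERS)
ws.run_forever()
```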

Integrating with WebRTC and Telephony

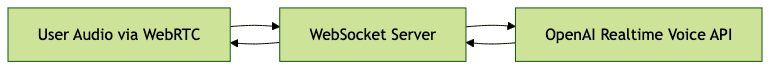

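WebRTC: Besides WebSockets, the Realtime API accepts WebRTC connections, which suit browser clients: the browser captures microphone audio and exchanges it with the API over a peer connection, with WebRTC handling low-latency, encrypted audio transport. Because this code runs on the client, it is usually authenticated with a short-lived ephemeral key issued by your server rather than your standard API key.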
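With WebRTC, the browser should never hold your standard API key, so a common pattern is for your server to mint a short-lived key and hand it to the browser. The sketch only builds that server-side request without sending it. It assumes the beta `POST https://api.openai.com/v1/realtime/sessions` endpoint, whose response carries the ephemeral key in `client_secret.value`; this flow has changed between API versions, so confirm it against the current documentation. The function name is hypothetical.

```python
import json
import urllib.request


def ephemeral_session_request(api_key: str, model: str = "gpt-4o-realtime-preview",
                              voice: str = "verse") -> urllib.request.Request:
    """Build (but do not send) the server-side request that mints an ephemeral key."""
    body = json.dumps({"model": model, "voice": voice}).encode("utf-8")
    return urllib.request.Request(
        "https://api.openai.com/v1/realtime/sessions",
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


req = ephemeral_session_request("sk-...")
# To send it: with urllib.request.urlopen(req) as resp: key = json.load(resp)["client_secret"]["value"]
print(req.get_method(), req.full_url)
```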

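Twilio Integration Example: Many developers combine Twilio Media Streams with the OpenAI Realtime Voice API for telephony and IVR use cases. Twilio streams call audio to a WebSocket server you run, which relays it to and from the Realtime API. Here’s a simplified Python FastAPI endpoint that returns the TwiML instructing Twilio to open that stream: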

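```python
from fastapi import FastAPI
from fastapi.responses import Response
from twilio.twiml.voice_response import VoiceResponse, Connect

app = FastAPI()

@app.post('/twilio/voice')
def twilio_voice():
    response = VoiceResponse()
    # <Connect><Stream> opens a bidirectional stream, so replies can be played to the caller
    # (<Start><Stream> only forks audio one way)
    connect = Connect()
    connect.stream(url='wss://your-server.example.com/openai-audio-stream')
    response.append(connect)
    # Twilio expects TwiML (XML), not JSON
    return Response(content=str(response), media_type='application/xml')
```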
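The TwiML only points Twilio at your WebSocket server; that server still has to relay audio in both directions. Twilio Media Streams carry 8 kHz μ-law audio as base64 text in `media.payload`, so if the Realtime session sets both `input_audio_format` and `output_audio_format` to `g711_ulaw`, payloads can be forwarded without transcoding. A sketch of the two translation functions (hypothetical names, beta Realtime event names assumed), with the socket plumbing omitted.

```python
import json


def twilio_to_realtime(twilio_msg: str):
    """Map a Twilio Media Streams message to a Realtime client event, or None."""
    msg = json.loads(twilio_msg)
    if msg.get("event") != "media":
        return None  # 'start', 'stop', 'mark', etc. are handled elsewhere
    # Both sides use base64 text, so the μ-law payload passes straight through
    return json.dumps({"type": "input_audio_buffer.append", "audio": msg["media"]["payload"]})


def realtime_to_twilio(realtime_msg: str, stream_sid: str):
    """Map a Realtime audio delta to a Twilio outbound media message, or None."""
    event = json.loads(realtime_msg)
    if event.get("type") != "response.audio.delta":
        return None
    # Twilio needs the streamSid from the 'start' message to route audio to the call
    return json.dumps({"event": "media", "streamSid": stream_sid,
                       "media": {"payload": event["delta"]}})
```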

Diagram: WebRTC + OpenAI Realtime Voice API Data Flow

Advanced Features: Function Calling and Tooling in OpenAI Realtime Voice API

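One of the most powerful aspects of the OpenAI Realtime Voice API is function calling driven by voice input. Based on what the user says, the model can request a call to a function you have declared; your application executes it and returns the result so the model can answer aloud. For instance, a user can ask about the weather, the model requests a `get_weather` call, your code queries a weather service, and the model responds with synthesized speech.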

Example: Function Calling

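```javascript
// Tools are declared for the session with a session.update event (assumes `ws` is an
// open Realtime connection, as in the quickstart); the model fills in the arguments.
ws.send(JSON.stringify({
  type: 'session.update',
  session: {
    tools: [{
      type: 'function',
      name: 'get_weather',
      description: 'Get the current weather for a location',
      parameters: {
        type: 'object',
        properties: { location: { type: 'string' } },
        required: ['location']
      }
    }],
    tool_choice: 'auto'
  }
}));
```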

This extensibility allows you to build voice agents that can perform dynamic, context-aware tasks beyond simple Q&A, integrating with databases, APIs, or custom business logic.
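Declaring a tool is only half of the loop: when the model decides to call it, your code must run the function and send the result back. A sketch assuming the beta flow, in which the server emits `response.function_call_arguments.done` (with `name`, `call_id`, and a JSON-string `arguments`) and the client replies with a `function_call_output` conversation item followed by `response.create`. The `get_weather` implementation and the `TOOLS` registry are hypothetical stubs.

```python
import json


def get_weather(location: str) -> dict:
    """Hypothetical local tool; a real one would call a weather service."""
    return {"location": location, "forecast": "sunny", "temp_c": 18}


TOOLS = {"get_weather": get_weather}


def handle_function_call(event: dict) -> list[str]:
    """Run the requested tool and build the client events that return its result."""
    args = json.loads(event["arguments"])  # arguments arrive as a JSON string
    result = TOOLS[event["name"]](**args)
    return [
        json.dumps({
            "type": "conversation.item.create",
            "item": {
                "type": "function_call_output",
                "call_id": event["call_id"],  # ties the output to the model's request
                "output": json.dumps(result),
            },
        }),
        json.dumps({"type": "response.create"}),  # ask the model to voice the answer
    ]


incoming = {"type": "response.function_call_arguments.done", "name": "get_weather",
            "call_id": "call_123", "arguments": '{"location": "San Francisco"}'}
for msg in handle_function_call(incoming):
    print(json.loads(msg)["type"])
```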

Limitations, Challenges, and Best Practices for OpenAI Realtime Voice API

While the OpenAI Realtime Voice API is groundbreaking, it comes with practical considerations:

- Latency & Voice Quality: Although latency is low, it depends on network conditions and regional server proximity. Voice quality is generally high but may vary with encoding and model version.

- Regional Differences: Not all regions or cloud providers support the same models or features. Always check the latest region/model matrix.

- Cost & Billing: Pricing for the realtime API can be higher than standard text APIs, especially for long calls or heavy usage. Monitor usage and set appropriate quotas.

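- Audio Input Limitations: By default the API uses server-side VAD (Voice Activity Detection) to decide when the user has started and stopped speaking. Background noise can trigger false turns and long pauses can end a turn early; the detection threshold and silence duration are configurable per session.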

- Best Practices:

  - Use VAD client-side to trim silences

  - Prefer G.711 encodings where bandwidth is tight (for example, telephony), accepting lower fidelity than PCM16

  - Handle authentication errors and timeouts gracefully

  - Monitor and log latency for quality assurance

  - Test with diverse voices and environments
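Client-side trimming can be as simple as an energy gate. The sketch below is a naive RMS threshold, not the model-based VAD the API runs server-side; the 20 ms frame length, the 0.01 threshold, and the function names are assumptions to tune against your own microphones and noise levels.

```python
import math
import struct


def rms(frame: bytes) -> float:
    """Root-mean-square level of a PCM16 little-endian frame, scaled to [0, 1]."""
    n = len(frame) // 2
    if n == 0:
        return 0.0
    samples = struct.unpack(f"<{n}h", frame[: n * 2])
    return math.sqrt(sum(s * s for s in samples) / n) / 32768


def trim_silence(pcm: bytes, frame_ms: int = 20, threshold: float = 0.01,
                 sample_rate: int = 24_000) -> bytes:
    """Drop leading and trailing frames whose RMS level is below threshold."""
    size = sample_rate * 2 * frame_ms // 1000
    frames = [pcm[i:i + size] for i in range(0, len(pcm), size)]
    voiced = [i for i, f in enumerate(frames) if rms(f) >= threshold]
    if not voiced:
        return b""
    return b"".join(frames[voiced[0]: voiced[-1] + 1])


frame = struct.pack("<480h", *([8000] * 480))  # 20 ms of a constant-level signal
print(len(trim_silence(b"\x00" * 960 + frame + b"\x00" * 960)))  # 960
```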

Use Cases and Real-World Examples for OpenAI Realtime Voice API

- Customer Support Bots: Automate inbound call handling and provide instant, natural responses.

- Language Learning Assistants: Enable interactive speaking exercises and pronunciation feedback.

- Accessibility Tools: Assist visually impaired users with voice navigation and information retrieval.

- Real-Time Translation: Build voice-to-voice translation apps for global communication.

Conclusion and Future Directions

The OpenAI Realtime Voice API represents a leap forward for conversational AI, enabling real-time, multimodal, and expressive human-computer interaction. While there are still limits, such as latency, voice range, and cost, the opportunities for innovation in voice-first applications are considerable. As OpenAI continues to improve voice quality, add emotional nuance, and refine pricing, it is a good time to experiment and build with the OpenAI Realtime Voice API.


FAQ