Introduction to OpenAI API Stream

In the rapidly evolving landscape of artificial intelligence, delivering real-time experiences has become a necessity for many applications, from chatbots and virtual assistants to live translation engines and educational tools. The OpenAI API's streaming mode is a game-changer for developers building dynamic, low-latency AI interactions. A traditional API call returns its result only after the whole response has been generated. A streamed response instead delivers the output incrementally, typically a token or a few tokens at a time, so your application can render text while the model is still producing it.

Whether you’re working with the OpenAI Python SDK, integrating with FastAPI for robust backend services, or implementing streaming endpoints in Node.js, understanding how to leverage the OpenAI API stream is essential. This guide will walk you through the core concepts, implementation details, and developer best practices for building high-performance, scalable, and reliable AI-powered applications using OpenAI’s streaming architecture.

Understanding Streaming in OpenAI API

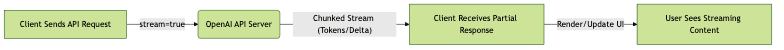

Streaming is the process of sending data incrementally from the server to the client as soon as it’s available, rather than waiting for the entire response to be generated. In the context of the OpenAI API, enabling streaming allows your application to display generated text as it’s produced, significantly improving user experience and perceived speed.

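When you set the stream parameter to true (stream=True in Python, "stream": true in the JSON request body), the API returns the response as a sequence of chunks sent as server-sent events, each containing a small portion of the model's output (often a single token). In the Chat Completions API, each chunk holds a choices list, and every choice carries a delta object with the newly generated content and a finish_reason field. finish_reason is null until the final chunk for that choice, where it states why generation stopped (for example "stop" or "length"). Together these fields let you assemble the output and detect completion.

Benefits over standard responses: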

- Faster perceived response times

- Enhanced user engagement through real-time feedback

- Lower time-to-first-token for interactive applications (the first words appear sooner, although total generation time stays about the same)
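To make the perceived-speed benefit concrete, here is a minimal sketch that times a simulated stream. The fake_token_stream generator is hypothetical and stands in for a real streamed response; it only sleeps between tokens to mimic generation. A streaming UI can render as soon as the first token arrives, while a non-streaming caller waits until the end.

```python
import time
from typing import Iterator, List, Tuple


def fake_token_stream(tokens: List[str], delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a streamed response: one token per delay."""
    for token in tokens:
        time.sleep(delay_s)  # simulate the model producing the next token
        yield token


def measure_stream(stream: Iterator[str]) -> Tuple[float, float, str]:
    """Return (time to first token, total time, full text) for an iterator of strings."""
    start = time.perf_counter()
    first_token_at = None
    parts = []
    for token in stream:
        if first_token_at is None:
            first_token_at = time.perf_counter() - start  # when a streaming UI could render
        parts.append(token)
    total = time.perf_counter() - start  # when a non-streaming caller would see the reply
    if first_token_at is None:  # empty stream: nothing arrived before the end
        first_token_at = total
    return first_token_at, total, "".join(parts)


ttft, total, text = measure_stream(fake_token_stream(["Hello", ",", " world", "!"]))
print(f"first token after {ttft:.3f}s, full reply after {total:.3f}s: {text!r}")
```

With a real stream you would pass an iterator over the delta.content strings instead of the fake generator; the gap between the two timings grows with the length of the reply.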

[Figure: Client-server streaming flow]

Setting Up for OpenAI API Stream

Before you can harness the power of the OpenAI API stream, ensure you have the following in place:

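- API Key: Obtainable from your OpenAI dashboard. The official SDKs read it from the OPENAI_API_KEY environment variable by default, which keeps it out of your source code.

- OpenAI SDK: Install the Python SDK via pip (pip install openai) or the Node.js SDK via npm (npm install openai).

- Compatible Environment: Python 3.7+, Node.js 18+, or equivalent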

Supported Models and Endpoints:

Streaming is available on most chat/completion endpoints, including GPT-4, GPT-4o, and the o-series models. Always verify model compatibility in the OpenAI API documentation.

Key Parameters:

- stream: Set to true to enable streaming

- model: Specify a compatible model (e.g., gpt-4o)

- messages: The conversation to send (required for chat completions)

- Other parameters as needed (temperature, etc.)
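Under the hood, the SDKs send these parameters as a JSON body to the Chat Completions endpoint (POST https://api.openai.com/v1/chat/completions). The sketch below builds such a body with the standard library only; build_chat_request is a hypothetical helper for illustration, and it does not send anything.

```python
import json
from typing import Any


def build_chat_request(prompt: str, model: str = "gpt-4o", **extra: Any) -> str:
    """Hypothetical helper: serialize a streaming Chat Completions request body."""
    body = {
        "model": model,                                     # a streaming-capable model
        "messages": [{"role": "user", "content": prompt}],  # the conversation (required)
        "stream": True,                                     # ask for incremental chunks
        **extra,                                            # optional, e.g. temperature
    }
    return json.dumps(body)


payload = build_chat_request("Say hello!", temperature=0.2)
print(payload)
```

Note that Python's True is serialized as the JSON literal true, which is the form the API documentation uses.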

Basic Python Setup:

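```python
from openai import OpenAI

# The client reads the key from the OPENAI_API_KEY environment variable by default.
# You can also pass it explicitly with OpenAI(api_key="sk-..."), but avoid hard-coding keys.
client = OpenAI()

stream = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Say hello!"}],
    stream=True,  # return an iterator of chunks instead of one finished response
)
```

The snippet above creates a client and sends a chat completion request with streaming enabled. It targets version 1.x and later of the openai Python package; the older openai.ChatCompletion.create interface was removed in v1.0. You’ll learn how to handle the streamed responses in the next section.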

Handling Streaming Responses: Python Implementation

Let’s dive into a step-by-step implementation using the OpenAI Python SDK to process streaming responses efficiently.

1. Submitting a Streamed Request

When you set stream=True, the SDK returns an iterable stream object instead of a finished response. Each iteration yields one chunk of the response.

2. Understanding Chunk Structure

Each streamed chunk contains a choices list. Each choice has a delta (the latest content addition) and a finish_reason, which stays empty (null) on intermediate chunks and, on the final chunk, signals normal completion or why generation was cut short.

3. Iterating Over Streamed Chunks

You can process each part of the response as soon as it arrives:

```python
from openai import OpenAI

client = OpenAI()  # uses the OPENAI_API_KEY environment variable

stream = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Explain streaming in AI."}],
    stream=True,
)

collected_content = ""
for chunk in stream:
    # Some chunks (e.g. an optional final usage chunk) arrive with an empty choices list.
    if not chunk.choices:
        continue
    choice = chunk.choices[0]
    # delta.content is None on chunks that carry no text (role-only or final chunks).
    if choice.delta.content:
        print(choice.delta.content, end="", flush=True)
        collected_content += choice.delta.content
    # finish_reason stays None until the last chunk for this choice.
    if choice.finish_reason:
        print(f"\n[Stream finished: {choice.finish_reason}]")
```
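The loop above reads only choices[0], which is enough for the default single completion. If you request several completions at once with the n parameter, chunks for different choices can arrive interleaved and are told apart by each choice's index. Below is a minimal sketch that reassembles them, using plain dictionaries shaped like the JSON chunks the API sends; the field names follow the chat.completion.chunk format, but the sample values are made up for illustration.

```python
from collections import defaultdict


def reassemble_choices(chunks):
    """Concatenate streamed deltas per choice index and record each finish_reason."""
    texts = defaultdict(str)
    finish_reasons = {}
    for chunk in chunks:
        for choice in chunk.get("choices", []):
            idx = choice["index"]
            content = (choice.get("delta") or {}).get("content")
            if content:  # missing or None on role-only and final chunks
                texts[idx] += content
            if choice.get("finish_reason"):  # None until the choice's last chunk
                finish_reasons[idx] = choice["finish_reason"]
    return dict(texts), finish_reasons


# Made-up chunks for n=2, interleaved the way a stream might deliver them.
sample_chunks = [
    {"choices": [{"index": 0, "delta": {"role": "assistant", "content": ""}, "finish_reason": None}]},
    {"choices": [{"index": 1, "delta": {"role": "assistant", "content": ""}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {"content": "Hi"}, "finish_reason": None}]},
    {"choices": [{"index": 1, "delta": {"content": "Hello"}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {"content": " there"}, "finish_reason": None}]},
    {"choices": [{"index": 1, "delta": {}, "finish_reason": "length"}]},
    {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]},
]

texts, reasons = reassemble_choices(sample_chunks)
print(texts)
print(reasons)
```

With the Python SDK, chunks are objects rather than dictionaries, so you would read choice.index and choice.delta.content as attributes instead of keys.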

4. Error Handling and Moderation

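Always wrap streaming logic in try-except blocks to gracefully handle API errors and network issues. Exceptions can be raised when the request is created or partway through iteration, so the loop over the chunks belongs inside the try block too. Safety stops behave differently: when the API cuts a response short for policy reasons, this is typically reported through finish_reason ("content_filter") rather than as an exception.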

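```python
import openai

try:
    ...  # streaming logic here: create the stream and iterate over it
except openai.OpenAIError as e:  # base class for the SDK's errors in openai>=1.0
    print(f"Error: {e}")
```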
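A retry policy needs one extra decision for streams: if the connection drops after some text has already been shown, retrying replays the answer from the start, and the new answer may differ. One simple policy, sketched below, is to retry only while nothing has been emitted yet. open_stream is a hypothetical callable that starts a fresh stream, and ConnectionError stands in for whatever transient errors your client raises (with the openai SDK you might pass something like openai.APIConnectionError instead).

```python
import time
from typing import Callable, Iterable, Iterator, Tuple, Type


def stream_with_retry(
    open_stream: Callable[[], Iterable[str]],
    retryable: Tuple[Type[BaseException], ...] = (ConnectionError,),
    max_attempts: int = 3,
    base_delay_s: float = 0.5,
) -> Iterator[str]:
    """Yield text pieces, retrying only failures that happen before any text is yielded.

    open_stream is a hypothetical factory that starts a fresh streamed request and
    returns an iterable of text pieces (for example, the delta.content strings).
    """
    for attempt in range(1, max_attempts + 1):
        emitted = False
        try:
            for piece in open_stream():
                emitted = True
                yield piece
            return  # the stream finished normally
        except retryable:
            # Once text has been shown, a retry would repeat or contradict it: re-raise.
            if emitted or attempt == max_attempts:
                raise
            time.sleep(base_delay_s * 2 ** (attempt - 1))  # exponential backoff


calls = {"count": 0}


def flaky_stream() -> Iterator[str]:
    """Hypothetical stream that fails before its first token on the first call only."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("connection reset before first token")
    yield "Hello"
    yield " again"


print("".join(stream_with_retry(flaky_stream, base_delay_s=0.01)))
```

When a failure happens mid-stream, the error propagates so the UI can mark the answer as partial and let the user decide whether to regenerate it.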

5. Moderation Considerations

OpenAI’s moderation layer may terminate a stream early. Monitor the finish_reason field ("stop", "length", "content_filter", etc.) and design your app to handle partial completions or retries as needed.

Advanced: Streaming with FastAPI

FastAPI is a modern, high-performance framework for building APIs with Python. Its async capabilities make it ideal for creating scalable, real-time streaming endpoints that leverage the OpenAI API stream.

Why FastAPI for Streaming?

- Native support for asynchronous I/O

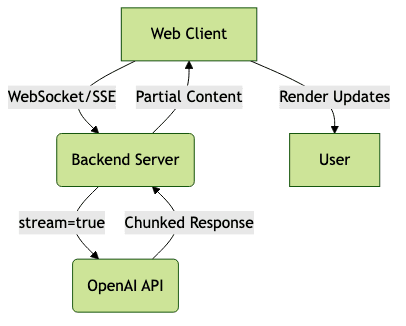

- Easy integration with server-sent events (SSE) or websockets

- Simplifies API data modeling and validation

FastAPI Streaming Example:

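```python
from fastapi import FastAPI
from fastapi.responses import StreamingResponse
from openai import AsyncOpenAI

app = FastAPI()
client = AsyncOpenAI()  # reads OPENAI_API_KEY from the environment


async def stream_openai(prompt: str):
    # The async client keeps the event loop free while waiting on the network.
    stream = await client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    async for chunk in stream:
        if chunk.choices and chunk.choices[0].delta.content:
            yield chunk.choices[0].delta.content


@app.get("/chat-stream")
async def chat_stream(prompt: str):
    # StreamingResponse sends each yielded string to the client as it is produced.
    return StreamingResponse(stream_openai(prompt), media_type="text/plain")
```

This endpoint streams model output incrementally to the client, enabling true real-time AI experiences. Because stream_openai uses the AsyncOpenAI client with async for, waiting on OpenAI does not block FastAPI's event loop, so the server keeps handling other requests while a stream is in progress.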
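The endpoint above sends plain text, which a browser can read with fetch() but which carries no event framing. If you want browsers to consume the stream with the EventSource API, the body has to follow the server-sent events format: each event is one or more "data:" lines followed by a blank line, and a multi-line payload is split across several "data:" lines. Below is a minimal formatter sketched under that assumption; to_sse_event and sse_stream are hypothetical helpers (not part of FastAPI), and the closing "done" event is an illustrative convention.

```python
from typing import Iterable, Iterator, Optional


def to_sse_event(data: str, event: Optional[str] = None) -> str:
    """Format one server-sent event; every line of data gets its own 'data:' field."""
    lines = []
    if event:
        lines.append(f"event: {event}")
    # CR and CRLF are also line terminators in SSE, so normalize before splitting.
    normalized = data.replace("\r\n", "\n").replace("\r", "\n")
    lines.extend(f"data: {line}" for line in normalized.split("\n"))
    return "\n".join(lines) + "\n\n"  # a blank line terminates the event


def sse_stream(pieces: Iterable[str]) -> Iterator[str]:
    """Wrap text pieces as SSE events and finish with a hypothetical 'done' event."""
    for piece in pieces:
        yield to_sse_event(piece)
    yield to_sse_event("", event="done")


print("".join(sse_stream(["Hello", " world\nsecond line"])))
```

In the FastAPI endpoint, you would apply to_sse_event to each piece inside stream_openai and return the response with media_type="text/event-stream". A leading space in a piece survives, because the SSE parser strips only the single space after "data:".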

Streaming in Node.js: A Practical Guide

For developers working in JavaScript, Node.js offers robust event-driven patterns ideal for handling server-sent events (SSE) and streaming responses from OpenAI.

Node.js Streaming Example:

```javascript
const { OpenAI } = require("openai");

// The client reads the key from the OPENAI_API_KEY environment variable by default.
const openai = new OpenAI();

async function streamChatCompletion(prompt) {
  const stream = await openai.chat.completions.create({
    model: "gpt-4o",
    messages: [{ role: "user", content: prompt }],
    stream: true,
  });

  // The SDK parses the server-sent events (including the final [DONE] marker)
  // and yields one chunk object per event.
  for await (const chunk of stream) {
    const content = chunk.choices[0]?.delta?.content;
    if (content) {
      process.stdout.write(content);
    }
  }
}

streamChatCompletion("Summarize the advantages of streaming.").catch(console.error);
```

This code, written for version 4 and later of the openai npm package (which replaced the v3 Configuration/OpenAIApi interface), opens a streamed request, iterates over the parsed chunks, and writes content to stdout as soon as it arrives.
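Whenever you parse the raw HTTP body yourself rather than relying on an SDK's built-in stream iterator, keep in mind that network reads do not line up with event boundaries: one read can contain several events or stop in the middle of a JSON payload (or even in the middle of a UTF-8 character), and the stream ends with a "data: [DONE]" line that is not JSON. The idea is the same in any language; here is a minimal standard-library Python sketch that buffers partial lines. SSEDeltaParser is a hypothetical name, and it handles only the "data:" fields the Chat Completions stream uses.

```python
import codecs
import json
from typing import List


class SSEDeltaParser:
    """Hypothetical incremental parser for the data: lines of a Chat Completions stream."""

    def __init__(self) -> None:
        self._decoder = codecs.getincrementaldecoder("utf-8")()  # survives split characters
        self._buffer = ""
        self.done = False

    def feed(self, raw: bytes) -> List[str]:
        """Consume one network read and return the delta.content strings it completes."""
        self._buffer += self._decoder.decode(raw)
        # The last piece may be an incomplete line; keep it for the next read.
        *lines, self._buffer = self._buffer.split("\n")
        out = []
        for line in lines:
            line = line.rstrip("\r")
            if self.done or not line.startswith("data:"):
                continue  # blank event separators, comments, other fields
            payload = line[len("data:"):].strip()
            if payload == "[DONE]":  # end-of-stream sentinel, not JSON
                self.done = True
                continue
            chunk = json.loads(payload)
            for choice in chunk.get("choices", []):
                content = (choice.get("delta") or {}).get("content")
                if content:
                    out.append(content)
        return out


# Made-up network reads: event boundaries and a UTF-8 character are split across reads.
reads = [
    b'data: {"choices":[{"index":0,"delta":{"content":"Hel"}}]}\n\ndata: {"choi',
    b'ces":[{"index":0,"delta":{"content":"lo \xc3',
    b'\xa9!"}}]}\n\ndata: [DONE]\n\n',
]

parser = SSEDeltaParser()
pieces = []
for raw in reads:
    pieces.extend(parser.feed(raw))
print("".join(pieces))
```

Splitting on each network read instead of buffering is a common source of sporadic JSON parse errors that only appear under real network conditions.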

Best Practices and Common Pitfalls

Implementing streaming endpoints brings unique challenges and opportunities. Here’s how to get the most from your OpenAI API stream integration:

- Content Moderation: Always handle early termination due to moderation. Design your UI to indicate partial completions and allow users to request clarifications or retries.

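- Finish Reason: Monitor finish_reason on each chunk. It is null on intermediate chunks and is set on the final chunk of each choice to "stop", "length", "content_filter", or a function-related value ("tool_calls", or "function_call" with the legacy functions parameter), each of which may require custom handling.

- Function Calls: If using function calling with streaming, process the tool_calls field (function_call in the legacy API) separately as it arrives. Its arguments arrive as string fragments that only form valid JSON once the call is complete.

- Streaming to Web Clients: When streaming to browsers, prefer websockets or server-sent events. Avoid sending streamed data via res.json, which sends a single complete body and therefore forces you to buffer the full response.

- Performance and Cost Considerations: A streamed completion is still a single API request, billed for the same tokens as a non-streamed one, but each stream holds a connection open for the whole generation. For high-traffic applications, monitor concurrent connections and usage, and consider rate limiting. Streamed responses omit token usage by default; setting stream_options={"include_usage": True} adds a final chunk (with an empty choices list) that reports it.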
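For the function-calling point above: in the current Chat Completions API, streamed function calls arrive in delta.tool_calls. The first fragment of each call carries its id and function name, and later fragments append pieces of the JSON arguments string. The arguments can be parsed only after the stream finishes with finish_reason "tool_calls". A minimal sketch of that accumulation follows, again over hand-written dictionaries shaped like streamed chunks; the get_weather tool and its arguments are made up for illustration.

```python
import json
from typing import Any, Dict, List, Optional, Tuple


def collect_tool_calls(chunks: List[Dict[str, Any]]) -> Tuple[Optional[str], List[Dict[str, Any]]]:
    """Merge streamed tool-call fragments by index; parse arguments once the call is complete."""
    calls: Dict[int, Dict[str, Any]] = {}
    finish_reason = None
    for chunk in chunks:
        for choice in chunk.get("choices", []):
            delta = choice.get("delta") or {}
            for frag in delta.get("tool_calls") or []:
                call = calls.setdefault(frag["index"], {"id": None, "name": "", "arguments": ""})
                if frag.get("id"):
                    call["id"] = frag["id"]  # sent with the first fragment of each call
                fn = frag.get("function") or {}
                if fn.get("name"):
                    call["name"] = fn["name"]
                call["arguments"] += fn.get("arguments") or ""  # JSON text arrives in pieces
            finish_reason = choice.get("finish_reason") or finish_reason
    if finish_reason != "tool_calls":
        # "stop", "length", "content_filter", ...: arguments may be absent or truncated.
        return finish_reason, []
    parsed = [
        {"id": c["id"], "name": c["name"], "arguments": json.loads(c["arguments"])}
        for _, c in sorted(calls.items())
    ]
    return finish_reason, parsed


# Made-up chunks for a single hypothetical get_weather call.
sample_chunks = [
    {"choices": [{"index": 0, "delta": {"role": "assistant", "content": None, "tool_calls": [
        {"index": 0, "id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": ""}}]}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {"tool_calls": [
        {"index": 0, "function": {"arguments": "{\"city\": "}}]}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {"tool_calls": [
        {"index": 0, "function": {"arguments": "\"Paris\"}"}}]}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {}, "finish_reason": "tool_calls"}]},
]

reason, tool_calls = collect_tool_calls(sample_chunks)
print(reason, tool_calls)
```

Returning an empty list for any other finish_reason is a deliberately conservative choice: a "length" stop, for example, can leave the arguments as truncated, unparseable JSON.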

[Figure: Streaming data flow in a web app]

Real-World Use Cases and Integration Opportunities

OpenAI’s streaming capabilities enable a broad range of applications:

- Agentic Applications & Chatbots: Real-time conversations and adaptive agents

- Live Translation & Education Tools: Deliver instant translations or tutoring feedback

- Enterprise Integrations: Connect streaming AI to remote MCP servers, workflow engines, or third-party APIs

- Background Mode & Privacy: Stream discreetly in the background, ensuring privacy and reliability

Enterprise features like advanced privacy controls, background processing, and custom integrations are key for large-scale, production-grade deployments.

Conclusion

The OpenAI API stream unlocks true real-time AI for developers, offering lower latency, better user experiences, and powerful integration options across Python, FastAPI, and Node.js. By following implementation best practices and understanding moderation, chunk formats, and endpoint design, you can build robust, production-ready AI applications.

For deeper dives, always consult the OpenAI API documentation and experiment with streaming in your preferred environment. The future of interactive AI is streaming, so start building today!


FAQ