Introduction to Media Streaming Protocols

Media streaming protocols are the backbone of modern multimedia delivery, enabling seamless transmission of audio and video over the internet. Whether you’re watching a live sports event, streaming a movie, or participating in a real-time video call, media streaming protocols determine the quality, latency, and reliability of your experience. As streaming becomes the dominant mode of content consumption in 2025, understanding these protocols is crucial for developers, engineers, and solution architects.

This article dives deep into the most widely used streaming protocols—HTTP Live Streaming (HLS), Dynamic Adaptive Streaming over HTTP (MPEG-DASH), Real-Time Messaging Protocol (RTMP), Real-Time Transport Protocol (RTP), Secure Reliable Transport (SRT), WebRTC, and Real-Time Streaming Protocol (RTSP). We’ll explore their features, use cases, technical architectures, and best practices for implementation. By the end, you’ll be equipped to choose and configure the right protocol for your streaming application.

What Are Media Streaming Protocols?

Media streaming protocols are standardized methods that define how multimedia data (like video and audio) is transmitted from a server to a client over a network. Unlike simple file downloads, streaming protocols enable real-time delivery, allowing playback to begin before the entire file is received. This is essential for live streaming, video on demand (VOD), and interactive communication.

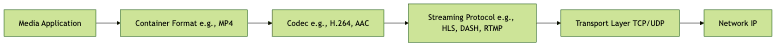

It’s important to distinguish between streaming protocols, codecs, and container formats:

- Streaming protocols (e.g., HLS, DASH, RTMP) handle the transport of data.

- Codecs (e.g., H.264, AAC) compress and encode the audio/video content.

- Container formats (e.g., MP4, MKV) bundle encoded content for storage or streaming.

Diagram: where streaming protocols fit into the multimedia stack, alongside codecs and container formats.

Key Features and Functions of Media Streaming Protocols

Media streaming protocols offer a range of features essential for robust multimedia delivery:

- Real-Time Delivery: Enable near-instant playback for live streaming and low-latency applications.

- Adaptive Bitrate Streaming: Adjust video quality on-the-fly based on network conditions (crucial for HLS and DASH).

- Error Correction: Protocols like SRT keep playback smooth by recovering lost packets, for example through retransmission.

- Transport Methods: Use TCP or UDP depending on the need for reliability or speed.

- Latency: Some protocols (like RTMP, WebRTC) are optimized for low-latency scenarios.

- Scalability: HTTP-based protocols (HLS, DASH) scale easily using CDNs.

- Compatibility: Broad client and device support matters for global reach.

- Security: Many modern protocols build in encryption, authentication, and firewall traversal.

Overview of Major Media Streaming Protocols

HTTP Live Streaming (HLS)

Developed by Apple in 2009, HLS has become the de facto standard for adaptive bitrate streaming, especially on iOS and macOS devices. HLS breaks video content into small segments delivered over HTTP (traditionally MPEG-TS .ts files, with fragmented MP4 supported in newer versions) and serves a playlist (.m3u8) that lists the available streams at different bitrates. Because it runs on standard HTTP infrastructure, it is firewall-friendly and scales easily via CDNs.

HLS Playlist Example:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2000000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
hi/index.m3u8
```
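The playlist above is a master playlist: each #EXT-X-STREAM-INF tag describes one rendition, and the line after it gives that rendition's media playlist URI. A player's adaptive bitrate logic reads these entries and switches between them based on measured throughput. The Python sketch below parses the tags and applies a simple throughput rule; the function names and the 0.8 safety factor are illustrative assumptions, and production players use more elaborate heuristics (buffer level, switch damping, and so on).

```python
import re
from dataclasses import dataclass

@dataclass
class Variant:
    bandwidth: int   # peak bits per second (BANDWIDTH attribute)
    resolution: str  # e.g. "1280x720" (RESOLUTION attribute, may be empty)
    uri: str         # media playlist URI on the line after the tag

# KEY=VALUE pairs; quoted values may contain commas, e.g. CODECS="avc1.64001f,mp4a.40.2"
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

def parse_master_playlist(text: str) -> list[Variant]:
    """Pair each #EXT-X-STREAM-INF tag with the URI line that follows it."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an M3U8 playlist")
    variants = []
    for i, line in enumerate(lines[:-1]):  # a tag on the last line has no URI
        if line.startswith("#EXT-X-STREAM-INF:"):
            attrs = dict(ATTR_RE.findall(line.split(":", 1)[1]))
            variants.append(Variant(
                bandwidth=int(attrs["BANDWIDTH"]),
                resolution=attrs.get("RESOLUTION", ""),
                uri=lines[i + 1],
            ))
    return variants

def pick_variant(variants: list[Variant], measured_bps: float,
                 safety: float = 0.8) -> Variant:
    """Return the highest-bandwidth variant that fits in safety * measured
    throughput, or the lowest variant if none fits."""
    ranked = sorted(variants, key=lambda v: v.bandwidth)
    budget = measured_bps * safety
    fitting = [v for v in ranked if v.bandwidth <= budget]
    return fitting[-1] if fitting else ranked[0]

PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2000000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
hi/index.m3u8
"""

variants = parse_master_playlist(PLAYLIST)
print(pick_variant(variants, measured_bps=3_000_000).uri)  # mid/index.m3u8
```

With 3 Mbps measured, the budget is 2.4 Mbps, so the 720p rendition is the best fit; the safety margin leaves headroom for throughput dips before the next measurement.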

Dynamic Adaptive Streaming over HTTP (MPEG-DASH)

MPEG-DASH is an open standard for adaptive HTTP streaming, enabling broad interoperability across platforms and devices. Unlike HLS, DASH is codec-agnostic and supports a wide range of media formats. DASH uses Media Presentation Description (MPD) files to describe segment locations and available quality levels, making it a flexible choice for global streaming services.

DASH Manifest Example:

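```xml
<?xml version="1.0" encoding="UTF-8"?>
<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" profiles="urn:mpeg:dash:profile:isoff-live:2011"
     type="static" mediaPresentationDuration="PT30M" minBufferTime="PT1.5S">
  <Period>
    <!-- avc1.42E01F: H.264 Constrained Baseline, Level 3.1, the lowest level that allows 1280x720 -->
    <AdaptationSet mimeType="video/mp4" codecs="avc1.42E01F">
      <!-- Hypothetical segment names; the player substitutes $RepresentationID$ and $Number$ -->
      <SegmentTemplate initialization="video_$RepresentationID$_init.mp4"
                       media="video_$RepresentationID$_$Number$.m4s"
                       timescale="1000" duration="4000" startNumber="1" />
      <Representation id="1" bandwidth="800000" width="640" height="360" />
      <Representation id="2" bandwidth="2000000" width="1280" height="720" />
    </AdaptationSet>
  </Period>
</MPD>
```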
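A DASH client starts by reading the MPD and enumerating the Representations it can switch between. The Python sketch below does that with the standard library's ElementTree on a trimmed copy of the manifest above; the function name is a hypothetical helper, and a real client would also resolve segment addressing (SegmentTemplate, SegmentBase, or SegmentList) and handle multiple Periods with timing.

```python
import xml.etree.ElementTree as ET

# DASH elements live in this XML namespace; ElementTree needs it for lookups.
NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

MPD_XML = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT30M" minBufferTime="PT1.5S">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="1" bandwidth="800000" width="640" height="360" />
      <Representation id="2" bandwidth="2000000" width="1280" height="720" />
    </AdaptationSet>
  </Period>
</MPD>"""

def list_representations(mpd_text: str) -> list[dict]:
    """Return id, bandwidth, and size for every Representation in every Period."""
    root = ET.fromstring(mpd_text)
    reps = []
    for aset in root.iterfind("mpd:Period/mpd:AdaptationSet", NS):
        for rep in aset.iterfind("mpd:Representation", NS):
            reps.append({
                "id": rep.get("id"),
                "bandwidth": int(rep.get("bandwidth")),
                "width": int(rep.get("width", 0)),
                "height": int(rep.get("height", 0)),
                # mimeType may be set once on the AdaptationSet and inherited
                "mimeType": rep.get("mimeType", aset.get("mimeType")),
            })
    return reps

for r in list_representations(MPD_XML):
    print(r["id"], r["bandwidth"], f'{r["width"]}x{r["height"]}')
```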

Real-Time Messaging Protocol (RTMP)

Originally developed by Macromedia (now Adobe), RTMP was designed for low-latency streaming to Flash players. Despite Flash’s decline, RTMP remains popular for ingesting live streams into platforms like YouTube and Twitch, often serving as a first-mile protocol before transcoding to HLS or DASH for playback.

RTMP Push Command Example (using FFmpeg):

```shell
ffmpeg -re -i input.mp4 -c:v libx264 -c:a aac -f flv rtmp://live.example.com/app/streamkey
```
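For scripted ingest, the same command can be assembled in Python so that the input file and ingest URL become parameters. This sketch only wraps the FFmpeg flags shown above; it assumes `ffmpeg` is on the PATH, the function names are hypothetical, and it omits the bitrate and keyframe-interval settings that ingest platforms commonly recommend.

```python
import shlex
import subprocess

def rtmp_push_args(input_path: str, rtmp_url: str) -> list[str]:
    """Build the FFmpeg argument list for pushing a file to an RTMP ingest URL."""
    return [
        "ffmpeg",
        "-re",              # read input at its native frame rate, like a live source
        "-i", input_path,
        "-c:v", "libx264",  # encode video as H.264
        "-c:a", "aac",      # encode audio as AAC
        "-f", "flv",        # RTMP carries FLV-packaged media
        rtmp_url,
    ]

def push(input_path: str, rtmp_url: str) -> None:
    # check=True raises CalledProcessError if ffmpeg exits with a non-zero status
    subprocess.run(rtmp_push_args(input_path, rtmp_url), check=True)

if __name__ == "__main__":
    args = rtmp_push_args("input.mp4", "rtmp://live.example.com/app/streamkey")
    print(shlex.join(args))  # same command line as above, safe to paste into a shell
```

Passing a list to `subprocess.run` (rather than a single shell string) avoids quoting problems when file names or stream keys contain special characters.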

Real-Time Transport Protocol (RTP)

RTP is the foundational protocol for real-time IP media transport, especially in VoIP, video conferencing, and professional broadcast. RTP typically works in tandem with RTCP for quality monitoring and with a signaling protocol such as SIP for call setup. It usually runs over UDP, favoring speed and low latency over reliability.

RTP Packet Header Structure:

```
 0                   1                   2                   3
 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|V=2|P|X|  CC   |M|     PT      |       Sequence Number         |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                           Timestamp                           |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|           Synchronization Source (SSRC) Identifier            |
+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+
|            Contributing Source (CSRC) Identifiers             |
|                             ....                              |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
```
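The fixed part of the header is 12 bytes, followed by 0 to 15 CSRC entries of 4 bytes each (the 4-bit CC field gives the count). The Python sketch below packs and unpacks that layout with `struct` in network byte order, following RFC 3550; the class name is illustrative, and it does not parse header extensions or strip padding bytes, which a full implementation must handle. Payload type 96 in the example is from the dynamic range (96 to 127).

```python
import struct
from dataclasses import dataclass, field

@dataclass
class RtpHeader:
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int
    marker: bool = False
    csrcs: list[int] = field(default_factory=list)
    version: int = 2
    padding: bool = False
    extension: bool = False

    def pack(self) -> bytes:
        if len(self.csrcs) > 15:
            raise ValueError("CC is a 4-bit field: at most 15 CSRCs")
        # Byte 0: V (2 bits) | P (1) | X (1) | CC (4)
        b0 = (self.version << 6) | (self.padding << 5) | (self.extension << 4) | len(self.csrcs)
        # Byte 1: M (1 bit) | PT (7 bits)
        b1 = (self.marker << 7) | (self.payload_type & 0x7F)
        header = struct.pack("!BBHII", b0, b1, self.sequence & 0xFFFF,
                             self.timestamp & 0xFFFFFFFF, self.ssrc & 0xFFFFFFFF)
        return header + b"".join(struct.pack("!I", c) for c in self.csrcs)

    @classmethod
    def unpack(cls, data: bytes) -> "RtpHeader":
        if len(data) < 12:
            raise ValueError("RTP fixed header is 12 bytes")
        b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", data[:12])
        cc = b0 & 0x0F
        csrcs = list(struct.unpack(f"!{cc}I", data[12:12 + 4 * cc]))
        return cls(payload_type=b1 & 0x7F, sequence=seq, timestamp=ts, ssrc=ssrc,
                   marker=bool(b1 >> 7), csrcs=csrcs, version=b0 >> 6,
                   padding=bool(b0 & 0x20), extension=bool(b0 & 0x10))

hdr = RtpHeader(payload_type=96, sequence=1, timestamp=3000, ssrc=0x1234ABCD, marker=True)
wire = hdr.pack()
print(wire.hex())  # 80e0000100000bb81234abcd
```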

Secure Reliable Transport (SRT)

SRT is a modern open-source protocol designed for secure, reliable, low-latency media streaming over unpredictable networks such as the public internet. It recovers lost packets through retransmission within a configurable latency window and supports AES encryption, making it well suited to professional broadcast, remote production, and contribution workflows.
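SRT's packet loss recovery is retransmission-based: the receiver tracks sequence numbers, reports gaps to the sender with negative acknowledgements (NAKs), and the sender resends the missing packets while they can still arrive inside the latency window. The Python sketch below shows only the receiver's gap-detection step. It is a simplified illustration with a hypothetical class name, not SRT's actual implementation, which also handles 31-bit sequence-number wraparound, periodic NAK reports, and dropping packets whose latency budget has expired.

```python
class LossTracker:
    """Receiver-side gap detection for NAK-based retransmission (simplified)."""

    def __init__(self):
        self.highest = None   # highest sequence number seen so far
        self.missing = set()  # sequence numbers reported lost, not yet recovered

    def on_packet(self, seq: int) -> list[int]:
        """Record an arriving packet; return newly detected gaps to NAK."""
        if self.highest is None:
            self.highest = seq
            return []
        if seq in self.missing:   # a retransmission filled a gap
            self.missing.discard(seq)
            return []
        if seq <= self.highest:   # duplicate or stale packet
            return []
        gap = list(range(self.highest + 1, seq))
        self.missing.update(gap)
        self.highest = seq
        return gap

tracker = LossTracker()
for seq in [1, 2, 5, 3, 6, 4]:
    lost = tracker.on_packet(seq)
    if lost:
        print(f"after {seq}: NAK {lost}")  # after 5: NAK [3, 4]
```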

WebRTC

WebRTC is a set of browser APIs and underlying protocols for real-time, peer-to-peer communication, built into modern browsers and available on mobile platforms. It supports audio, video, and data channels, enabling ultra-low-latency video calls, conferencing, and interactive live streams, with built-in NAT traversal (ICE, using STUN and TURN servers) and mandatory encryption (DTLS and SRTP).
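WebRTC's NAT traversal is driven by ICE, which asks STUN servers for a peer's public address and falls back to TURN relays when direct paths fail. To make the first step concrete, the Python sketch below builds a STUN Binding Request as defined in RFC 5389: a 20-byte header with the message type, a zero attribute length, the fixed magic cookie 0x2112A442, and a random 96-bit transaction ID. The `query` helper is a hypothetical illustration that returns the raw reply without parsing its XOR-MAPPED-ADDRESS attribute; browsers perform all of this internally.

```python
import os
import socket
import struct
from typing import Optional

BINDING_REQUEST = 0x0001
MAGIC_COOKIE = 0x2112A442

def stun_binding_request(transaction_id: Optional[bytes] = None) -> bytes:
    """Build a STUN Binding Request header with no attributes (RFC 5389)."""
    tid = transaction_id if transaction_id is not None else os.urandom(12)
    if len(tid) != 12:
        raise ValueError("transaction ID must be 96 bits (12 bytes)")
    # type (16 bits), length of attributes after the 20-byte header (16 bits), magic cookie (32 bits)
    return struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE) + tid

def query(server: str, port: int = 3478, timeout: float = 2.0) -> bytes:
    """Send one Binding Request over UDP and return the raw, unparsed response."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(stun_binding_request(), (server, port))
        data, _ = sock.recvfrom(2048)
        return data
```

Port 3478 is the default STUN port; the server host is left to the caller.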

Real-Time Streaming Protocol (RTSP)

RTSP is a network control protocol for managing streaming sessions between media servers and clients. It works like a remote control, with methods for playback and recording (PLAY, PAUSE, RECORD) and session setup (DESCRIBE, SETUP, TEARDOWN), while the media itself is usually carried over RTP. Its low latency makes it widely used in IP cameras, surveillance, and enterprise streaming.
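RTSP requests are plain text and look much like HTTP: a method, a URL, the protocol version, and headers, including a CSeq header that numbers each request so responses can be matched to it. The Python sketch below formats RTSP/1.0 requests (RFC 2326); the camera URL is a placeholder and the `/trackID=1` control path is hypothetical, since the real one comes from the `a=control` attribute in the SDP that DESCRIBE returns. A working client also has to parse responses, carry the Session header from SETUP into later requests, and often authenticate.

```python
from typing import Optional

def rtsp_request(method: str, url: str, cseq: int,
                 headers: Optional[dict[str, str]] = None) -> bytes:
    """Format an RTSP/1.0 request; every line ends with CRLF."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    # A blank line terminates the header block
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")

url = "rtsp://camera.example.com/stream1"  # placeholder camera URL
describe = rtsp_request("DESCRIBE", url, 2, {"Accept": "application/sdp"})
# Hypothetical control URL; a real client reads it from the SDP's a=control line
setup = rtsp_request("SETUP", url + "/trackID=1", 3,
                     {"Transport": "RTP/AVP;unicast;client_port=5000-5001"})
print(describe.decode())
```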

Comparing Media Streaming Protocols

Protocol Comparison Table

Diagram: comparison of major media streaming protocols.

Choosing the Right Protocol (Factors & Use Cases)

Selecting a streaming protocol depends on your application’s requirements:

- Live Streaming: RTMP (ingest), SRT (contribution), HLS/DASH (distribution)

- Video on Demand (VOD): HLS or DASH (adaptive bitrate, scalability)

- Conferencing: WebRTC (peer-to-peer, ultra-low latency)

- Surveillance: RTSP (control, low latency)

- CDN Delivery: HLS and DASH (HTTP-based, firewall-friendly)

Consider latency, scalability, device compatibility, security, and network conditions before implementation.
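The use-case list above can also be encoded as a small lookup table, which is handy when a configuration tool or script needs to suggest defaults. This Python sketch is a hypothetical helper, not a standard API: the use-case keys and the `need_cdn` filter are illustrative assumptions, and it only restates the recommendations listed here rather than weighing latency, device support, or security.

```python
# Recommendations from the use-case list above (hypothetical helper, not a standard API)
RECOMMENDATIONS = {
    "live_ingest": ["RTMP"],
    "live_contribution": ["SRT"],
    "live_distribution": ["HLS", "DASH"],
    "vod": ["HLS", "DASH"],
    "conferencing": ["WebRTC"],
    "surveillance": ["RTSP"],
    "cdn_delivery": ["HLS", "DASH"],
}

# HTTP-based protocols pass through firewalls and cache well on CDNs
HTTP_BASED = {"HLS", "DASH"}

def choose_protocols(use_case: str, need_cdn: bool = False) -> list[str]:
    """Return candidate protocols for a use case, optionally HTTP-based only."""
    try:
        candidates = RECOMMENDATIONS[use_case]
    except KeyError:
        raise ValueError(f"unknown use case: {use_case!r}") from None
    if need_cdn:
        candidates = [p for p in candidates if p in HTTP_BASED]
    return candidates

print(choose_protocols("vod", need_cdn=True))  # ['HLS', 'DASH']
```

An empty result (for example, surveillance with `need_cdn=True`) signals that the requirements conflict and the workflow needs a second protocol, such as RTSP ingest repackaged to HLS.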

Implementation Best Practices for Media Streaming Protocols

- Transport Selection: Choose TCP for reliability (HLS, DASH) or UDP for low latency (RTP, SRT, WebRTC).

- Low Latency Configuration: Reduce segment duration (HLS/DASH), enable low-latency variants such as Low-Latency HLS or low-latency DASH, and tune buffer sizes.

- Adaptive Bitrate Setup: Provide multiple quality renditions and update manifests dynamically.

- Security: Use encryption (TLS/DTLS), authentication, and ensure firewall/NAT traversal where required.

- Scalability: Leverage CDNs, IP multicast (where available), and edge caching for large-scale delivery.
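The segment-duration advice above can be checked with simple arithmetic. The HLS specification (RFC 8216) says a client should not start a live stream at a segment that begins less than three target durations from the end of the playlist, so standard HLS latency is at least roughly three segment durations plus encoding and delivery delay. The sketch below does that estimate; the 3-segment default follows that rule, while `overhead_seconds` is an assumed placeholder to replace with your own measurements.

```python
def estimate_live_latency(segment_seconds: float, segments_behind: int = 3,
                          overhead_seconds: float = 0.0) -> float:
    """Rough glass-to-glass latency for segmented HTTP streaming.

    segments_behind: distance from the live edge where playback starts
                     (3 follows RFC 8216's guidance for HLS)
    overhead_seconds: encoding, packaging, and CDN delay (assumed; measure it)
    """
    if segment_seconds <= 0 or segments_behind < 1:
        raise ValueError("segment duration must be positive and segments_behind >= 1")
    return segment_seconds * segments_behind + overhead_seconds

for seg in (6, 4, 2):
    print(f"{seg}s segments -> ~{estimate_live_latency(seg, overhead_seconds=1.0):.0f}s")
```

Cutting segments from 6 s to 2 s shrinks the buffered portion from about 18 s to about 6 s, at the cost of more requests and less efficient encoding per segment.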

Future Trends in Media Streaming Protocols

In 2025, media streaming protocols are evolving to support AI-powered adaptive streaming, ultra-low latency formats, immersive experiences (AR/VR), and intelligent error correction. As bandwidth and device diversity grow, expect further convergence of protocols and smarter, context-aware delivery networks.

Conclusion

Choosing the right media streaming protocol is critical for delivering high-quality, low-latency, and secure multimedia experiences in 2025. Stay informed to architect reliable and future-proof streaming solutions.


FAQ