Next-Gen Conversational AI: A Practical Guide to OpenAI’s Realtime API

Learn how to build real-time AI applications using the OpenAI Realtime API. This in-depth guide explores implementation details for creating a real-time translation agent, with code examples, best practices, and advanced features.

In the rapidly evolving landscape of artificial intelligence, real-time interaction represents a significant leap forward. OpenAI's Realtime API is at the forefront of this evolution, enabling developers to create dynamic, responsive applications that process and respond to input with low latency. This opens up possibilities for more natural, engaging AI experiences.

In this comprehensive guide, we'll explore the OpenAI Realtime API, from its core concepts to practical implementation examples. Whether you're building a voice assistant, a real-time translator, or an interactive customer service solution, this article will provide you with the knowledge and code examples you need to get started.

Understanding the OpenAI Realtime API

What is the OpenAI Realtime API?

The OpenAI Realtime API is a specialized interface that enables developers to build applications that process and respond to audio and text input in real time. Unlike traditional API endpoints, which follow a request-response pattern, the Realtime API operates over WebSockets, allowing continuous, bidirectional communication between client and server. This architecture enables several critical capabilities:

- Low-latency interactions: Process and respond to user inputs with minimal delay

- Streaming audio processing: Handle continuous audio input and output

- Dynamic session management: Update instructions and parameters on the fly

- Multimodal conversations: Seamlessly combine text and audio interactions

Key Features and Benefits

The OpenAI Realtime API offers several advantages over traditional API approaches:

- Natural conversation flow: Create more human-like interactions with minimal latency

- Interruption handling: Allow users to interrupt AI responses, similar to human conversations

- Adaptive experiences: Modify AI behavior in real-time based on context or user feedback

- Tool integration: Extend capabilities through function calling to external systems

- Event-driven architecture: React to specific events in the conversation for fine-grained control
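To make interruption handling concrete: when the user starts talking over the assistant, a client typically stops local playback and tells the server how much of the reply was actually heard. The sketch below assumes the beta event names `response.cancel` and `conversation.item.truncate`; `interruption_events` is a hypothetical helper, and `item_123` stands in for the ID of the assistant audio item your client would be tracking.

```python
import json


def interruption_events(item_id: str, played_ms: int) -> list[str]:
    """Build the client events sent when the user talks over the assistant."""
    cancel = {"type": "response.cancel"}  # stop generating the current response
    truncate = {
        "type": "conversation.item.truncate",
        "item_id": item_id,          # the assistant audio item being played
        "content_index": 0,          # its first (audio) content part
        "audio_end_ms": played_ms,   # how much of it the user actually heard
    }
    return [json.dumps(cancel), json.dumps(truncate)]


for event in interruption_events("item_123", 1500):
    print(event)
```

Truncating to the played duration keeps the conversation history consistent with what the user really heard, so the model doesn't assume it finished a sentence that was cut off.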

Use Cases: Where Realtime AI Excels

The capabilities of the Realtime API make it ideal for a variety of applications:

- AI assistants: Create responsive voice or chat assistants that feel natural to interact with

- Real-time translation: Bridge language barriers in live conversations

- Interactive learning: Build educational tools that respond immediately to student inputs

- Accessibility solutions: Develop tools that provide real-time transcription and assistance

- Customer service: Implement sophisticated support systems that handle complex interactions

Real-World Example: Building a Translation Agent

To demonstrate the power of the OpenAI Realtime API, let's examine a real-world implementation: an AI Translation Agent built with Python that can facilitate conversations between people speaking different languages.

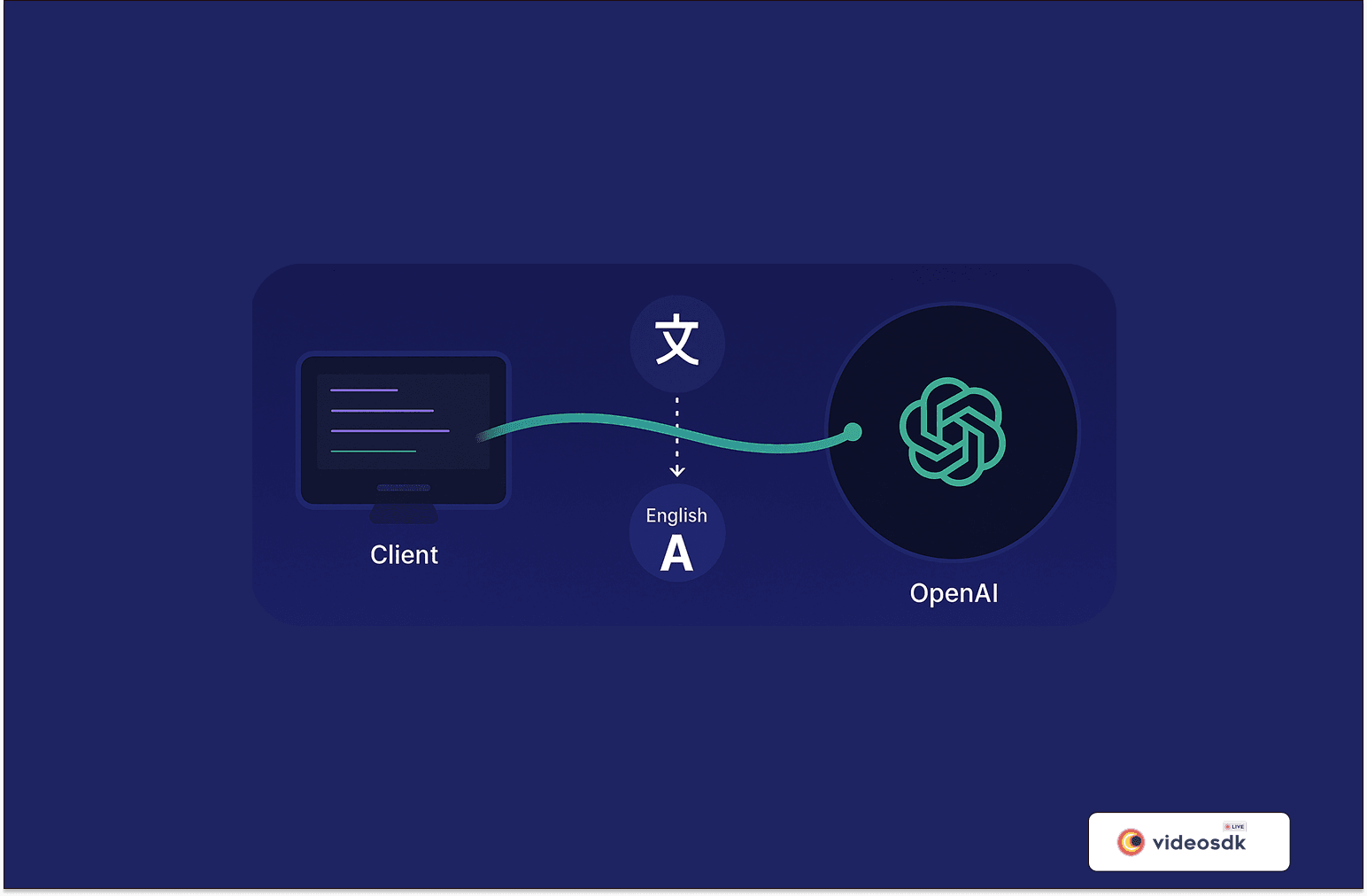

System Architecture

Our translation agent consists of several key components:

- VideoSDK Integration: Handles real-time audio/video communication between participants

- Audio Processing Pipeline: Captures and processes audio data

- OpenAI Realtime API Connection: Manages communication with OpenAI's services

- Translation Logic: Configures the AI model to perform real-time translation

Core Components Implementation

Let's dive into the key implementations that power our translation agent.

1. Setting Up the OpenAI Intelligence Module

At the heart of our system is the `OpenAIIntelligence` class, which manages the connection to OpenAI's Realtime API:

```python
from asyncio import AbstractEventLoop

import aiohttp

# Voices, ServerVADUpdateParams, SessionUpdateParams, AudioFormats,
# InputAudioTranscription and CustomAudioStreamTrack are defined elsewhere
# in the project (not shown).


class OpenAIIntelligence:
    def __init__(
        self,
        loop: AbstractEventLoop,
        api_key: str,
        model: str = "gpt-4o-realtime-preview-2024-10-01",
        instructions: str = (
            "Actively listen to the user's questions and provide concise, relevant responses. "
            "Acknowledge the user's intent before answering. Keep responses under 2 sentences."
        ),
        base_url: str = "api.openai.com",
        voice: Voices = Voices.Alloy,
        temperature: float = 0.8,
        modalities: list[str] | None = None,
        max_response_output_tokens: int = 512,
        turn_detection: ServerVADUpdateParams | None = None,
        input_audio_transcription: InputAudioTranscription | None = None,
        audio_track: CustomAudioStreamTrack | None = None,
    ):
        self.model = model
        self.loop = loop
        self.api_key = api_key
        self.instructions = instructions
        self.base_url = base_url
        self.temperature = temperature
        self.voice = voice
        # None defaults avoid sharing one mutable list/object across instances
        self.modalities = modalities if modalities is not None else ["text", "audio"]
        self.max_response_output_tokens = max_response_output_tokens
        if turn_detection is None:
            turn_detection = ServerVADUpdateParams(
                type="server_vad",
                threshold=0.5,            # speech-detection sensitivity (0.0 to 1.0)
                prefix_padding_ms=300,    # audio kept from just before speech starts
                silence_duration_ms=200,  # silence that ends the user's turn
            )
        self.turn_detection = turn_detection
        self.input_audio_transcription = input_audio_transcription
        self.ws = None
        self.audio_track = audio_track

        # Initialize session and HTTP client
        self._http_session = aiohttp.ClientSession(loop=self.loop)
        self.session_update_params = SessionUpdateParams(
            model=self.model,
            instructions=self.instructions,
            input_audio_format=AudioFormats.PCM16,  # 24 kHz, 16-bit, mono
            output_audio_format=AudioFormats.PCM16,
            temperature=self.temperature,
            voice=self.voice,
            turn_detection=self.turn_detection,
            modalities=self.modalities,
            max_response_output_tokens=self.max_response_output_tokens,
            # Assumed field: enables transcripts of the user's speech, which
            # AIAgent requests via InputAudioTranscription(model="whisper-1")
            input_audio_transcription=self.input_audio_transcription,
        )
        self.pending_instructions = None
```

This class initializes all the parameters needed for our OpenAI Realtime API session, including:

- The AI model to use

- System instructions to guide AI behavior

- Audio format specifications

- Voice selection for audio output

- Turn detection parameters for conversation flow

- Modalities supported (text, audio)
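These typed parameters ultimately travel over the WebSocket as a `session.update` client event. The sketch below approximates that JSON with the same defaults, written as a plain dict; it assumes the beta Realtime API field names, omits `model` (the connection URL already selects it), and `session_update_event` is a hypothetical helper, since the project's own `SessionUpdateParams` serializer isn't shown.

```python
import json


def session_update_event(instructions: str, voice: str = "alloy") -> dict:
    """Approximate session.update payload matching the defaults above."""
    return {
        "type": "session.update",
        "session": {
            "instructions": instructions,
            "voice": voice,
            "modalities": ["text", "audio"],
            "input_audio_format": "pcm16",   # 24 kHz, 16-bit, mono, little-endian
            "output_audio_format": "pcm16",
            "temperature": 0.8,
            "max_response_output_tokens": 512,
            "turn_detection": {
                "type": "server_vad",
                "threshold": 0.5,
                "prefix_padding_ms": 300,
                "silence_duration_ms": 200,
            },
        },
    }


payload = json.dumps(session_update_event("Keep responses under 2 sentences."))
print(payload)
```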

2. Establishing a WebSocket Connection

The connection to OpenAI's Realtime API is established through a WebSocket:

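```python
import logging

logger = logging.getLogger(__name__)


async def connect(self):
    # Build the URL from the configured host and model rather than hardcoding them
    url = f"wss://{self.base_url}/v1/realtime?model={self.model}"
    logger.info("Establishing OpenAI WS connection...")
    self.ws = await self._http_session.ws_connect(
        url=url,
        headers={
            "Authorization": f"Bearer {self.api_key}",
            "OpenAI-Beta": "realtime=v1",  # opt in to the beta Realtime protocol
        },
    )
    logger.info("OpenAI WS connection established")

    # Apply instructions that arrived before the socket was open, so the single
    # session.update below carries both the defaults and the translator prompt
    if self.pending_instructions is not None:
        self.session_update_params.instructions = self.pending_instructions
        self.pending_instructions = None

    # Handle server events in the background
    self.receive_message_task = self.loop.create_task(
        self.receive_message_handler()
    )

    await self.update_session(self.session_update_params)
    # Runs until the WebSocket closes, so schedule connect() as a task if the
    # caller has other work to do
    await self.receive_message_task
```

This method: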

- Formulates the WebSocket URL with the appropriate model

- Establishes a connection with authentication headers

- Sets up session parameters

- Creates a task to handle incoming messages
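Keeping the URL and headers in one small function makes them easy to test and to point at a different host or model. A minimal sketch; `realtime_connection` is a hypothetical helper, not part of the article's code, and it URL-encodes the model name instead of interpolating it raw.

```python
import os
from urllib.parse import urlencode


def realtime_connection(host: str, model: str, api_key: str) -> tuple[str, dict[str, str]]:
    """Return the WebSocket URL and headers for a Realtime API connection."""
    # urlencode escapes the model name safely as a query parameter
    url = f"wss://{host}/v1/realtime?{urlencode({'model': model})}"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "OpenAI-Beta": "realtime=v1",
    }
    return url, headers


url, headers = realtime_connection(
    "api.openai.com",
    "gpt-4o-realtime-preview-2024-10-01",
    os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)
print(url)  # wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview-2024-10-01
```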

3. Processing Audio for Translation

A critical component of our real-time translation is the audio processing pipeline:

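```python
import asyncio
import logging

import librosa
import numpy as np

logger = logging.getLogger(__name__)

OPENAI_SAMPLE_RATE = 24000  # the Realtime API's pcm16 format is 24 kHz mono


# Method of AIAgent
async def add_audio_listener(self, stream: Stream):
    while True:
        try:
            # Wait for the OpenAI WebSocket before pulling audio from the stream
            if not self.intelligence.ws:
                await asyncio.sleep(0.01)
                continue

            # Get audio frame from the stream. Assumes packed s16 frames (as
            # aiortc's Opus decoder produces): to_ndarray() returns shape
            # (1, samples * channels), interleaved, so reshape to (channels, samples)
            frame = await stream.track.recv()
            channels = len(frame.layout.channels)
            audio_data = frame.to_ndarray()[0].reshape(-1, channels).T

            # Process audio: scale to [-1, 1], downmix to mono, resample
            audio_data_float = audio_data.astype(np.float32) / 32768.0
            audio_mono = librosa.to_mono(audio_data_float)
            audio_resampled = librosa.resample(
                audio_mono, orig_sr=frame.sample_rate, target_sr=OPENAI_SAMPLE_RATE
            )

            # Convert back to little-endian 16-bit PCM, clipping so peaks can't wrap
            pcm_frame = (
                np.clip(audio_resampled * 32768.0, -32768, 32767)
                .astype("<i2")
                .tobytes()
            )

            # Send to OpenAI
            await self.intelligence.send_audio_data(pcm_frame)

        except Exception:
            logger.exception("Audio processing error")
            break
```

This audio listener:

- Continuously receives audio frames from the stream

- Converts the audio to a floating-point format for processing

- Splits the interleaved channels and downmixes stereo to mono

- Resamples the audio to the sample rate the API's `pcm16` format expects (24 kHz)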

- Converts back to the PCM16 format required by OpenAI

- Sends the processed audio data to the OpenAI API
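The same conversion can be written with NumPy alone, which makes the sample-rate arithmetic explicit: 20 ms of 48 kHz stereo is 960 frames (1,920 interleaved samples), which becomes 480 mono samples, or 960 bytes, at 24 kHz. This is a sketch with a hypothetical `to_openai_pcm16` helper; it resamples by linear interpolation without an anti-aliasing filter, so for production audio a proper resampler such as `librosa.resample` remains the better choice.

```python
import numpy as np


def to_openai_pcm16(interleaved: np.ndarray, channels: int, src_rate: int,
                    dst_rate: int = 24_000) -> bytes:
    """Convert interleaved int16 PCM to mono little-endian PCM16 at dst_rate."""
    # De-interleave into (frames, channels), then average the channels to mono
    frames = interleaved.reshape(-1, channels).astype(np.float32)
    mono = frames.mean(axis=1)
    # Crude resampling: linearly interpolate at the new sample times
    n_out = int(round(len(mono) * dst_rate / src_rate))
    src_t = np.arange(len(mono)) / src_rate
    dst_t = np.arange(n_out) / dst_rate
    resampled = np.interp(dst_t, src_t, mono)
    # Clip before casting so loud peaks don't wrap around
    pcm = np.clip(np.round(resampled), -32768, 32767).astype("<i2")
    return pcm.tobytes()


silence = np.zeros(1920, dtype=np.int16)  # 20 ms of 48 kHz stereo
print(len(to_openai_pcm16(silence, channels=2, src_rate=48_000)))  # 960
```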

4. Sending Audio Data to OpenAI

Once we've processed the audio, we send it to the OpenAI Realtime API:

```python
import base64


async def send_audio_data(self, audio_data: bytes):
    """audio_data is assumed to be PCM16, 24 kHz, mono, little-endian."""
    # JSON can't carry raw bytes, so the audio is base64-encoded
    base64_audio_data = base64.b64encode(audio_data).decode("utf-8")
    message = InputAudioBufferAppend(audio=base64_audio_data)
    await self.send_request(message)


async def send_request(self, request: ClientToServerMessage):
    # to_json (a project helper) serializes the typed event to the API's JSON format
    request_json = to_json(request)
    await self.ws.send_str(request_json)
```

These methods:

- Encode the binary audio data as base64

- Create an `InputAudioBufferAppend` message

- Serialize the message to JSON

- Send it through the WebSocket connection
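Base64 inflates each payload by about a third, and it is easy to lose track of how much audio a message carries. This sketch, using hypothetical helper names, builds the same `input_audio_buffer.append` event as raw JSON and computes a chunk's duration from its byte length, assuming 24 kHz mono PCM16 input.

```python
import base64
import json

SAMPLE_RATE = 24_000   # pcm16 input: 24 kHz, mono
BYTES_PER_SAMPLE = 2   # 16-bit samples


def append_event(pcm: bytes) -> str:
    """Serialize an input_audio_buffer.append event for a chunk of PCM16 audio."""
    return json.dumps({
        "type": "input_audio_buffer.append",
        "audio": base64.b64encode(pcm).decode("ascii"),
    })


def pcm16_duration_ms(num_bytes: int, sample_rate: int = SAMPLE_RATE) -> float:
    """How much audio a mono PCM16 buffer holds, in milliseconds."""
    return num_bytes * 1000 / (BYTES_PER_SAMPLE * sample_rate)


chunk = bytes(960)  # 480 silent samples
print(pcm16_duration_ms(len(chunk)))  # 20.0
```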

5. Handling OpenAI API Responses

The OpenAI Realtime API communicates through events, which we handle accordingly:

```python
import base64
import json
import logging

import aiohttp

logger = logging.getLogger(__name__)

# EventType is assumed to be a str-based Enum, so its members compare equal
# to the raw "type" strings in server events.


async def receive_message_handler(self):
    # The iterator ends when the socket closes, which ends this task. (Wrapping
    # it in `while True` would spin forever on a closed socket.)
    async for response in self.ws:
        try:
            if response.type == aiohttp.WSMsgType.TEXT:
                self.handle_response(response.data)
            elif response.type == aiohttp.WSMsgType.ERROR:
                logger.error("Error while receiving data from OpenAI: %s", self.ws.exception())
        except Exception:
            logger.exception("Error in receiving message")


def handle_response(self, message: str):
    message = json.loads(message)

    match message["type"]:
        case EventType.SESSION_CREATED:
            logger.info("Server Message: %s", message["type"])

        case EventType.SESSION_UPDATE:
            logger.info("Server Message: %s", message["type"])

        case EventType.RESPONSE_AUDIO_DELTA:
            # Audio arrives as base64 PCM16 chunks; hand them to the output track
            logger.debug("Server Message: %s", message["type"])
            self.on_audio_response(base64.b64decode(message["delta"]))

        case EventType.RESPONSE_AUDIO_TRANSCRIPT_DONE:
            logger.info("Server Message: %s", message["type"])
            print(f"Response Transcription: {message['transcript']}")

        case EventType.ITEM_INPUT_AUDIO_TRANSCRIPTION_COMPLETED:
            logger.info("Server Message: %s", message["type"])
            print(f"Client Transcription: {message['transcript']}")

        case EventType.INPUT_AUDIO_BUFFER_SPEECH_STARTED:
            # The user started talking: drop queued audio so they can interrupt
            logger.info("Server Message: %s", message["type"])
            logger.info("Clearing audio queue")
            self.clear_audio_queue()

        case EventType.ERROR:
            logger.error("Server Error Message: %s", message["error"])
```

This implementation:

- Continuously listens for messages from the WebSocket

- Processes different event types from the API

- Handles audio responses by routing them to the appropriate handler

- Logs transcription events and errors
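As the number of handled events grows, the `match` statement can be swapped for a registry that maps event types to handlers, which also lets several components subscribe to the same event. A minimal sketch with a hypothetical `EventRouter` class; the type strings are the beta API's wire names.

```python
import json
from typing import Callable

Handler = Callable[[dict], None]


class EventRouter:
    """Route Realtime API server events to handlers by their "type" field."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = {}

    def on(self, event_type: str, handler: Handler) -> None:
        self._handlers.setdefault(event_type, []).append(handler)

    def dispatch(self, raw: str) -> bool:
        """Decode one message and call its handlers; return False if none matched."""
        event = json.loads(raw)
        handlers = self._handlers.get(event.get("type"), [])
        for handler in handlers:
            handler(event)
        return bool(handlers)


transcripts: list[str] = []
router = EventRouter()
router.on("response.audio_transcript.done", lambda e: transcripts.append(e["transcript"]))
router.dispatch('{"type": "response.audio_transcript.done", "transcript": "Hola"}')
print(transcripts)  # ['Hola']
```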

6. Dynamic Translation Configuration

The most powerful aspect of our implementation is how we dynamically configure the translation instructions based on the participants' languages:

```python
def on_participant_joined(self, participant: Participant):
    peer_name = participant.display_name
    native_lang = participant.meta_data["preferredLanguage"]
    self.participants_data[participant.id] = {
        "name": peer_name,
        "lang": native_lang,
    }
    print("Participant joined:", peer_name)
    print("Native language:", native_lang)

    # Configure the translator once both sides of the conversation are present
    if len(self.participants_data) == 2:
        # Extract the info for each participant (dicts keep insertion order)
        p1, p2 = self.participants_data.values()

        # Build translator-specific instructions
        translator_instructions = f"""
        You are a real-time translator bridging a conversation between:
        - {p1['name']} (speaks {p1['lang']})
        - {p2['name']} (speaks {p2['lang']})

        Listen and repeat exactly what was said, translated into the other language:
        when {p1['lang']} is spoken, say it in {p2['lang']};
        when {p2['lang']} is spoken, say it in {p1['lang']}.
        Keep track of who is speaking so you translate in the right direction.
        NOTE: Your job is only to translate from one language to the other;
        don't engage in any conversation.
        """

        # Dynamically tell OpenAI to use these instructions. This callback is
        # synchronous, so schedule the coroutine on the agent's event loop.
        self.loop.create_task(
            self.intelligence.update_session_instructions(translator_instructions)
        )
```

This function:

- Tracks participants and their preferred languages

- When two participants are present, it creates customized instructions for the AI

- Specifies exactly how the translation should work between the two languages

- Updates the session instructions dynamically

We update the session instructions with this method:

```python
async def update_session_instructions(self, new_instructions: str):
    """
    Dynamically update the system instructions (the system prompt)
    for translation into the target language.
    """
    if self.ws is None:
        # Not connected yet: stash the instructions; connect() applies them
        self.pending_instructions = new_instructions
        return

    self.session_update_params.instructions = new_instructions
    await self.update_session(self.session_update_params)
```
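Resending the full `SessionUpdateParams` works, but OpenAI's documentation describes `session.update` as changing only the fields present in the event, so a smaller message can carry just the new instructions. A sketch assuming that partial-update behavior; `instructions_update` is a hypothetical helper.

```python
import json


def instructions_update(new_instructions: str) -> str:
    """session.update event that changes only the instructions field."""
    return json.dumps({
        "type": "session.update",
        "session": {"instructions": new_instructions},
    })


print(instructions_update("Translate between English and Spanish only."))
```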

7. Custom Audio Track for Responses

For the translation to work in real time, we need to handle the audio responses from OpenAI. We implement a custom audio track:

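```python
import asyncio
import threading
from fractions import Fraction
from typing import Optional

# CustomAudioTrack is VideoSDK's base class for custom audio tracks. AUDIO_PTIME
# is the frame duration in seconds (aiortc uses 0.020, i.e. 20 ms). Both, and the
# run_process_audio method, are defined elsewhere and not shown here.


class CustomAudioStreamTrack(CustomAudioTrack):
    def __init__(self, loop, handle_interruption: Optional[bool] = True):
        super().__init__()
        self.loop = loop
        self.handle_interruption = handle_interruption
        self._start = None
        self._timestamp = 0
        self.frame_buffer = []
        self.audio_data_buffer = bytearray()
        self.frame_time = 0
        # Output format matches OpenAI's pcm16: 24 kHz, mono, 2 bytes per sample
        self.sample_rate = 24000
        self.channels = 1
        self.sample_width = 2
        self.time_base_fraction = Fraction(1, self.sample_rate)
        # Samples and bytes per outgoing frame (480 samples / 960 bytes at 20 ms)
        self.samples = int(AUDIO_PTIME * self.sample_rate)
        self.chunk_size = int(self.samples * self.channels * self.sample_width)
        self._process_audio_task_queue = asyncio.Queue()
        # run_process_audio (not shown) runs on a background daemon thread
        self._process_audio_thread = threading.Thread(target=self.run_process_audio)
        self._process_audio_thread.daemon = True
        self._process_audio_thread.start()
        self.skip_next_chunk = False
```

When audio responses are received from OpenAI, they're sent to this custom track: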

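```python
def on_audio_response(self, audio_bytes: bytes):
    # Called from the (synchronous) event handler, so schedule the async
    # buffer write on the event loop instead of awaiting it inline
    self.loop.create_task(
        self.audio_track.add_new_bytes(iter([audio_bytes]))
    )
```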

8. The Complete AI Agent

Finally, we bring all these components together in our `AIAgent` class:

```python
import asyncio
import os


class AIAgent:
    def __init__(self, meeting_id: str, authToken: str, name: str):
        self.loop = asyncio.get_event_loop()
        self.participants_data = {}
        self.audio_track = CustomAudioStreamTrack(
            loop=self.loop,
            handle_interruption=True,
        )
        self.meeting_config = MeetingConfig(
            name=name,
            meeting_id=meeting_id,
            token=authToken,
            mic_enabled=True,
            webcam_enabled=False,
            # The agent "speaks" by publishing OpenAI's audio as its microphone
            custom_microphone_audio_track=self.audio_track,
        )
        self.current_participant = None
        self.audio_listener_tasks = {}
        self.agent = VideoSDK.init_meeting(**self.meeting_config)
        self.agent.add_event_listener(
            MeetingHandler(
                on_meeting_joined=self.on_meeting_joined,
                on_meeting_left=self.on_meeting_left,
                on_participant_joined=self.on_participant_joined,
                on_participant_left=self.on_participant_left,
            )
        )

        # Initialize OpenAI connection parameters; the API key is read from
        # the environment instead of being hardcoded
        self.intelligence = OpenAIIntelligence(
            loop=self.loop,
            api_key=os.environ["OPENAI_API_KEY"],
            base_url="api.openai.com",
            # Enables transcripts of what participants say
            input_audio_transcription=InputAudioTranscription(model="whisper-1"),
            audio_track=self.audio_track,
        )
```

This class:

- Initializes the audio processing components

- Configures the meeting parameters

- Sets up event listeners for participant actions

- Creates the OpenAI intelligence connection

- Manages participant data (names and preferred languages) used to configure the translation
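`AIAgent` keeps an `audio_listener_tasks` dict, which suggests one `add_audio_listener` task per participant. The article doesn't show how those tasks are started or stopped, so here is a hypothetical sketch of that bookkeeping with plain asyncio, using a stand-in listener in place of the real audio loop.

```python
import asyncio
from collections.abc import Coroutine
from typing import Any


class ListenerRegistry:
    """One audio-listener task per participant (hypothetical helper)."""

    def __init__(self) -> None:
        self.tasks: dict[str, asyncio.Task] = {}

    def start(self, participant_id: str, coro: Coroutine[Any, Any, None]) -> None:
        # Cancel any stale listener for the same participant before replacing it
        self.stop(participant_id)
        self.tasks[participant_id] = asyncio.create_task(coro)

    def stop(self, participant_id: str) -> None:
        task = self.tasks.pop(participant_id, None)
        if task is not None:
            task.cancel()


async def fake_listener() -> None:
    # Stand-in for add_audio_listener(stream): loops until cancelled
    while True:
        await asyncio.sleep(0.01)


async def main() -> None:
    registry = ListenerRegistry()
    registry.start("alice", fake_listener())  # e.g. from on_participant_joined
    registry.start("bob", fake_listener())
    await asyncio.sleep(0.03)
    registry.stop("alice")  # e.g. from on_participant_left
    print(sorted(registry.tasks))  # ['bob']
    registry.stop("bob")


asyncio.run(main())
```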

Best Practices for Using OpenAI Realtime API

Based on our implementation experience, here are some best practices to follow when working with the OpenAI Realtime API:

1. Optimize Audio Processing

To ensure optimal speech recognition and synthesis:

- Format your audio correctly: Use PCM16 (16-bit, little-endian) at 24 kHz, which is what the API's `pcm16` format expects for both input and output

- Process audio in appropriate chunks: Balance between latency and processing overhead

- Convert to mono: The `pcm16` format is single-channel, so downmix stereo input before sending it

2. Implement Robust Error Handling

Real-time applications need to be resilient:

```python
try:
    # Attempt operation
    await self.intelligence.send_audio_data(pcm_frame)
except Exception:
    # logger.exception records the message together with the full traceback
    logger.exception("Audio processing error")
    # Handle gracefully, possibly reconnecting or notifying the user
```
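Reconnecting gracefully usually means retrying with exponential backoff rather than hammering the server. A hypothetical `with_backoff` helper, sketched with plain asyncio; treating `OSError` (which covers `ConnectionError` and aiohttp's OS-level errors such as `ClientOSError`) and timeouts as retryable is an assumption to adjust for your stack, and it fits best around the step that opens the socket.

```python
import asyncio
import random
from collections.abc import Awaitable, Callable
from typing import TypeVar

T = TypeVar("T")


async def with_backoff(
    operation: Callable[[], Awaitable[T]],
    *,
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: tuple[type[BaseException], ...] = (OSError, asyncio.TimeoutError),
) -> T:
    """Await operation(), retrying retry_on errors with exponential backoff."""
    for attempt in range(attempts):
        try:
            return await operation()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller notify the user
            # base_delay * 1, 2, 4, ... capped at max_delay; random jitter keeps
            # many clients from reconnecting in lockstep
            delay = min(max_delay, base_delay * 2**attempt)
            await asyncio.sleep(delay * random.uniform(0.5, 1.0))
    raise ValueError("attempts must be at least 1")


async def demo() -> str:
    failures = 2

    async def flaky_connect() -> str:
        nonlocal failures
        if failures:
            failures -= 1
            raise ConnectionError("socket dropped")
        return "connected"

    return await with_backoff(flaky_connect, base_delay=0.01)


print(asyncio.run(demo()))  # connected
```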

3. Use Clear, Specific System Instructions

The quality of AI responses depends heavily on the clarity of instructions:

```python
# Clear instructions for a translation task
translator_instructions = f"""
You are a real-time translator bridging a conversation between:
- {p1['name']} (speaks {p1['lang']})
- {p2['name']} (speaks {p2['lang']})

When {p1['lang']} is spoken, translate to {p2['lang']}.
When {p2['lang']} is spoken, translate to {p1['lang']}.
Translate exactly what was said without adding your own commentary.
"""
```

4. Leverage Asynchronous Programming

The real-time nature of the API makes asynchronous programming essential:

- Use `async`/`await` for WebSocket communication

- Implement non-blocking I/O operations

- Utilize tasks for concurrent processing

5. Secure API Key Management

Always protect your OpenAI API keys:

- Store keys in environment variables or secure vaults

- Never hardcode keys in your application

- Implement proper access controls for your application
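In code, this usually means reading the key once at startup and refusing to start without it. A minimal sketch; `OPENAI_API_KEY` is the variable OpenAI's own SDKs read by default, and `load_openai_key` is a hypothetical helper.

```python
import os


def load_openai_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment, failing fast if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set; export it or load it from your secrets manager"
        )
    return key
```

Calling `load_openai_key()` when the agent starts surfaces a missing key immediately, instead of as an authentication error once the WebSocket handshake fails.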

Advanced Features: Going Beyond Basic Implementation

Once you've mastered the basics of the OpenAI Realtime API, you can explore more advanced features:

1. Tool Integration for Enhanced Functionality

You can extend your application by integrating external tools:

```javascript
// Example from OpenAI's documentation (JavaScript reference client);
// fetchWeatherData is a placeholder for your own weather lookup
client.addTool(
  {
    name: 'get_weather',
    description: 'Retrieves the weather for a given location.',
    parameters: {
      type: 'object',
      properties: {
        lat: { type: 'number', description: 'Latitude' },
        lng: { type: 'number', description: 'Longitude' },
        location: { type: 'string', description: 'Name of the location' },
      },
      required: ['lat', 'lng', 'location'],
    },
  },
  async ({ lat, lng, location }) => {
    // Implement tool functionality
    const result = await fetchWeatherData(lat, lng);
    return result;
  },
);
```
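A Python agent like ours, talking to the WebSocket directly rather than through the JavaScript client, would register the tool via `session.update` and answer calls itself. A sketch assuming the beta event shapes: the model's call arrives as a `function_call` item (for example in a `response.output_item.done` event), and the result goes back as a `function_call_output` item followed by `response.create`. `get_weather` returns canned data here and all helper names are hypothetical.

```python
import json

# Tool schema in the shape session.update expects (beta Realtime API)
WEATHER_TOOL = {
    "type": "function",
    "name": "get_weather",
    "description": "Retrieves the weather for a given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "lat": {"type": "number", "description": "Latitude"},
            "lng": {"type": "number", "description": "Longitude"},
            "location": {"type": "string", "description": "Name of the location"},
        },
        "required": ["lat", "lng", "location"],
    },
}


def get_weather(lat: float, lng: float, location: str) -> dict:
    # Hypothetical stand-in: a real tool would call a weather service here
    return {"location": location, "lat": lat, "lng": lng, "temp_c": 21}


TOOLS = {"get_weather": get_weather}


def tools_session_update() -> str:
    """Register the tool and let the model decide when to call it."""
    return json.dumps({
        "type": "session.update",
        "session": {"tools": [WEATHER_TOOL], "tool_choice": "auto"},
    })


def handle_function_call(item: dict) -> list[str]:
    """Run a completed function_call item and build the events returning its result."""
    args = json.loads(item["arguments"])  # arguments arrive as a JSON string
    result = TOOLS[item["name"]](**args)
    return [
        json.dumps({
            "type": "conversation.item.create",
            "item": {
                "type": "function_call_output",
                "call_id": item["call_id"],  # ties the output to the model's call
                "output": json.dumps(result),
            },
        }),
        # Ask the model to continue now that it has the tool result
        json.dumps({"type": "response.create"}),
    ]
```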

2. Multimodal Conversations

Combine text and audio for richer interactions:

```python
# Configure session for multimodal support
self.session_update_params = SessionUpdateParams(
    model=self.model,
    instructions=self.instructions,
    input_audio_format=AudioFormats.PCM16,
    output_audio_format=AudioFormats.PCM16,
    modalities=["text", "audio"],
    # Additional parameters...
)
```
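With both modalities enabled, a user can also type instead of speak. A sketch assuming the beta event shapes: a typed message is added with `conversation.item.create` using `input_text` content, and `response.create` can override the modalities for a single response, for example to get a text-only reply. `send_text_turn` is a hypothetical helper.

```python
import json


def send_text_turn(text: str, audio_reply: bool = True) -> list[str]:
    """Events for a typed user message, optionally requesting a text-only reply."""
    modalities = ["text", "audio"] if audio_reply else ["text"]
    return [
        json.dumps({
            "type": "conversation.item.create",
            "item": {
                "type": "message",
                "role": "user",
                "content": [{"type": "input_text", "text": text}],
            },
        }),
        # Per-response override of the session's default modalities
        json.dumps({"type": "response.create", "response": {"modalities": modalities}}),
    ]


for event in send_text_turn("How do you say 'thank you' in Japanese?", audio_reply=False):
    print(event)
```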

3. Dynamic Session Updates

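Update session parameters based on user preferences or context. OpenAI's documentation notes that the voice cannot be changed once the model has responded with audio in a session, so a voice preference like the one below should be applied before the first spoken response: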

```python
async def update_voice_preference(self, new_voice: Voices):
    """Update the voice used for responses."""
    self.session_update_params.voice = new_voice
    await self.update_session(self.session_update_params)
```

Conclusion

The OpenAI Realtime API represents a significant advancement in creating natural, responsive AI applications. By leveraging WebSocket connections, event-driven architecture, and streaming audio capabilities, developers can build experiences that feel more human and less mechanical.

Our translation agent example demonstrates just one application of this powerful technology. The ability to process and respond to audio in real-time enables a wide range of innovative use cases, from voice assistants to accessibility tools, educational applications, and beyond.

As you embark on your journey with the OpenAI Realtime API, remember that success lies in thoughtful design, robust implementation, and clear communication with the AI. With these principles in mind, you're well-equipped to create compelling real-time experiences that delight and empower your users.

Getting Started

Ready to build your own real-time AI application? Here's how to get started:

- Set up your environment: Obtain an OpenAI API key and set up the necessary development tools

- Understand the API: Familiarize yourself with the events and message formats

- Start simple: Begin with basic text interactions before adding audio capabilities

- Iterate: Test, refine, and expand your implementation based on user feedback

The era of truly interactive AI is here, and the OpenAI Realtime API is your gateway to being part of this exciting frontier.
