AI Interview Assistant: Building Intelligent Interview Agents with VideoSDK

A comprehensive guide on building intelligent AI interview assistants using VideoSDK's real-time communication platform. Learn how to create AI agents that can conduct video interviews, analyze responses, and provide structured feedback to transform your hiring process.

The traditional hiring process is undergoing a revolution. As organizations face increasing pressure to efficiently identify top talent while reducing bias and improving candidate experience, AI interview assistants have emerged as a powerful solution. These intelligent agents can conduct preliminary interviews, assess candidates' responses, and provide valuable insights to hiring managers—all while ensuring consistency and objectivity.

In this developer-focused guide, we'll build an AI interview assistant on VideoSDK's real-time communication platform: a Python agent that joins a meeting, transcribes the candidate's audio, generates follow-up questions with a large language model, and speaks them back. We'll then extend it with structured scoring, recording, and ATS integration.

What is an AI Interview Assistant?

An AI interview assistant is a software application that leverages artificial intelligence to conduct automated interviews with job candidates. Unlike simple chatbots, these assistants can engage in natural conversations, ask follow-up questions based on responses, analyze verbal and non-verbal cues, and provide consistent evaluation metrics.

The benefits of implementing AI interview assistants are substantial:

- Reduced time-to-hire: Screen hundreds of candidates simultaneously without scheduling constraints

- Reduced unconscious bias: Apply the same evaluation criteria to all candidates (the models themselves still need regular bias testing)

- Enhanced candidate experience: Provide flexible interviewing times and immediate feedback

- Deeper insights: Analyze linguistic patterns, sentiment, and other data points human interviewers might miss

- Scalability: Conduct thousands of preliminary interviews without additional resources

Core Components of an AI Interview Agent

To build an effective AI interview assistant, we need several integrated components:

1. Real-time Communication Platform (VideoSDK)

VideoSDK provides the foundation for our interview assistant, enabling:

- High-quality video and audio streaming

- Cross-platform compatibility (web, mobile, desktop)

- Recording capabilities for review and analysis

- Real-time data exchange between the candidate and AI

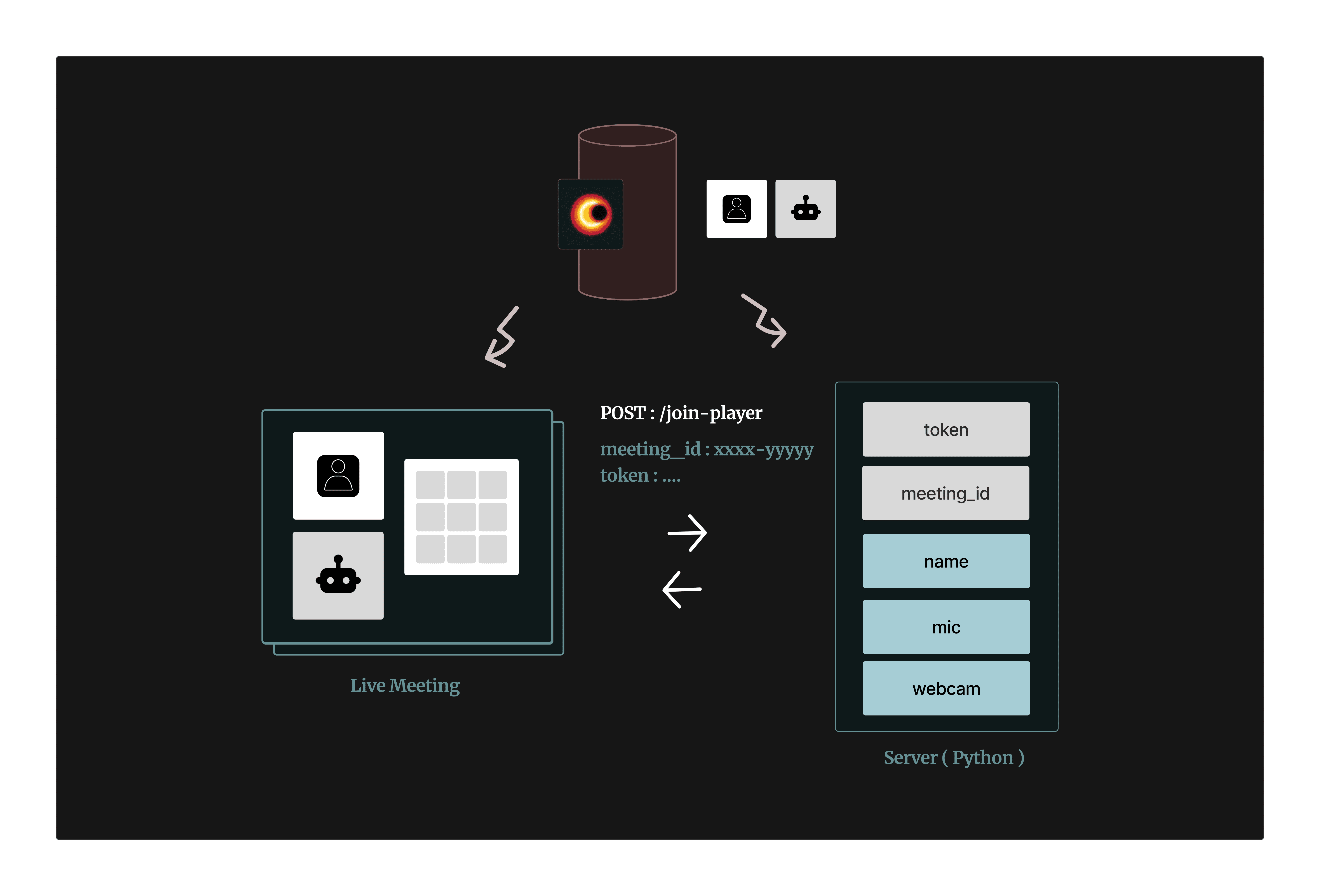

Figure: Client-server architecture showing how the AI agent integrates with VideoSDK's meeting platform

2. AI Services Integration

Our interview assistant requires multiple AI capabilities:

- Speech-to-Text (STT): Converting candidate responses to text for analysis

- Natural Language Understanding (NLU): Interpreting the meaning and intent of responses

- Large Language Models (LLMs): Generating relevant follow-up questions and feedback

- Text-to-Speech (TTS): Converting AI responses to natural-sounding voice

- Visual Analysis (optional): Assessing non-verbal cues and engagement

3. Interview Management System

The orchestration layer that:

- Manages the interview flow and question sequencing

- Stores candidate responses and evaluations

- Applies scoring algorithms and assessment criteria

- Generates reports for hiring managers
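
The orchestration responsibilities listed above can be sketched as a small in-memory class. This is a minimal illustration, not part of VideoSDK: `InterviewFlow` and its method names are hypothetical, and a real system would persist answers and apply scoring rather than keep everything in a dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class InterviewFlow:
    """Hypothetical orchestration helper: sequences questions and stores answers."""

    questions: List[str]
    answers: Dict[str, str] = field(default_factory=dict)
    _index: int = 0

    def next_question(self) -> Optional[str]:
        """Return the current open question, or None when the interview is done."""
        if self._index >= len(self.questions):
            return None
        return self.questions[self._index]

    def record_answer(self, answer: str) -> None:
        """Store the answer to the current question and advance to the next one."""
        question = self.next_question()
        if question is None:
            raise RuntimeError("No open question to answer")
        self.answers[question] = answer
        self._index += 1

    def report(self) -> dict:
        """Summarize progress in a form a hiring manager report could build on."""
        return {
            "asked": self._index,
            "remaining": len(self.questions) - self._index,
            "answers": dict(self.answers),
        }
```

Keeping the sequencing logic separate from the audio and LLM code makes it possible to test the interview flow without joining a meeting.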

Now, let's dive into how to build this system with VideoSDK.

Building an AI Interview Assistant with VideoSDK

1. Setting Up the VideoSDK Environment

First, we need to create the foundation for our interview platform:

    import asyncio
    import logging

    from videosdk import (
        VideoSDK,
        Meeting,
        MeetingConfig,
        MeetingEventHandler,
        ParticipantEventHandler,
        Stream,
    )

    # NOTE: CustomAudioStreamTrack is not defined in this guide. It is assumed to
    # be an audio track class from your own project that the agent writes
    # synthesized speech into.


    class AIInterviewer:
        """
        AI interview assistant that can join video meetings and conduct interviews.
        """

        def __init__(self, meeting_id: str, auth_token: str, interviewer_name: str = "AI Interviewer"):
            # Set up logging
            logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')
            self.logger = logging.getLogger(__name__)

            # Get the current event loop for async operations
            self.loop = asyncio.get_event_loop()

            # Create custom audio track for the agent to speak
            self.audio_track = CustomAudioStreamTrack(
                loop=self.loop,
                handle_interruption=True
            )

            # Configure meeting settings
            self.meeting_config = MeetingConfig(
                name=interviewer_name,
                meeting_id=meeting_id,
                token=auth_token,
                mic_enabled=True,
                webcam_enabled=False,  # Optional: set to True if you want a video avatar
                custom_microphone_audio_track=self.audio_track,
            )

            # Initialize the meeting
            self.meeting = VideoSDK.init_meeting(**self.meeting_config)

            # Track active interviews
            self.active_interviews = {}

            # Initialize AI components. This method is not shown in this guide; it is
            # expected to create self.intelligence (the InterviewIntelligence
            # instance from step 3).
            self.initialize_ai_services()

            # Add event listeners for meeting events
            self.meeting.add_event_listener(
                self.create_meeting_event_handler()
            )

2. Implementing the Audio Processing Pipeline

To understand candidates' responses, we need to capture and process audio:

    import librosa
    import numpy as np

    # Method of AIInterviewer (shown without the class wrapper)
    async def add_audio_listener(self, stream: Stream, candidate_name: str):
        """Process audio from a candidate and send it for transcription."""
        self.logger.info(f"Started processing audio from candidate: {candidate_name}")

        try:
            while True:
                # Get audio frame from VideoSDK stream
                frame = await stream.track.recv()
                audio_data = frame.to_ndarray()[0]

                # Scale 16-bit samples to floats in [-1, 1], downmix to mono, and
                # resample to 16 kHz for speech recognition. The input is assumed
                # to be 48 kHz audio.
                audio_data_float = (audio_data.astype(np.float32) / np.iinfo(np.int16).max)
                audio_mono = librosa.to_mono(audio_data_float.T)
                audio_resampled = librosa.resample(
                    audio_mono, orig_sr=48000, target_sr=16000
                )

                # Convert back to 16-bit PCM bytes for speech recognition
                pcm_frame = ((audio_resampled * np.iinfo(np.int16).max)
                             .astype(np.int16)
                             .tobytes())

                # Send to speech recognition service
                await self.intelligence.process_audio(
                    audio_data=pcm_frame,
                    candidate_id=stream.participant.id,
                    candidate_name=candidate_name
                )
        except Exception as e:
            self.logger.error(f"Error processing audio: {str(e)}")
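
The listener above forwards every individual frame, and a single frame is far too short to transcribe on its own. One way to handle this, sketched here under stated assumptions, is to accumulate PCM frames until a minimum duration is reached and then emit a complete WAV file. `UtteranceBuffer` is a hypothetical helper, and the fixed two-second window is a simplification; voice activity detection would split on pauses instead.

```python
import io
import wave
from typing import Optional


class UtteranceBuffer:
    """Hypothetical helper: collects 16-bit mono PCM until a segment is long enough."""

    def __init__(self, sample_rate: int = 16000, min_seconds: float = 2.0):
        self.sample_rate = sample_rate
        # 16-bit PCM uses 2 bytes per sample
        self.min_bytes = int(sample_rate * min_seconds) * 2
        self._chunks = bytearray()

    def add(self, pcm_frame: bytes) -> Optional[bytes]:
        """Append a frame; return WAV bytes once enough audio is buffered."""
        self._chunks.extend(pcm_frame)
        if len(self._chunks) < self.min_bytes:
            return None
        wav_bytes = self._to_wav(bytes(self._chunks))
        self._chunks.clear()
        return wav_bytes

    def _to_wav(self, pcm: bytes) -> bytes:
        """Wrap raw PCM samples in a WAV container held in memory."""
        buffer = io.BytesIO()
        with wave.open(buffer, "wb") as wav_file:
            wav_file.setnchannels(1)
            wav_file.setsampwidth(2)
            wav_file.setframerate(self.sample_rate)
            wav_file.writeframes(pcm)
        return buffer.getvalue()
```

With one buffer per candidate, the listener would call `add(pcm_frame)` on each frame and only invoke transcription when the result is not `None`.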

3. Creating the AI Intelligence Layer

The intelligence layer handles speech recognition, language understanding, and response generation:

    import asyncio
    import io
    import logging
    import re
    import wave
    from typing import Optional

    from openai import OpenAI


    class InterviewIntelligence:
        """Manages AI capabilities for the interview assistant."""

        def __init__(
            self,
            loop: asyncio.AbstractEventLoop,
            audio_track: "CustomAudioStreamTrack",
            openai_api_key: str,
            system_prompt: Optional[str] = None,
        ):
            self.loop = loop
            self.audio_track = audio_track
            self.openai_api_key = openai_api_key

            # Default system prompt for interview context
            self.system_prompt = system_prompt or """
            You are an AI Interview Assistant conducting a job interview.
            Ask relevant questions based on the candidate's responses.
            Focus on assessing technical skills, problem-solving abilities,
            and cultural fit. Keep your responses professional and encouraging.
            Avoid any biased or discriminatory language.
            """

            # Initialize OpenAI client for language processing
            self.client = OpenAI(api_key=self.openai_api_key)

            # Store conversation history for each candidate
            self.candidate_conversations = {}

        async def process_audio(self, audio_data: bytes, candidate_id: str, candidate_name: str):
            """Process audio data from a candidate."""
            # Initialize conversation history if this is a new candidate
            if candidate_id not in self.candidate_conversations:
                self.candidate_conversations[candidate_id] = [
                    {"role": "system", "content": self.system_prompt}
                ]

            # Transcribe audio using Whisper API.
            # Note: as written, this runs once per incoming audio frame; a real
            # system should buffer frames into longer segments first.
            transcript = await self.transcribe_audio(audio_data)

            if not transcript:
                return

            # The optional "name" field has historically been limited to letters,
            # digits, underscores, and hyphens (max 64 characters), so sanitize
            # the display name before using it
            safe_name = re.sub(r"[^a-zA-Z0-9_-]", "_", candidate_name)[:64] or "candidate"

            # Add candidate's response to conversation history
            self.candidate_conversations[candidate_id].append(
                {"role": "user", "name": safe_name, "content": transcript}
            )

            # Generate AI interviewer response
            response = await self.generate_response(candidate_id)

            # Convert response to speech and send it through the audio track
            await self.text_to_speech(response)

        async def transcribe_audio(self, audio_data: bytes) -> Optional[str]:
            """Transcribe audio to text using OpenAI Whisper."""
            try:
                # The incoming bytes are raw 16-bit mono PCM at 16 kHz, so wrap
                # them in a WAV container before uploading
                buffer = io.BytesIO()
                with wave.open(buffer, "wb") as wav_file:
                    wav_file.setnchannels(1)
                    wav_file.setsampwidth(2)
                    wav_file.setframerate(16000)
                    wav_file.writeframes(audio_data)
                wav_bytes = buffer.getvalue()

                # The OpenAI client is synchronous, so run it in an executor
                response = await self.loop.run_in_executor(
                    None,
                    lambda: self.client.audio.transcriptions.create(
                        model="whisper-1",
                        file=("audio.wav", wav_bytes),
                        language="en"
                    )
                )
                return response.text
            except Exception as e:
                logging.error(f"Transcription error: {str(e)}")
                return None

        async def generate_response(self, candidate_id: str) -> str:
            """Generate interviewer response based on conversation history."""
            try:
                response = await self.loop.run_in_executor(
                    None,
                    lambda: self.client.chat.completions.create(
                        model="gpt-4o-mini",
                        messages=self.candidate_conversations[candidate_id],
                        max_tokens=150,
                        temperature=0.7
                    )
                )

                interviewer_response = response.choices[0].message.content

                # Add interviewer response to conversation history
                self.candidate_conversations[candidate_id].append(
                    {"role": "assistant", "content": interviewer_response}
                )

                return interviewer_response

            except Exception as e:
                logging.error(f"Response generation error: {str(e)}")
                return "I'm sorry, I'm having trouble processing that. Could you please elaborate on your previous answer?"

        async def text_to_speech(self, text: str):
            """Convert text to speech using OpenAI TTS."""
            try:
                response = await self.loop.run_in_executor(
                    None,
                    lambda: self.client.audio.speech.create(
                        model="tts-1",
                        voice="alloy",
                        input=text
                    )
                )

                # Get the audio data. The default output format is MP3, so the
                # audio track must be able to decode it.
                audio_data = response.content

                # Send to audio track for playback
                await self.audio_track.add_new_bytes(iter([audio_data]))

            except Exception as e:
                logging.error(f"Text-to-speech error: {str(e)}")
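
Because `candidate_conversations` grows with every turn, a long interview will eventually send a very large message list to the model. A minimal sketch of one mitigation is to keep the system prompt and only the most recent turns. `trim_history` is a hypothetical helper, and dropping old turns outright is a simplification; summarizing them is another option.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message], max_turns: int = 10) -> List[Message]:
    """Keep all system messages plus the most recent `max_turns` other messages."""
    if max_turns < 0:
        raise ValueError("max_turns must be non-negative")
    system = [m for m in messages if m["role"] == "system"]
    dialogue = [m for m in messages if m["role"] != "system"]
    # A slice of [-0:] would return everything, so handle zero explicitly
    recent = dialogue[-max_turns:] if max_turns > 0 else []
    return system + recent
```

The trimmed list could be passed as `messages` when generating a response, while the full history stays stored for later assessment.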

4. Handling Meeting Events

We need to respond to meeting events like participant joining or leaving:

    # Method of AIInterviewer (shown without the class wrapper)
    def create_meeting_event_handler(self):
        """Create event handlers for meeting events."""

        class InterviewMeetingHandler(MeetingEventHandler):
            def __init__(self, interviewer):
                super().__init__()
                self.interviewer = interviewer

            def on_meeting_joined(self, data):
                self.interviewer.logger.info("AI Interviewer joined the meeting")
                # Could trigger welcome message or interview initialization

            def on_participant_joined(self, participant):
                self.interviewer.logger.info(f"Candidate joined: {participant.display_name}")

                # Create participant event handler to track streams
                participant_handler = self.create_participant_handler(participant)
                participant.add_event_listener(participant_handler)

            def create_participant_handler(self, participant):
                class InterviewParticipantHandler(ParticipantEventHandler):
                    def __init__(self, interviewer, participant):
                        super().__init__()
                        self.interviewer = interviewer
                        self.participant = participant

                    def on_stream_enabled(self, stream):
                        if stream.kind == "audio" and not self.participant.local:
                            # Start processing candidate's audio
                            self.interviewer.loop.create_task(
                                self.interviewer.add_audio_listener(
                                    stream,
                                    self.participant.display_name
                                )
                            )

                    def on_stream_disabled(self, stream):
                        if stream.kind == "audio" and not self.participant.local:
                            # Handle stream ending
                            self.interviewer.logger.info(
                                f"Audio stream from {self.participant.display_name} ended"
                            )

                return InterviewParticipantHandler(self.interviewer, participant)

        return InterviewMeetingHandler(self)
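
The handler above starts a listener task when a stream is enabled but only logs when it is disabled, so the task keeps running. A minimal sketch of one way to track and cancel these tasks follows. `StreamTaskRegistry` is a hypothetical helper, not part of VideoSDK, and it must be used from inside a running event loop.

```python
import asyncio
from typing import Any, Callable, Coroutine, Dict


class StreamTaskRegistry:
    """Hypothetical helper: one cancellable background task per participant."""

    def __init__(self) -> None:
        self._tasks: Dict[str, asyncio.Task] = {}

    def start(self, participant_id: str,
              coro_factory: Callable[[], Coroutine[Any, Any, None]]) -> None:
        """Start a task for the participant, replacing any task already running."""
        self.stop(participant_id)
        loop = asyncio.get_running_loop()
        self._tasks[participant_id] = loop.create_task(coro_factory())

    def stop(self, participant_id: str) -> bool:
        """Cancel the participant's task; return False if none was registered."""
        task = self._tasks.pop(participant_id, None)
        if task is None:
            return False
        task.cancel()
        return True

    def active(self) -> int:
        """Count registered tasks that have not finished."""
        return sum(1 for task in self._tasks.values() if not task.done())
```

In this arrangement, `on_stream_enabled` would call `start(...)` with a factory that creates the listener coroutine, and `on_stream_disabled` would call `stop(...)` with the same participant ID.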

5. Creating an API for Interview Management

Now let's integrate our AI interviewer into a simple API using FastAPI:

    from fastapi import FastAPI, BackgroundTasks, HTTPException
    from pydantic import BaseModel
    from typing import List, Optional
    import httpx
    import os

    app = FastAPI()

    # Store active interviews
    active_interviews = {}

    class InterviewRequest(BaseModel):
        job_title: str
        candidate_email: Optional[str] = None
        interview_duration_minutes: Optional[int] = 30
        custom_questions: Optional[List[str]] = None

    @app.post("/create-interview")
    async def create_interview(request: InterviewRequest, background_tasks: BackgroundTasks):
        """Create a new AI interview session."""
        try:
            # Create a VideoSDK room
            room_id = await create_videosdk_room()

            # Generate a unique interview ID
            interview_id = f"interview_{room_id}"

            # Configure system prompt based on job title and custom questions
            system_prompt = f"""
            You are conducting an interview for the position of {request.job_title}.
            Ask relevant technical and behavioral questions to assess the candidate's fit.
            The interview should last approximately {request.interview_duration_minutes} minutes.
            """

            if request.custom_questions:
                system_prompt += "\nInclude the following questions in your interview:\n"
                for q in request.custom_questions:
                    system_prompt += f"- {q}\n"

            # Create the AI interviewer in the background
            background_tasks.add_task(
                start_ai_interviewer,
                room_id=room_id,
                auth_token=os.getenv("VIDEOSDK_AUTH_TOKEN"),
                interviewer_name="AI Hiring Assistant",
                system_prompt=system_prompt
            )

            # Send invitation email if candidate email is provided.
            # send_interview_invitation is not defined in this guide; supply
            # your own email helper.
            if request.candidate_email:
                background_tasks.add_task(
                    send_interview_invitation,
                    email=request.candidate_email,
                    room_id=room_id,
                    job_title=request.job_title
                )

            # Return interview details
            return {
                "interview_id": interview_id,
                "room_id": room_id,
                "meeting_link": f"https://interviews.example.com/join/{room_id}",
                "status": "created"
            }

        except Exception as e:
            raise HTTPException(status_code=500, detail=f"Failed to create interview: {str(e)}")

    async def create_videosdk_room():
        """Create a VideoSDK room for the interview."""
        auth_token = os.getenv("VIDEOSDK_AUTH_TOKEN")

        async with httpx.AsyncClient() as client:
            response = await client.post(
                "https://api.videosdk.live/v2/rooms",
                headers={"Authorization": auth_token}
            )

            # Fail early on an error response instead of on a missing "roomId" key
            response.raise_for_status()
            data = response.json()
            return data["roomId"]

    async def start_ai_interviewer(room_id, auth_token, interviewer_name, system_prompt):
        """Start the AI interviewer in the specified room."""
        interviewer = AIInterviewer(
            meeting_id=room_id,
            auth_token=auth_token,
            interviewer_name=interviewer_name
        )

        # Configure the intelligence with the custom system prompt
        interviewer.intelligence.system_prompt = system_prompt

        # Store reference to active interview
        active_interviews[room_id] = interviewer

        # Join the meeting. join() is not shown in this guide; it is assumed to
        # wrap the SDK's meeting join call.
        await interviewer.join()
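
The prompt assembly inside `create_interview` can also be pulled out into a pure function, which makes it testable without FastAPI or VideoSDK. This is a sketch under that assumption; `build_system_prompt` is a hypothetical name, and the wording simply mirrors the prompt used in the endpoint.

```python
from typing import List, Optional


def build_system_prompt(
    job_title: str,
    duration_minutes: int = 30,
    custom_questions: Optional[List[str]] = None,
) -> str:
    """Assemble the interviewer system prompt from request fields."""
    lines = [
        f"You are conducting an interview for the position of {job_title}.",
        "Ask relevant technical and behavioral questions to assess the candidate's fit.",
        f"The interview should last approximately {duration_minutes} minutes.",
    ]
    if custom_questions:
        lines.append("Include the following questions in your interview:")
        lines.extend(f"- {question}" for question in custom_questions)
    return "\n".join(lines)
```

Building the prompt from a list of lines also avoids the leading indentation that a triple-quoted string inside a function body carries into the prompt.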

Advanced Features for AI Interview Assistants

Once you have the basic AI interview assistant working, you can enhance it with these advanced features:

1. Structured Interview Assessment

Implement a scoring system to evaluate candidates based on predefined criteria:

    import asyncio
    import json

    from openai import OpenAI

    # Reads the API key from the OPENAI_API_KEY environment variable
    client = OpenAI()


    class CandidateAssessment:
        """Evaluates candidate responses against job criteria."""

        def __init__(self, criteria: dict):
            """
            Initialize with assessment criteria.

            Args:
                criteria: Dictionary of criteria and their weights, e.g.,
                    {"technical_skills": 0.4, "communication": 0.3, "problem_solving": 0.3}
            """
            self.criteria = criteria
            self.scores = {k: 0 for k in criteria.keys()}
            self.evidence = {k: [] for k in criteria.keys()}

        async def evaluate_response(self, response: str, context: str):
            """
            Evaluate a candidate response against the criteria.

            Args:
                response: The candidate's response text
                context: The question or conversation context
            """
            # Example evaluation using OpenAI
            prompt = f"""
            Question/Context: {context}
            Candidate Response: {response}

            Evaluate this response for a job interview on the following criteria:
            {', '.join(self.criteria.keys())}

            For each criterion, provide:
            1. A score from 1-10
            2. Brief justification (1-2 sentences)
            3. Key strengths and weaknesses

            Format: JSON with keys for each criterion, containing score, justification, strengths, and weaknesses.
            """

            # Send to OpenAI for evaluation. The synchronous client runs in a
            # worker thread so it does not block the event loop, and the result
            # is named `completion` so it does not shadow the candidate's
            # `response` text. JSON mode needs a model that supports
            # response_format, so this uses the same model as the interviewer.
            completion = await asyncio.to_thread(
                client.chat.completions.create,
                model="gpt-4o-mini",
                messages=[{"role": "system", "content": "You are an expert HR evaluator."},
                          {"role": "user", "content": prompt}],
                response_format={"type": "json_object"}
            )

            # Parse evaluation
            evaluation = json.loads(completion.choices[0].message.content)

            # Update scores and evidence
            for criterion, data in evaluation.items():
                if criterion in self.scores:
                    # Normalize score to 0-1 range
                    score = data["score"] / 10.0
                    # Update with running average
                    current_evidence_count = len(self.evidence[criterion])
                    if current_evidence_count > 0:
                        self.scores[criterion] = (self.scores[criterion] * current_evidence_count + score) / (current_evidence_count + 1)
                    else:
                        self.scores[criterion] = score

                    # Add evidence
                    self.evidence[criterion].append({
                        "response": response,
                        "justification": data["justification"],
                        "strengths": data["strengths"],
                        "weaknesses": data["weaknesses"]
                    })

        def get_final_score(self):
            """Calculate weighted final score."""
            weighted_score = 0
            for criterion, score in self.scores.items():
                weighted_score += score * self.criteria[criterion]

            return {
                "total_score": weighted_score,
                "criterion_scores": self.scores,
                "evidence": self.evidence
            }
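
The scoring arithmetic is easier to verify in isolation. The sketch below restates the running average and weighted total as two standalone functions; the function names are hypothetical, and the check that weights sum to 1 is an added assumption that the class above does not enforce.

```python
import math
from typing import Dict


def running_average(previous_avg: float, count: int, new_value: float) -> float:
    """Fold one new score into an average that already covers `count` scores."""
    return (previous_avg * count + new_value) / (count + 1)


def weighted_total(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine per-criterion scores (0-1) using weights that must sum to 1."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("Criterion weights must sum to 1")
    return sum(scores[name] * weight for name, weight in weights.items())
```

As a worked example, a candidate who scores 8 and then 6 out of 10 on technical skills has a normalized average of (0.8 + 0.6) / 2 = 0.7. With communication at 0.9, problem solving at 0.5, and weights of 0.4, 0.3, and 0.3, the total is 0.7 × 0.4 + 0.9 × 0.3 + 0.5 × 0.3 = 0.70.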

2. Video Recording and Analysis

Enable recording and analysis of the interview for future review:

    import asyncio
    import json
    import logging
    import os
    import time
    import wave


    class InterviewRecorder:
        """Records and analyzes interview sessions."""

        def __init__(self, meeting_id, storage_path="recordings"):
            self.meeting_id = meeting_id
            self.storage_path = storage_path
            self.recording_tasks = {}

            # Ensure storage directory exists
            os.makedirs(storage_path, exist_ok=True)

        async def start_recording(self, participant_id, stream):
            """Start recording a participant's audio/video stream."""
            # Create file paths
            timestamp = int(time.time())
            audio_path = f"{self.storage_path}/{self.meeting_id}_{participant_id}_{timestamp}.wav"
            metadata_path = f"{self.storage_path}/{self.meeting_id}_{participant_id}_{timestamp}.json"

            # Open a WAV writer so the file gets a valid header. These defaults
            # (mono, 16-bit, 48 kHz) are assumptions and are replaced with the
            # values reported by the first audio frame.
            audio_file = wave.open(audio_path, "wb")
            audio_file.setnchannels(1)
            audio_file.setsampwidth(2)
            audio_file.setframerate(48000)

            # Record metadata
            metadata = {
                "meeting_id": self.meeting_id,
                "participant_id": participant_id,
                "start_time": timestamp,
                "stream_type": stream.kind
            }

            with open(metadata_path, "w") as f:
                json.dump(metadata, f)

            # Start recording task
            self.recording_tasks[participant_id] = asyncio.create_task(
                self._record_stream(stream, audio_file, metadata)
            )

        async def _record_stream(self, stream, audio_file, metadata):
            """Record audio/video stream to file."""
            format_set = False
            try:
                while True:
                    frame = await stream.track.recv()

                    # For audio streams
                    if stream.kind == "audio":
                        if not format_set:
                            # Assumes PyAV-style frames with packed 16-bit samples
                            audio_file.setnchannels(len(frame.layout.channels))
                            audio_file.setframerate(frame.sample_rate)
                            format_set = True

                        audio_data = frame.to_ndarray()[0]
                        # Write the raw samples into the WAV file
                        audio_file.writeframes(audio_data.tobytes())

                    # For video streams (if needed)
                    # This would require additional video processing

            except Exception as e:
                logging.error(f"Recording error: {str(e)}")
            finally:
                # Also runs when the task is cancelled by stop_recording
                audio_file.close()

        async def stop_recording(self, participant_id):
            """Stop recording a participant's stream."""
            if participant_id in self.recording_tasks:
                self.recording_tasks[participant_id].cancel()
                del self.recording_tasks[participant_id]

        async def analyze_recording(self, recording_path):
            """Analyze a recorded interview for insights."""
            # This would implement post-interview analysis
            # Could use additional ML models for speech patterns, sentiment, etc.
            pass
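
As a starting point for `analyze_recording`, the sketch below computes two basic signals from a recording using only the standard library: duration and average loudness. It assumes recordings are stored as 16-bit PCM WAV files; `summarize_wav` is a hypothetical helper, and real analysis of speech patterns or sentiment would need dedicated models.

```python
import array
import math
import sys
import wave


def summarize_wav(path: str) -> dict:
    """Return the duration and normalized RMS level of a 16-bit PCM WAV file."""
    with wave.open(path, "rb") as wav_file:
        if wav_file.getsampwidth() != 2:
            raise ValueError("Expected 16-bit PCM audio")
        sample_rate = wav_file.getframerate()
        frame_count = wav_file.getnframes()
        samples = array.array("h", wav_file.readframes(frame_count))

    # WAV data is little-endian; array uses the machine's native byte order
    if sys.byteorder == "big":
        samples.byteswap()

    if samples:
        rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    else:
        rms = 0.0

    return {
        "duration_seconds": frame_count / sample_rate,
        # Scale to the 0-1 range relative to the 16-bit maximum
        "rms_level": rms / 32768.0,
    }
```

A very low RMS level across a whole recording can flag a muted or faulty microphone before any transcript-based analysis is attempted.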

3. Integration with Applicant Tracking Systems (ATS)

Connect your AI interviewer with your existing ATS:

    import logging
    from datetime import datetime, timezone

    import httpx


    class ATSIntegration:
        """Integrates with Applicant Tracking Systems."""

        def __init__(self, ats_api_url, api_key):
            self.ats_api_url = ats_api_url
            self.api_key = api_key
            self.headers = {
                "Authorization": f"Bearer {api_key}",
                "Content-Type": "application/json"
            }

        async def update_candidate_status(self, candidate_id, status, notes=None):
            """Update candidate status in the ATS."""
            payload = {
                "status": status,
                # Use an explicit UTC timestamp so the value is unambiguous
                "updated_at": datetime.now(timezone.utc).isoformat()
            }

            if notes:
                payload["notes"] = notes

            async with httpx.AsyncClient() as client:
                response = await client.patch(
                    f"{self.ats_api_url}/candidates/{candidate_id}",
                    headers=self.headers,
                    json=payload
                )

                if response.status_code != 200:
                    logging.error(f"Failed to update candidate status: {response.text}")
                    return False

                return True

        async def upload_interview_results(self, candidate_id, interview_results):
            """Upload interview results to the ATS."""
            async with httpx.AsyncClient() as client:
                response = await client.post(
                    f"{self.ats_api_url}/candidates/{candidate_id}/interviews",
                    headers=self.headers,
                    json=interview_results
                )

                if response.status_code != 201:
                    logging.error(f"Failed to upload interview results: {response.text}")
                    return False

                return True

Best Practices for AI Interview Assistants

When developing your AI interview assistant, consider these best practices:

1. Ethical Considerations

- Transparency: Inform candidates they're interacting with an AI

- Data Privacy: Implement strict data protection measures

- Bias Mitigation: Regularly test and adjust your models for bias

- Human Oversight: Include human review for final decisions

2. Technical Optimizations

- Latency Management: Optimize audio processing for natural conversation flow

- Error Handling: Implement robust fallbacks when AI services fail

- Scalability: Design your system to handle concurrent interviews

- Testing: Thoroughly test with diverse candidates and scenarios
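
For the error handling point above, one common pattern is to retry a failing AI call a few times with exponential backoff and then fall back to a safe default. The following is a minimal sketch of that pattern; `with_retries` is a hypothetical helper, and the attempt count and delays are illustrative values, not recommendations.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def with_retries(
    call: Callable[[], Awaitable[T]],
    fallback: T,
    attempts: int = 3,
    base_delay: float = 0.5,
) -> T:
    """Await `call` up to `attempts` times, then return `fallback`."""
    for attempt in range(attempts):
        try:
            return await call()
        except Exception:
            if attempt < attempts - 1:
                # Exponential backoff: base_delay, then 2x, then 4x, ...
                await asyncio.sleep(base_delay * (2 ** attempt))
    return fallback
```

For an interviewer, the fallback could be a neutral prompt such as asking the candidate to repeat their answer, so the conversation continues even when a service is briefly unavailable.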

3. User Experience

- Clear Instructions: Provide candidates with clear guidance

- Adaptive Pacing: Allow the AI to adjust to the candidate's speaking style

- Natural Transitions: Create smooth topic transitions

- Feedback Mechanisms: Collect and incorporate candidate feedback

Real-World Applications of AI Interview Assistants

Organizations are using AI interview assistants in various ways:

1. Initial Screening

Conduct first-round interviews at scale to identify promising candidates before human interviews.

2. Technical Assessments

Evaluate technical skills through structured coding or knowledge assessments with real-time feedback.

3. Soft Skills Evaluation

Assess communication skills, problem-solving abilities, and cultural fit through conversational interviews.

4. Remote Hiring

Facilitate global hiring by conducting initial interviews across time zones without scheduling constraints.

5. Internal Mobility

Help existing employees explore new roles within the organization through preliminary career conversations.

Conclusion

Building an AI interview assistant with VideoSDK offers a powerful way to transform your hiring process. By combining real-time communication capabilities with sophisticated AI models, you can create intelligent, scalable interview experiences that benefit both recruiters and candidates.

The architecture and code examples in this guide provide a starting point for your implementation. As AI technologies continue to advance, these systems will become increasingly sophisticated, offering even deeper insights and more natural interactions.
