An AI voice agent is a software application that uses artificial intelligence to conduct human-like conversations through voice. Unlike traditional chatbots that interact via text, voice agents engage with users through spoken language, creating a more natural and accessible experience. In today's digital landscape, these voice agents are revolutionizing customer engagement, streamlining operations, and creating more personalized interactions.

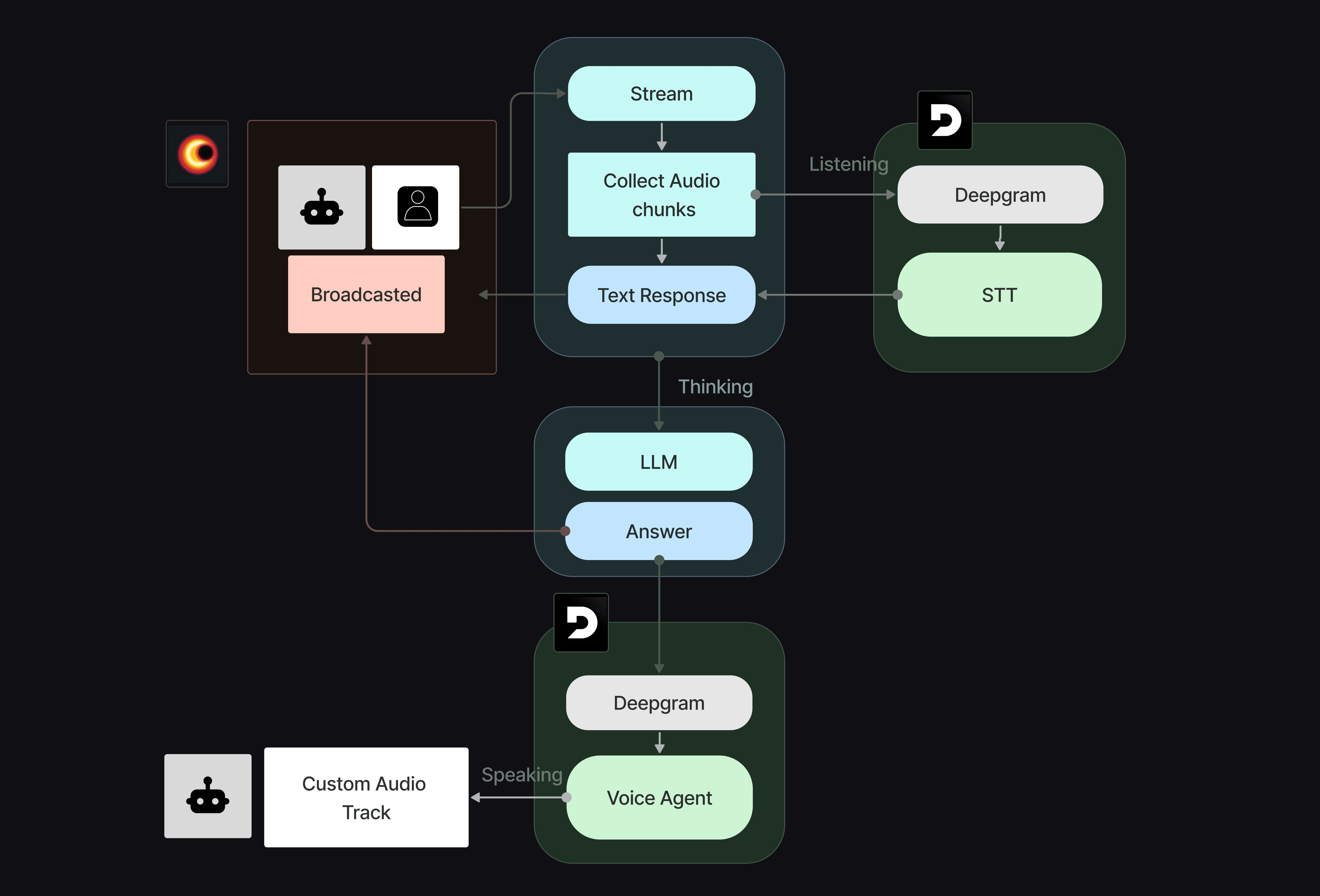

This article explores how you can build powerful AI voice agents using VideoSDK for real-time communication and Deepgram for advanced speech AI capabilities, following the architecture shown in the diagram above.

How Real-Time AI Voice Agents Work

Real-time AI voice agents process speech and generate responses with minimal latency, creating fluid conversations that closely mimic human interaction.

As shown in the architecture diagram, an AI voice agent system consists of several interconnected components working together:

- Real-time Audio Streaming (VideoSDK): Handles audio collection and broadcasting between users and the AI

- Speech-to-Text (Deepgram): Converts human speech into text that can be understood by AI

- Natural Language Processing (LLM): Processes the transcribed text and determines the appropriate response

- Text-to-Speech (Deepgram): Converts the AI's text response back into natural-sounding speech

- Custom Audio Track: Delivers the synthesized voice back to the user through the communication platform

Building a truly responsive AI voice agent requires these components to work together with minimal latency, making the choice of underlying technologies critically important.
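To make the data flow concrete, here is a minimal, framework-free sketch of how one user utterance passes through the stages listed above. The names `SpeechToText`, `Brain`, `TextToSpeech`, `AudioSink`, `VoicePipeline` and `handle_audio` are hypothetical stand-ins for illustration, not VideoSDK or Deepgram APIs. Each stage is a stub so the chain runs without network access.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class Brain(Protocol):
    def respond(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class AudioSink:
    """Hypothetical stand-in for the custom audio track that plays audio to the user."""
    played: List[bytes] = field(default_factory=list)

    def write(self, audio: bytes) -> None:
        self.played.append(audio)


@dataclass
class VoicePipeline:
    stt: SpeechToText
    brain: Brain
    tts: TextToSpeech
    sink: AudioSink

    def handle_audio(self, audio: bytes) -> str:
        transcript = self.stt.transcribe(audio)   # 1. speech-to-text
        reply = self.brain.respond(transcript)    # 2. LLM decides the reply
        speech = self.tts.synthesize(reply)       # 3. text-to-speech
        self.sink.write(speech)                   # 4. deliver audio to the meeting
        return reply


# Stub stages so the pipeline can run offline.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class EchoBrain:
    def respond(self, text: str) -> str:
        return f"You said: {text}"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


sink = AudioSink()
pipeline = VoicePipeline(EchoSTT(), EchoBrain(), FakeTTS(), sink)
print(pipeline.handle_audio(b"hello"))  # You said: hello
```

In the real system the stages are streaming rather than strictly sequential: Deepgram returns partial transcripts while the user is still talking, and TTS audio arrives in chunks, so the stages overlap.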

Introducing VideoSDK and Deepgram

VideoSDK: Powering Real-Time Communications

VideoSDK is a comprehensive platform for building real-time communication applications. It provides developers with the tools needed to implement high-quality audio and video capabilities in their applications, including:

- Low-latency audio and video streaming

- Cross-platform compatibility

- Scalable infrastructure

- Customizable user interfaces

- Simple API integration

For AI voice agents, VideoSDK serves as the communication backbone, handling the real-time audio streaming between users and the AI system.

Deepgram: Advanced Speech AI

Deepgram is a powerful speech AI platform that provides both speech-to-text (STT) and text-to-speech (TTS) capabilities. Key features include:

- High-accuracy speech recognition with the Nova-2 model

- Natural-sounding voice synthesis with the Aura model

- Real-time transcription with minimal latency

- Support for multiple languages and accents

- Advanced features like speaker diarization and end-of-utterance detection

In our AI voice agent architecture, Deepgram handles the conversion of user speech to text and AI-generated text back to speech, providing the critical first and last steps in the conversation flow.
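Both Deepgram services are reached over WebSockets: streaming transcription at `wss://api.deepgram.com/v1/listen` and streaming synthesis at `wss://api.deepgram.com/v1/speak`, with options passed as query parameters and the API key sent in an `Authorization: Token <key>` header (the TTS code later in this article uses that header). The helper below is a minimal sketch that builds such URLs. The function name `deepgram_ws_url` is hypothetical, the parameter values mirror the ones used later in this article, and `language="en"` is an illustrative value.

```python
from typing import Union
from urllib.parse import urlencode

DEEPGRAM_WS_BASE = "wss://api.deepgram.com/v1"


def deepgram_ws_url(endpoint: str, **params: Union[str, int, bool]) -> str:
    """Hypothetical helper: build a Deepgram streaming URL ('listen' or 'speak')."""
    # Encode booleans as lowercase 'true'/'false', as in Deepgram's documented query strings.
    encoded = {
        key: (str(value).lower() if isinstance(value, bool) else value)
        for key, value in params.items()
    }
    return f"{DEEPGRAM_WS_BASE}/{endpoint}?{urlencode(encoded)}"


# Speech-to-text options matching the DeepgramSTT configuration
stt_url = deepgram_ws_url(
    "listen",
    model="nova-2",
    language="en",
    encoding="linear16",
    sample_rate=48000,
    channels=2,
    interim_results=True,
    vad_events=True,
)

# Text-to-speech options matching the DeepgramTTS configuration
tts_url = deepgram_ws_url(
    "speak", encoding="linear16", sample_rate=24000, model="aura-stella-en"
)
print(tts_url)
```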

Building a Real-Time AI Voice Agent: Architecture Overview

Building an effective AI voice agent requires several components working seamlessly together. Let's explore the architecture of our solution:

- Client Interface: A web or mobile application where users interact with the AI agent

- VideoSDK Integration: Manages real-time audio communication

- Deepgram STT: Converts user speech to text

- Intelligence Layer: Processes text and generates appropriate responses using LLMs

- Deepgram TTS: Converts the AI's text responses to speech

- Audio Stream Management: Handles the delivery of the synthesized speech back to the user

This architecture ensures a smooth, real-time conversation flow between the user and the AI agent.

Implementation Guide: Building Your Own AI Voice Agent

Let's dive into the practical implementation of an AI voice agent using VideoSDK and Deepgram, following the architecture depicted in our diagram.

1. Setting Up the Project Structure

Our project consists of several key components that map to different parts of the architecture:

```
├── agent/                      # Voice agent implementation (center of the diagram)
│   ├── agent.py                # Main agent class for meeting handling
│   └── audio_stream_track.py   # Custom audio track implementation (bottom of diagram)
├── client/                     # React frontend for user interaction (left side of diagram)
├── intelligence/               # LLM integration for response generation (middle-bottom of diagram)
├── stt/                        # Speech-to-text implementation using Deepgram (top-right of diagram)
├── tts/                        # Text-to-speech implementation using Deepgram (bottom-right of diagram)
└── main.py                     # Main application entry point tying everything together
```

2. Implementing the Client Interface (Left side of diagram)

The client interface is built using React and VideoSDK's React SDK. It provides a user-friendly interface for interacting with the AI agent.

Key components include:

- MeetingProvider: Sets up the VideoSDK connection

- ParticipantCard: Displays video and audio for each participant

- ChatPanel: Shows the conversation history

- MeetingControls: Provides controls for the meeting

Here's how we set up the main application component:

```tsx
import React from "react";
// `toast` comes from the toast-notification library used by the client
// (its import is not shown in the original).

function App() {
  const [meetingId, setMeetingId] = React.useState<string | null>(null);
  const [isLoading, setIsLoading] = React.useState(false);
  const [useVideo, setUseVideo] = React.useState(false);

  const createMeeting = async () => {
    try {
      setIsLoading(true);
      // Create a new room through the VideoSDK REST API
      const response = await fetch("https://api.videosdk.live/v2/rooms", {
        method: "POST",
        headers: {
          Authorization: import.meta.env.VITE_APP_AUTH_TOKEN,
          "Content-Type": "application/json",
        },
      });

      // fetch only rejects on network errors, so check the HTTP status as well
      if (!response.ok) {
        throw new Error(`Room creation failed with status ${response.status}`);
      }

      const { roomId } = await response.json();
      setMeetingId(roomId);
    } catch (error) {
      toast.error("Failed to create meeting. Please try again.");
      console.error("Error creating meeting:", error);
    } finally {
      setIsLoading(false);
    }
  };

  // Rest of the component implementation...
}
```

3. Implementing the AI Agent Components

The AI agent is the core of our system, handling the interaction flow shown in the diagram. Let's break down each component according to the architecture:

Speech-to-Text (STT) with Deepgram (Top-right in diagram)

The `DeepgramSTT` class handles the conversion of user speech to text:

```python
from asyncio import AbstractEventLoop

from deepgram import DeepgramClient, DeepgramClientOptions, LiveOptions

# STT, Intelligence and Stream are defined elsewhere in the project (not shown).


class DeepgramSTT(STT):
    def __init__(
        self,
        loop: AbstractEventLoop,
        api_key: str,
        language: str,
        intelligence: Intelligence,
    ) -> None:
        # Initialize the Deepgram client; keepalive stops the live socket
        # from timing out during pauses in speech
        self.deepgram_client = DeepgramClient(
            api_key=api_key,
            config=DeepgramClientOptions(options={"keepalive": True}),
        )
        # Configure STT parameters
        self.model = "nova-2"
        self.language = language
        self.intelligence = intelligence
        # Other initialization...

    def start(self, peer_id: str, peer_name: str, stream: Stream):
        # Set up a Deepgram live connection for one participant
        deepgram_options = LiveOptions(
            model=self.model,
            language=self.language,
            smart_format=True,
            # encoding, channels and sample_rate must match the incoming PCM audio
            encoding="linear16",
            channels=2,
            sample_rate=48000,
            interim_results=True,  # partial transcripts while the user is speaking
            vad_events=True,       # SpeechStarted events from voice activity detection
            # Other options...
        )
        # Register event handlers and start the connection
        # ...
```

The STT component listens to the audio stream from VideoSDK, transcribes it in real time, and passes the text to the intelligence layer.
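With `interim_results=True`, Deepgram sends many partial transcripts per utterance, so the STT layer has to decide when the user has finished speaking before calling the LLM. A common approach is to collect segments marked `is_final` and forward the joined text once a result arrives with `speech_final` set, Deepgram's end-of-speech (endpointing) signal. The sketch below works on the raw JSON `Results` messages; the class `UtteranceAggregator` and its callback are hypothetical, and the original project may do this differently inside its elided event handlers.

```python
import json
from typing import Callable, List


class UtteranceAggregator:
    """Hypothetical helper: buffers final segments until Deepgram signals end of speech."""

    def __init__(self, on_utterance: Callable[[str], None]) -> None:
        self.on_utterance = on_utterance
        self._segments: List[str] = []

    def handle_message(self, raw: str) -> None:
        message = json.loads(raw)
        if message.get("type") != "Results":
            return  # ignore Metadata, SpeechStarted, UtteranceEnd, ...
        transcript = message["channel"]["alternatives"][0]["transcript"].strip()
        # Interim results (is_final == False) may still change, so skip them.
        if message.get("is_final") and transcript:
            self._segments.append(transcript)
        # speech_final marks the end of the utterance: hand it to the LLM.
        if message.get("speech_final") and self._segments:
            utterance = " ".join(self._segments)
            self._segments.clear()
            self.on_utterance(utterance)


def result(text: str, is_final: bool, speech_final: bool) -> str:
    """Build a minimal Results message for the usage example."""
    return json.dumps({
        "type": "Results",
        "channel": {"alternatives": [{"transcript": text}]},
        "is_final": is_final,
        "speech_final": speech_final,
    })


received: List[str] = []
aggregator = UtteranceAggregator(received.append)
aggregator.handle_message(result("tell me", False, False))
aggregator.handle_message(result("tell me about", True, False))
aggregator.handle_message(result("yourself", True, True))
print(received)  # ['tell me about yourself']
```

In a live session you would typically also flush the buffer on Deepgram's `UtteranceEnd` message (enabled with the `utterance_end_ms` option), because `speech_final` can fail to trigger when there is background noise.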

Intelligence Layer (Middle-bottom in diagram, the "Thinking" section)

The intelligence layer (LLM component in the diagram) processes the transcribed text and generates appropriate responses:

```python
from typing import Optional

from openai import OpenAI

# Intelligence and TTS are the project's own base classes (not shown).

DEFAULT_SYSTEM_PROMPT = (
    "You are AI Interviewer and you are interviewing a candidate "
    "for a software engineering position."
)


class OpenAIIntelligence(Intelligence):
    def __init__(
        self,
        api_key: str,
        tts: TTS,
        base_url: Optional[str] = "https://api.openai.com/v1",
        model: Optional[str] = None,
        system_prompt: Optional[str] = None,
    ):
        # Keep a handle to the chat API (used as self.client.completions)
        self.client = OpenAI(
            base_url=base_url,
            api_key=api_key,
            max_retries=3,
        ).chat

        self.tts = tts
        self.chat_history = []
        self.model = model or "gpt-3.5-turbo"
        self.system_prompt = system_prompt or DEFAULT_SYSTEM_PROMPT
        # Optional callback that publishes text to the meeting; None until assigned
        self.pubsub = None

    def generate(self, text: str, sender_name: str):
        # Build the conversation history (helper not shown)
        messages = self.build_messages(text, sender_name=sender_name)

        # Generate the LLM completion
        response = self.client.completions.create(
            model=self.model,
            messages=messages,
            max_tokens=100,
            temperature=0.5,
            stream=False,
        )

        response_text = response.choices[0].message.content
        # Send the response to TTS
        self.tts.generate(text=response_text)

        # Publish the message to the meeting
        if self.pubsub is not None:
            self.pubsub(message=f"[Interviewer]: {response_text}")

        # Add the response to the history (helper not shown)
        self.add_response(response_text)
```

This component leverages a large language model (in this case, OpenAI's GPT) to understand user inputs and generate contextually relevant responses.
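The `build_messages` and `add_response` helpers are not shown above. The following is a minimal sketch of what such helpers could look like, assuming the standard OpenAI chat message format (`role`/`content` dictionaries) and a cap on remembered turns to keep prompts short. The `ChatHistory` class, the `max_turns` parameter and the "name: text" prefix are hypothetical choices for illustration.

```python
from typing import Dict, List


class ChatHistory:
    """Hypothetical helper: bounded conversation history in OpenAI chat format."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system_prompt = system_prompt
        self.max_turns = max_turns  # one turn = one user message + one reply
        self.messages: List[Dict[str, str]] = []

    def build_messages(self, text: str, sender_name: str) -> List[Dict[str, str]]:
        # Prefix the speaker's name so the model can tell participants apart.
        self.messages.append({"role": "user", "content": f"{sender_name}: {text}"})
        self._trim()
        return [{"role": "system", "content": self.system_prompt}, *self.messages]

    def add_response(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})
        self._trim()

    def _trim(self) -> None:
        # Drop the oldest messages once the history exceeds the turn budget.
        limit = 2 * self.max_turns
        if len(self.messages) > limit:
            del self.messages[: len(self.messages) - limit]


history = ChatHistory("You are an AI Copilot in a meeting.", max_turns=1)
history.build_messages("Hi", "Alice")
history.add_response("Hello Alice!")
msgs = history.build_messages("How are you?", "Alice")
print([m["role"] for m in msgs])  # ['system', 'assistant', 'user']
```

Capping the history trades long-range memory for smaller prompts, which keeps the LLM call fast in a real-time conversation.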

Text-to-Speech (TTS) with Deepgram (Bottom-right in diagram, the "Speaking" section)

The `DeepgramTTS` class converts the generated text responses back into speech, corresponding to the "Voice Agent" component in the diagram:

```python
import json
from collections.abc import Iterator
from typing import Union

from websockets.sync.client import connect

# TTS is the project's own base class; MediaStreamTrack is the WebRTC track
# type used by the project (for example aiortc's MediaStreamTrack).


class DeepgramTTS(TTS):
    def __init__(self, api_key: str, output_track: MediaStreamTrack):
        # 16-bit linear PCM at 24 kHz from the Aura "stella" voice
        base_url = "wss://api.deepgram.com/v1/speak?encoding=linear16&sample_rate=24000&model=aura-stella-en"
        self.output_track = output_track
        self.api_key = api_key

        # Open the WebSocket connection, authenticating with the API key
        self._socket = connect(
            base_url, additional_headers={"Authorization": f"Token {self.api_key}"}
        )

        # Set up receiver thread to handle incoming audio data
        # ...

    def generate(self, text: Union[str, Iterator[str]]):
        if self._socket is None:
            print("WebSocket is not connected.")
            return

        if isinstance(text, str):
            print(f"Sending: {text}")
            self._socket.send(json.dumps({"type": "Speak", "text": text}))
        elif isinstance(text, Iterator):
            for t in text:
                print(f"Sending: {t}")
                self._socket.send(json.dumps({"type": "Speak", "text": t}))
        # Ask Deepgram to synthesize any text still buffered on the server
        self._socket.send(json.dumps({"type": "Flush"}))
```

The TTS component takes the text generated by the intelligence layer, sends it to Deepgram's TTS API, and forwards the resulting audio to the output track.
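Because `generate` also accepts an iterator of strings, the LLM call could be switched to `stream=True` and its tokens forwarded to TTS as they arrive, so speech starts before the full reply is ready. Synthesis has more context to work with when it receives whole sentences rather than single tokens, so one option is to group tokens into sentences first. The generator below is a minimal sketch of that idea; `sentence_chunks` and its punctuation-based splitting rule are assumptions, not part of the original project.

```python
import re
from typing import Iterable, Iterator

# A chunk ends at . ! or ? optionally followed by closing quotes/brackets and spaces.
_SENTENCE_END = re.compile(r"[.!?][\"')\]]*\s*$")


def sentence_chunks(tokens: Iterable[str]) -> Iterator[str]:
    """Hypothetical helper: group streamed LLM tokens into sentence-sized chunks."""
    buffer = ""
    for token in tokens:
        buffer += token
        if _SENTENCE_END.search(buffer):
            sentence = buffer.strip()
            if sentence:
                yield sentence
            buffer = ""
    # Flush whatever is left if the stream ends without final punctuation.
    if buffer.strip():
        yield buffer.strip()


tokens = ["Hi", ",", " I'm", " your", " AI", " Copilot", "!", " How", " can", " I", " help", "?"]
print(list(sentence_chunks(tokens)))  # ["Hi, I'm your AI Copilot!", 'How can I help?']
```

With the OpenAI client, the tokens would come from `chunk.choices[0].delta.content` of each streamed chunk (which can be `None`, so substitute an empty string), and the result can be passed straight to `self.tts.generate(...)`, since a generator is an `Iterator`. The simple rule will split early on abbreviations such as "e.g.".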

Audio Stream Management (Bottom-left in diagram, "Custom Audio Track")

The `CustomAudioStreamTrack` class manages the outgoing audio stream, ensuring that the AI agent's voice is delivered back to the user through VideoSDK as shown at the bottom of the diagram:

```python
import asyncio
import threading
from fractions import Fraction
from typing import Iterator, Optional

# Assumes an aiortc-compatible WebRTC stack: AudioStreamTrack and AUDIO_PTIME
# (20 ms) come from aiortc.mediastreams, AudioFrame from PyAV.
from aiortc.mediastreams import AUDIO_PTIME, AudioStreamTrack
from av import AudioFrame


class CustomAudioStreamTrack(AudioStreamTrack):
    def __init__(
        self, loop, handle_interruption: Optional[bool] = True,
    ):
        super().__init__()
        self.loop = loop
        self.handle_interruption = handle_interruption
        # Audio format: 24 kHz, mono, 16-bit (matches the Deepgram TTS output)
        self.sample_rate = 24000
        self.channels = 1
        self.sample_width = 2
        self.time_base_fraction = Fraction(1, self.sample_rate)
        # Samples and bytes per frame for one packetization interval
        self.samples = int(AUDIO_PTIME * self.sample_rate)
        self.chunk_size = int(self.samples * self.channels * self.sample_width)

        # Set up the frame buffer and a background thread that turns raw TTS
        # bytes into frames (process_incoming_audio is not shown)
        self.frame_buffer = []
        self.audio_data_buffer = bytearray()
        self._process_audio_task_queue = asyncio.Queue()
        self._process_audio_thread = threading.Thread(target=self.process_incoming_audio)
        self._process_audio_thread.daemon = True
        self._process_audio_thread.start()

    def add_new_bytes(self, audio_bytes: Iterator[bytes]):
        # Queue raw audio from the TTS for processing
        self._process_audio_task_queue.put_nowait(audio_bytes)

    async def recv(self) -> AudioFrame:
        # Implement audio frame delivery for VideoSDK
        ...
```

This component ensures that the audio generated by the TTS is properly formatted and delivered to the VideoSDK stream.
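The `recv` method is elided above, but the numbers in `__init__` determine what it has to deliver. With a 24 kHz, mono, 16-bit stream and a 20 ms packetization time (aiortc's `AUDIO_PTIME` is 0.020 s), each frame holds 24000 × 0.02 = 480 samples, or 480 × 1 × 2 = 960 bytes. The sketch below shows only that buffering arithmetic: it slices raw TTS bytes into fixed-size frames, pads with silence when no speech is queued, and advances the presentation timestamp by one frame's worth of samples (matching a time base of 1/24000). The `PcmFramer` class is hypothetical; a real track would wrap each payload in an `av.AudioFrame`.

```python
from typing import Tuple

SAMPLE_RATE = 24000   # Hz, matches the Deepgram TTS request (sample_rate=24000)
CHANNELS = 1          # mono
SAMPLE_WIDTH = 2      # bytes per sample (16-bit linear PCM)
PTIME = 0.020         # 20 ms per frame, like aiortc's AUDIO_PTIME

SAMPLES_PER_FRAME = int(PTIME * SAMPLE_RATE)               # 480
FRAME_BYTES = SAMPLES_PER_FRAME * CHANNELS * SAMPLE_WIDTH  # 960


class PcmFramer:
    """Hypothetical helper: turns an arbitrary PCM byte stream into 20 ms frames."""

    def __init__(self) -> None:
        self.buffer = bytearray()
        self.pts = 0  # presentation timestamp, counted in samples

    def add_bytes(self, data: bytes) -> None:
        self.buffer.extend(data)

    def clear(self) -> None:
        # Drop speech that has not been played yet (e.g. on interruption).
        self.buffer.clear()

    def next_frame(self) -> Tuple[bytes, int]:
        """Return (payload, pts) for the next frame, padded with silence."""
        chunk = bytes(self.buffer[:FRAME_BYTES])
        del self.buffer[:FRAME_BYTES]
        # Zero bytes are silence in linear PCM; padding keeps every frame 20 ms long.
        chunk = chunk.ljust(FRAME_BYTES, b"\x00")
        pts = self.pts
        self.pts += SAMPLES_PER_FRAME
        return chunk, pts


framer = PcmFramer()
framer.add_bytes(b"\x01" * 1000)  # 1000 bytes = one full frame plus 40 bytes
first, first_pts = framer.next_frame()
second, second_pts = framer.next_frame()
print(len(first), first_pts, len(second), second_pts)  # 960 0 960 480
```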

4. Tying It All Together

The `main.py` file orchestrates all the components shown in the diagram, creating the complete flow from audio collection to response generation and delivery:

```python
import asyncio
import traceback

# CustomAudioStreamTrack, DeepgramTTS, OpenAIIntelligence, DeepgramSTT and
# AIInterviewer are imported from the project packages. stt_api_key (Deepgram),
# llm_api_key (OpenAI), language, room_id and auth_token are configuration
# values defined elsewhere in main.py.


async def run():
    try:
        print("Loading Interviewer...")
        loop = asyncio.get_running_loop()

        # Create the audio track that carries the agent's voice
        audio_track = CustomAudioStreamTrack(
            loop=loop,
            handle_interruption=True,
        )

        # Set up the TTS client (Deepgram, so it reuses the STT API key)
        tts_client = DeepgramTTS(
            api_key=stt_api_key,
            output_track=audio_track,
        )

        # Set up the intelligence client
        intelligence_client = OpenAIIntelligence(
            api_key=llm_api_key,
            model="gpt-4o",
            tts=tts_client,
            system_prompt=(
                "You are an AI Copilot in a meeting. Keep responses short and engaging. "
                "Start by introducing yourself: 'Hi, I'm your AI Copilot!'. "
                "If asked, explain how you work briefly: 'I process speech using Deepgram STT and reply using OpenAI LLM.' "
                "Keep the conversation interactive and avoid long responses unless explicitly requested."
            ),
        )

        # Set up the STT client
        stt_client = DeepgramSTT(
            loop=loop,
            api_key=stt_api_key,
            language=language,
            intelligence=intelligence_client,
        )

        # Create the agent and join the meeting
        interviewer = AIInterviewer(loop=loop, audio_track=audio_track, stt=stt_client, intelligence=intelligence_client)
        await interviewer.join(meeting_id=room_id, token=auth_token)

    except Exception as e:
        traceback.print_exc()
        print("error while joining", e)
```

Use Cases for Real-Time AI Voice Agents

Now that we understand how the components work together in the architecture diagram, let's explore how this technology can be applied. The combination of VideoSDK and Deepgram enables the creation of powerful AI voice agents suitable for various applications:

1. Virtual Interviews and Recruitment

AI voice agents can conduct initial screening interviews, ask standardized questions, and evaluate candidate responses, freeing up human recruiters for more complex assessments.

2. Customer Support

AI voice agents can handle common customer inquiries, troubleshoot basic issues, and escalate complex problems to human agents, providing 24/7 support without increasing staffing costs.

3. Virtual Assistants for Meetings

As demonstrated in our example, AI agents can participate in meetings as virtual assistants, taking notes, answering questions, and providing relevant information in real-time.

4. Healthcare Applications

AI voice agents can conduct preliminary patient assessments, monitor ongoing health conditions, and provide medication reminders, improving healthcare accessibility.

5. Education and Training

AI tutors can provide personalized learning experiences, answer student questions, and conduct practice sessions, making education more accessible and adaptive.

Best Practices for Building Effective AI Voice Agents

Based on our implementation experience with the architecture shown in the diagram, here are some best practices to consider for each component:

1. Prioritize Low Latency

Real-time conversation requires minimal latency. Optimize your implementation to reduce delays at every stage of the processing pipeline shown in the diagram, from audio collection to speech synthesis.
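Optimizing starts with measuring where the time goes. A minimal sketch using only the standard library is to time each stage of a turn with `time.perf_counter` and log the breakdown; the `StageTimer` class and the stage labels are illustrative, and the `time.sleep` calls stand in for real STT, LLM and TTS work.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


class StageTimer:
    """Hypothetical helper: accumulates wall-clock time per pipeline stage."""

    def __init__(self) -> None:
        self.durations_ms: Dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.durations_ms[name] = self.durations_ms.get(name, 0.0) + elapsed_ms

    def total_ms(self) -> float:
        return sum(self.durations_ms.values())

    def report(self) -> str:
        parts = [f"{name}={ms:.0f}ms" for name, ms in self.durations_ms.items()]
        return ", ".join(parts) + f" (total {self.total_ms():.0f}ms)"


timer = StageTimer()
with timer.stage("llm"):
    time.sleep(0.05)   # stand-in for the OpenAI call
with timer.stage("tts_first_audio"):
    time.sleep(0.02)   # stand-in for waiting on the first TTS audio chunk
print(timer.report())
```

For streaming stages, the most useful number is usually time to first output (first transcript, first token, first audio chunk) rather than total duration, since that is what the user perceives as the agent's response delay.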

2. Implement Interruption Handling

Natural conversations often involve interruptions. Ensure your agent can gracefully handle being interrupted: when the user starts speaking, stop the agent's playback and discard any synthesized audio that is still buffered.
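One way to wire this up, sketched under stated assumptions: with `vad_events=True`, Deepgram's live transcription stream emits `SpeechStarted` messages when it detects the user talking, and the agent can react by clearing audio queued for playback and sending a `Clear` message on the TTS WebSocket so Deepgram drops text it has not synthesized yet. The `InterruptionHandler` class and its two callbacks are hypothetical; in this article's project the logic would sit around `CustomAudioStreamTrack` (note its `handle_interruption` flag) and `DeepgramTTS`.

```python
import json
from typing import Callable, List


class InterruptionHandler:
    """Hypothetical helper: stops agent speech when the user starts talking."""

    def __init__(
        self,
        clear_playback: Callable[[], None],
        send_tts_control: Callable[[str], None],
    ) -> None:
        self.clear_playback = clear_playback      # e.g. empty the audio track's buffer
        self.send_tts_control = send_tts_control  # e.g. the TTS WebSocket's send()
        self.agent_speaking = False

    def on_agent_audio(self) -> None:
        # Call whenever synthesized audio is queued for playback.
        self.agent_speaking = True

    def on_playback_finished(self) -> None:
        self.agent_speaking = False

    def handle_stt_message(self, raw: str) -> bool:
        """Return True if this STT message interrupted the agent."""
        message = json.loads(raw)
        if message.get("type") != "SpeechStarted" or not self.agent_speaking:
            return False
        self.clear_playback()
        # Ask Deepgram TTS to discard any text it has not synthesized yet.
        self.send_tts_control(json.dumps({"type": "Clear"}))
        self.agent_speaking = False
        return True


sent: List[str] = []
cleared: List[bool] = []
handler = InterruptionHandler(lambda: cleared.append(True), sent.append)
handler.on_agent_audio()
interrupted = handler.handle_stt_message(json.dumps({"type": "SpeechStarted"}))
print(interrupted, cleared, sent)  # True [True] ['{"type": "Clear"}']
```

Voice activity detection can also fire on background noise, so some agents wait for a short confirmed transcript before cutting themselves off; the trade-off is a slightly slower reaction to genuine interruptions.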

3. Provide Clear Feedback

Users should always know whether the AI agent is listening, processing, or speaking. Implement clear visual and auditory cues to indicate the agent's state.
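A small, explicit state machine makes these cues easy to drive, because the UI only has to render the current state. The sketch below is one assumed way to model it, not part of the original project; the state names, the allowed transitions and the `AgentStatus` class are illustrative, and the `on_change` callback is where you might, for example, publish the state to the client through the meeting's pub/sub channel.

```python
from enum import Enum
from typing import Callable, Dict, List, Set


class AgentState(Enum):
    LISTENING = "listening"
    THINKING = "thinking"
    SPEAKING = "speaking"


# Allowed transitions; anything else points to a bug in the agent loop.
TRANSITIONS: Dict[AgentState, Set[AgentState]] = {
    AgentState.LISTENING: {AgentState.THINKING},
    AgentState.THINKING: {AgentState.SPEAKING, AgentState.LISTENING},
    AgentState.SPEAKING: {AgentState.LISTENING},  # finished or interrupted
}


class AgentStatus:
    """Hypothetical helper: tracks the agent's state and notifies the UI."""

    def __init__(self, on_change: Callable[[AgentState], None]) -> None:
        self.state = AgentState.LISTENING
        self.on_change = on_change

    def set(self, new_state: AgentState) -> None:
        if new_state == self.state:
            return
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"invalid transition {self.state.value} -> {new_state.value}")
        self.state = new_state
        self.on_change(new_state)  # e.g. publish to the client


seen: List[str] = []
status = AgentStatus(lambda s: seen.append(s.value))
status.set(AgentState.THINKING)
status.set(AgentState.SPEAKING)
status.set(AgentState.LISTENING)
print(seen)  # ['thinking', 'speaking', 'listening']
```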

4. Use Appropriate Voice Models

Choose voice models that match your agent's persona and use case. Deepgram's Aura models provide natural-sounding voices that enhance the user experience.

5. Design for Conversation Flow

Craft your system prompts to guide the AI toward conversational responses rather than long monologues. Consider the unique constraints of spoken conversation versus written text.
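Prompting is the main lever, but a light post-processing step can also catch written-text habits that sound wrong when read aloud, such as markdown emphasis, bullet markers and links. The function below is a minimal, regex-based sketch under that assumption; `to_speakable` is a hypothetical helper that you would call on the LLM's reply before passing it to `tts.generate`.

```python
import re


def to_speakable(text: str) -> str:
    """Hypothetical helper: strip common markdown so TTS does not read symbols aloud."""
    # [label](url) -> label
    text = re.sub(r"\[([^\]]+)\]\([^)]+\)", r"\1", text)
    # Remove bullet and heading markers at the start of each line.
    text = re.sub(r"^\s*(?:[-*+]|#{1,6})\s+", "", text, flags=re.MULTILINE)
    # **bold**, *italic*, `code` -> plain text
    text = re.sub(r"(\*\*|\*|`)(.+?)\1", r"\2", text)
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    # End each line with punctuation so the voice pauses between former list items.
    lines = [line if line[-1] in ".!?:;," else line + "." for line in lines]
    return re.sub(r"\s+", " ", " ".join(lines))


reply = "Here are **two** tips:\n- Keep it *short*\n- See [docs](https://example.com)"
print(to_speakable(reply))  # Here are two tips: Keep it short. See docs.
```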

Conclusion

Real-time AI voice agents represent a powerful frontier in human-computer interaction. By combining VideoSDK's real-time communication capabilities with Deepgram's advanced speech AI in the architecture we've explored, developers can create agents that engage users in natural, helpful conversations across a wide range of applications.

The implementation we've walked through provides a foundation for building your own AI voice agents, with each component in the diagram representing a critical part of the system that you can customize and extend to suit your specific use case. As speech AI and real-time communication technologies continue to advance, we can expect AI voice agents to become increasingly capable, natural, and valuable in our daily lives.


FAQ