Introduction to OpenAI Speech-to-Text

OpenAI Speech-to-Text represents a significant advancement in automatic speech recognition (ASR) technology. This service allows developers to seamlessly convert audio input into accurate and coherent text. Leveraging the power of machine learning, OpenAI has created a robust and versatile solution applicable to a wide range of use cases.

What is OpenAI Speech-to-Text?

At its core, OpenAI Speech-to-Text is an API service that takes audio as input and returns its textual representation. The service hides the complexities of speech recognition, allowing developers to focus on integrating this functionality into their applications. Whether you need to transcribe meetings, create accessible content, or develop voice-controlled interfaces, OpenAI's service offers a powerful and efficient solution. It supports both real-time and batch (file-based) transcription, providing flexibility for diverse application requirements.

The Power of Whisper: OpenAI's Speech Recognition Model

OpenAI's Speech-to-Text service is powered by Whisper, a state-of-the-art neural network trained on a massive dataset of diverse audio and text. This extensive training enables Whisper to achieve impressive accuracy across a wide range of accents, languages, and environmental conditions. Whisper is notably robust to background noise and variations in speech patterns, making it a reliable choice for real-world applications. OpenAI reports that it approaches human-level robustness and accuracy on English speech recognition.

Key Features and Benefits of OpenAI's Speech-to-Text Solution

- High Accuracy: Whisper's advanced architecture and training data result in highly accurate transcriptions.

- Multilingual Support: Supports multiple languages, enabling global accessibility.

- Ease of Use: The OpenAI API simplifies integration into various applications.

- Real-time and Offline Processing: Supports both real-time and batch processing of audio data.

- Customizable: Request options such as a language hint, a prompt, and the response format let you tune behavior for specific use cases.

Understanding the OpenAI Whisper Model

The Whisper model is the engine that drives OpenAI's speech-to-text capabilities. Understanding its architecture and training is crucial for appreciating its strengths and limitations.

Architecture and Functionality of the Whisper Model

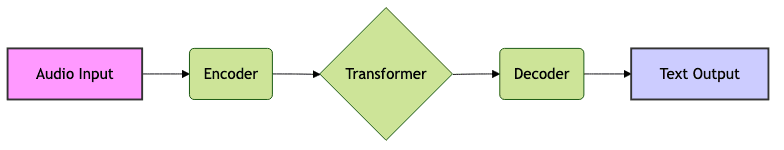

Whisper is a transformer-based encoder-decoder model. The encoder processes the audio input, extracting relevant features. The decoder then generates the corresponding text, leveraging the encoded information. This architecture allows Whisper to capture complex relationships between audio and text, resulting in more accurate transcriptions. Whisper is able to perform multilingual speech recognition, speech translation, and language identification. A simplified visualization of how Whisper works is as follows:

Training Data and Model Robustness

One of the key factors contributing to Whisper's success is the vast amount of training data it was exposed to. OpenAI trained Whisper on 680,000 hours of multilingual and multitask supervised data collected from the web. This dataset includes a wide variety of accents, languages, background noises, and technical jargon, enabling Whisper to generalize well to diverse real-world scenarios. It also covers a broad range of acoustic environments and recording qualities, which makes the model robust to challenging conditions.

Whisper's Multilingual Capabilities and Accuracy

Whisper's ability to handle multiple languages sets it apart from many other speech-to-text services. It supports transcription and translation in a wide array of languages, making it a valuable tool for global applications. This breadth comes from its large multilingual training dataset. Accuracy is generally high, but it varies with the specific language and accent, and tends to be better for languages that are well represented in the training data.
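Since accuracy depends on the language, one practical step is to tell the API which language to expect. The following is a minimal sketch, assuming the `language` (a two-letter ISO-639-1 code) and `prompt` request fields of the transcription endpoint; `build_transcription_params` is a hypothetical helper introduced here for illustration, not part of the OpenAI library.

```python
from typing import Optional


def build_transcription_params(
    model: str = "whisper-1",
    language: Optional[str] = None,
    prompt: Optional[str] = None,
) -> dict:
    """Assemble keyword arguments for a transcription request (hypothetical helper)."""
    params = {"model": model}
    if language is not None:
        code = language.strip().lower()
        # Only accept two-letter codes such as "en" or "de".
        if len(code) != 2 or not code.isalpha():
            raise ValueError(f"expected a two-letter language code, got {language!r}")
        params["language"] = code
    if prompt:
        # A short prompt can carry names or jargon the audio is likely to contain.
        params["prompt"] = prompt
    return params


print(build_transcription_params(language="DE"))
# prints {'model': 'whisper-1', 'language': 'de'}
```

The resulting dictionary could then be unpacked into the library call alongside the opened audio file, for example `create(file=audio_file, **params)`.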

Working Example

This project integrates VideoSDK and the OpenAI Realtime API to create an AI Translator Agent. Below are the setup instructions.

<iframe src="https://www.youtube.com/embed/aKIkGsKc1fc?rel=0" style="position: absolute; top: 0; left: 0; width: 100%; height: 100%; border: 0;" allowfullscreen frameborder="0"></iframe>

Accessing OpenAI Speech-to-Text: API and Libraries

To leverage OpenAI Speech-to-Text, you'll need to use the OpenAI API. Here's a guide on getting started and using the available libraries.

Getting Started with the OpenAI API

- Create an OpenAI Account: If you don't already have one, sign up for an account on the OpenAI website.

- Obtain an API Key: Generate an API key from your OpenAI account dashboard. This key will be used to authenticate your requests to the API.

- Install the OpenAI Library: Use pip to install the OpenAI Python library: `pip install openai`

- Authenticate Your Requests: Include your API key in the header of your API requests or set it as an environment variable.
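The authentication step can be sketched as follows for the case where you build HTTP requests yourself. This assumes the key is stored in the `OPENAI_API_KEY` environment variable and sent as a bearer token; `build_auth_headers` is a hypothetical helper name, not something the OpenAI library provides.

```python
import os


def build_auth_headers(env_var: str = "OPENAI_API_KEY") -> dict:
    """Return HTTP headers carrying the API key read from the environment."""
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request.
        raise RuntimeError(f"{env_var} is not set; export it before running")
    return {"Authorization": f"Bearer {api_key}"}
```

Reading the key from the environment keeps it out of source control. The official client libraries pick up the same variable on their own, so this helper is only needed for hand-written requests.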

Using the OpenAI Python Library: Code Examples

Here's a simple example of how to use the OpenAI Python library to transcribe an audio file:

```python
from openai import OpenAI

# Uses the client interface of openai>=1.0; the older openai.Audio.transcribe call was removed.
client = OpenAI(api_key="YOUR_API_KEY")  # Replace with your actual API key


def transcribe_audio(audio_file_path):
    """Transcribe an audio file with whisper-1; return the text, or None on failure."""
    try:
        with open(audio_file_path, "rb") as audio_file:
            transcript = client.audio.transcriptions.create(
                model="whisper-1",
                file=audio_file,
            )
        return transcript.text
    except Exception as e:
        print(f"Error during transcription: {e}")
        return None


# Example usage
audio_file = "path/to/your/audio.mp3"  # Replace with the path to your audio file
transcription = transcribe_audio(audio_file)

if transcription:
    print(f"Transcription: {transcription}")
```

Integrating with Other Programming Languages: Node.js and JavaScript Examples

For Node.js, you can use the openai package. First, install it using npm: `npm install openai`. Here's an example:

```javascript
const OpenAI = require('openai');
const fs = require('fs');

const openai = new OpenAI({
  apiKey: 'YOUR_API_KEY', // Replace with your actual API key
});

async function transcribeAudio(audioFilePath) {
  try {
    const transcription = await openai.audio.transcriptions.create({
      file: fs.createReadStream(audioFilePath),
      model: "whisper-1",
    });

    return transcription.text;
  } catch (error) {
    console.error("Error transcribing audio:", error);
    return null;
  }
}

// Example Usage:
const audioFile = "path/to/your/audio.mp3"; // Replace with the path to your audio file

transcribeAudio(audioFile)
  .then(transcription => {
    if (transcription) {
      console.log("Transcription:", transcription);
    }
  });
```

OpenAI Speech-to-Text: Applications and Use Cases

The versatility of OpenAI Speech-to-Text makes it suitable for a wide variety of applications.

Transcription Services and Accessibility

One of the most straightforward applications is in transcription services. OpenAI Speech-to-Text can automatically transcribe audio files, saving time and effort compared to manual transcription. This is invaluable for creating transcripts of meetings, lectures, interviews, and other audio recordings. Furthermore, it greatly improves accessibility for individuals who are deaf or hard of hearing, by providing real-time or post-event captions for video and audio content. This enables broader audience reach and inclusivity.
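For captioning, timestamped segments need to be turned into a caption file. The sketch below assumes each segment is a dictionary with `start`, `end` (in seconds), and `text` keys, which mirrors the shape of timestamped transcription output; the function names are hypothetical. The API can also return SRT directly through its response format option, so this is mainly useful when you want to adjust segments first.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments) -> str:
    """Render segments with 'start', 'end', and 'text' keys as an SRT document."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        # Each SRT cue is: sequence number, time range, caption text.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}")
    return "\n\n".join(blocks) + "\n" if blocks else ""


example = [
    {"start": 0.0, "end": 2.5, "text": " Hello there. "},
    {"start": 2.5, "end": 4.0, "text": "Welcome."},
]
print(segments_to_srt(example))
```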

Dictation Software and Productivity Tools

OpenAI Speech-to-Text can also be integrated into dictation software, allowing users to input text using their voice. This can significantly improve productivity for writers, journalists, and anyone who frequently creates written content. Integrating it with note-taking apps enables hands-free note-taking.

Real-time Applications and Voice Assistants

The real-time capabilities of OpenAI Speech-to-Text make it well suited to voice assistants and interactive applications. It can be used to power voice-controlled interfaces, enabling users to interact with software and devices using their voice. Examples include voice-activated search, command execution, and automated customer service.

Limitations and Considerations of OpenAI Speech-to-Text

While OpenAI Speech-to-Text offers numerous benefits, it's important to be aware of its limitations.

Accuracy Limitations and Error Handling

Although Whisper is highly accurate, it is not perfect. Accuracy can be affected by factors such as background noise, accents, and speech impediments. Implement error handling so that failed requests and poor transcriptions are handled gracefully, and consider post-processing to correct common errors and improve overall accuracy. For details on API errors, refer to the OpenAI API documentation.
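One common error handling pattern is to retry a failed request with exponential backoff. This is a minimal sketch, assuming the transcription call is wrapped in a zero-argument function; `with_retries` is a hypothetical helper, and in real code you would likely catch only transient errors (timeouts, rate limits) rather than every exception.

```python
import time


def with_retries(func, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt and try again."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception as exc:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the last error to the caller.
            delay = base_delay * (2 ** attempt)
            print(f"Attempt {attempt + 1} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)
```

A call might look like `with_retries(lambda: transcribe_audio_or_raise(path))`, where `transcribe_audio_or_raise` stands for any function of yours that raises on failure. The `sleep` parameter exists so the waiting can be replaced in tests.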

Pricing and Cost Optimization Strategies

OpenAI Speech-to-Text is a paid service, and the cost depends on the amount of audio processed. Understanding the pricing model and applying cost optimization strategies is crucial for managing expenses. Because billing is based on audio duration, useful strategies include trimming silence and non-speech segments before upload, choosing a smaller model where one is available and its accuracy is sufficient, and chunking long audio files so that a failed request only requires reprocessing one segment. The current pricing is listed in OpenAI's API documentation.
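A duration-based price makes budgeting a matter of simple arithmetic. The sketch below assumes a flat per-minute rate with no rounding or minimum charge; both function names and `PLACEHOLDER_RATE` are hypothetical, and the rate is an illustrative number rather than OpenAI's actual price.

```python
def estimate_cost(duration_seconds: float, price_per_minute: float) -> float:
    """Estimate the charge for one file billed by audio duration."""
    if duration_seconds < 0 or price_per_minute < 0:
        raise ValueError("duration and price must be non-negative")
    return (duration_seconds / 60.0) * price_per_minute


def estimate_batch_cost(durations_seconds, price_per_minute: float) -> float:
    """Sum the estimated charge over several files."""
    return sum(estimate_cost(d, price_per_minute) for d in durations_seconds)


# PLACEHOLDER_RATE is an illustrative value, not a real price; check the pricing page.
PLACEHOLDER_RATE = 0.01
print(f"{estimate_batch_cost([600, 1800], PLACEHOLDER_RATE):.2f}")  # prints 0.40
```

Running the same estimate before and after trimming silence gives a quick view of how much a preprocessing step would save.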

Ethical Considerations and Data Privacy

When using OpenAI Speech-to-Text, it's important to consider ethical implications and data privacy. Ensure that you have the necessary permissions to record and transcribe audio data. Implement appropriate security measures to protect sensitive information. Be transparent with users about how their audio data is being used. Adhering to privacy regulations is crucial.

OpenAI Speech-to-Text vs. Competitors

OpenAI Speech-to-Text is not the only speech-to-text service available. Let's compare it to one of its main competitors, Google Cloud Speech-to-Text.

Comparing OpenAI Whisper to Google Cloud Speech-to-Text

Both OpenAI Whisper and Google Cloud Speech-to-Text offer robust speech recognition capabilities. However, they differ in certain aspects. Whisper stands out with its multilingual support and impressive accuracy, especially in challenging audio environments. Google Cloud Speech-to-Text offers extensive customization options and integration with other Google Cloud services. The choice between the two depends on the specific requirements of your application. Both are among the best speech-to-text API providers.

Benchmarking Performance and Feature Comparison

| Feature | OpenAI Whisper | Google Cloud Speech-to-Text |
|---|---|---|
| Accuracy | High, particularly in noisy environments | High, with customization options |
| Multilingual | Excellent multilingual support | Good multilingual support |
| Customization | Limited customization options | Extensive customization options |
| Integration | OpenAI API | Google Cloud Platform integration |
| Pricing | Pay-per-use, based on audio duration | Pay-per-use, with free tier |
| Real-time | Yes | Yes |
| Offline | Yes | Yes |
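To benchmark accuracy on your own audio rather than rely on qualitative labels, a common metric is word error rate (WER): the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. The following is a minimal sketch with a hypothetical function name; it lowercases and splits on whitespace only, so punctuation handling is left to the caller.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)
```

Transcribing the same recordings with each service and comparing WER against a hand-checked reference gives a like-for-like comparison on the audio that matters to your application.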

Advanced Techniques and Best Practices

To maximize the performance of OpenAI Speech-to-Text, consider the following techniques.

Optimizing Audio for Better Transcription Results

Ensure that audio input is of high quality by reducing background noise and optimizing recording levels. Use appropriate microphones and recording environments. Preprocessing audio using noise reduction algorithms can significantly improve transcription accuracy.
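Recording level is one of the easier things to correct in software. As a rough illustration, peak normalization scales a signal so its loudest sample reaches a chosen level. The sketch assumes samples are floats in the range -1.0 to 1.0 that have already been decoded from the audio file; `peak_normalize` is a hypothetical helper, and real pipelines would usually rely on an audio library.

```python
def peak_normalize(samples, target_peak=0.9):
    """Scale float samples so the loudest one has magnitude target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # Silence or empty input: nothing to scale.
    gain = target_peak / peak
    return [s * gain for s in samples]
```

Normalization raises quiet recordings to a consistent level, but it amplifies background noise by the same factor, so it complements noise reduction rather than replacing it.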

Handling Long Audio Files and Chunks

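For long audio files, break them into smaller chunks to improve processing efficiency and reduce the risk of errors. Use appropriate chunking strategies to maintain context and coherence, for example by splitting at pauses rather than mid-sentence or by overlapping adjacent chunks slightly. Chunking is also necessary because the OpenAI Whisper API limits the size of an uploaded audio file (25 MB per request at the time of writing).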
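One simple chunking strategy is fixed-length windows with a small overlap, so that words cut at a boundary appear whole in at least one chunk. The sketch below only computes the time windows; cutting the audio itself is left to whatever audio tool you use. The function name and the default lengths are assumptions chosen for illustration.

```python
def chunk_boundaries(total_seconds, chunk_seconds=600.0, overlap_seconds=5.0):
    """Return (start, end) windows covering the audio, each overlapping the previous one."""
    if chunk_seconds <= 0:
        raise ValueError("chunk_seconds must be positive")
    if not 0 <= overlap_seconds < chunk_seconds:
        raise ValueError("overlap_seconds must be in [0, chunk_seconds)")
    boundaries = []
    start = 0.0
    step = chunk_seconds - overlap_seconds  # How far each window advances.
    while start < total_seconds:
        end = min(start + chunk_seconds, total_seconds)
        boundaries.append((start, end))
        if end >= total_seconds:
            break  # The last window reached the end of the audio.
        start += step
    return boundaries


print(chunk_boundaries(25, chunk_seconds=10, overlap_seconds=2))
```

When merging the transcripts, the overlapping region will be transcribed twice, so the duplicated words at each boundary need to be reconciled.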

Improving Accuracy Through Preprocessing and Postprocessing

Implement preprocessing techniques such as noise reduction and audio normalization to improve audio quality before transcription. Use postprocessing techniques such as spell checking and grammar correction to refine transcription results afterwards.
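On the postprocessing side, a small cleanup pass can fix recurring mistakes such as misheard product names. This is a minimal sketch, assuming you maintain your own dictionary of known corrections; `clean_transcript` is a hypothetical helper and does not replace a real spell or grammar checker.

```python
import re


def clean_transcript(text: str, corrections=None) -> str:
    """Collapse whitespace, apply known corrections, and tidy punctuation spacing."""
    cleaned = re.sub(r"\s+", " ", text).strip()
    for wrong, right in (corrections or {}).items():
        # \b keeps the replacement from firing inside longer words.
        pattern = rf"\b{re.escape(wrong)}\b"
        cleaned = re.sub(pattern, lambda _m, r=right: r, cleaned, flags=re.IGNORECASE)
    # Remove stray spaces before punctuation: "hello , world" -> "hello, world".
    cleaned = re.sub(r"\s+([,.;:!?])", r"\1", cleaned)
    return cleaned


raw = "  open a i   released whisper , a speech model ."
print(clean_transcript(raw, {"open a i": "OpenAI", "whisper": "Whisper"}))
# prints OpenAI released Whisper, a speech model.
```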

Conclusion: The Future of OpenAI Speech-to-Text

OpenAI Speech-to-Text, powered by the Whisper model, is transforming the landscape of speech recognition. Its accuracy, multilingual capabilities, and ease of use make it a valuable tool for developers across various industries. As the technology continues to evolve, we can expect further advances in accuracy, efficiency, and functionality.

Resources:

- OpenAI Whisper GitHub Repository: Learn more about the Whisper model's architecture and code.

- OpenAI API Documentation: Explore the OpenAI API documentation for detailed information on using the speech-to-text functionality.

- OpenAI Blog: Stay updated on the latest advancements and news from OpenAI.

