Introduction to Conversational AI APIs

Conversational AI APIs are revolutionizing how we interact with digital platforms. At their core, these APIs provide programmatic access to advanced artificial intelligence models capable of understanding and generating human-like conversations. They power modern chatbots, virtual assistants, and even 3D AI characters across websites, mobile apps, and games.

Their significance extends beyond simple text chat: they enable real-time, context-aware communication that makes digital experiences more engaging and efficient. Developers can integrate these capabilities quickly in customer support, gaming, education, and other domains. As GPT-4 and other large language models have become more accessible, adoption of conversational AI APIs has grown rapidly across industries.

From powering interactive NPCs in gaming to automating website chat support, conversational AI APIs are at the heart of today’s intelligent digital interfaces.

What is a Conversational AI API?

A conversational AI API is a cloud-based interface that allows developers to embed AI-powered conversational abilities into their software. The core features typically include:

- Text Understanding and Generation: The API processes user inputs, infers intent, and generates human-like responses.

- Voice Capabilities: Many APIs support speech-to-text and text-to-speech, enabling natural voice interactions.

- Spatial Triggers and Multimodal Inputs: Advanced APIs can handle spatial contexts (e.g., in AR/VR), images, or even actions within a 3D environment.

Unlike traditional rule-based chatbots, conversational AI APIs harness large language models and neural networks. This enables:

- Contextual understanding, not just keyword matching

- Dynamic, open-ended conversations

- Adaptation to user intent and tone

For example, integrating a conversational AI API into your app can turn a simple chatbot into a virtual agent capable of nuanced, multi-turn dialogue. Providers such as OpenAI (GPT-4) and Convai expose large language model endpoints that developers can call to build such systems.
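To make multi-turn dialogue concrete, here is a minimal sketch of how a client might keep conversation history so that each request carries the earlier context. It assumes a chat-style API that accepts a list of role/content messages (the format used in the Python snippet later in this article). The `Conversation` class, its method names, and the `max_turns` cap are hypothetical, and no network call is made.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical helper that accumulates history for a chat-style API."""

    system_prompt: str
    max_turns: int = 10  # assumed cap on how many recent exchanges are sent
    messages: list = field(default_factory=list)

    def add_user(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})

    def build_payload(self, model: str = "gpt-4") -> dict:
        # Keep the newest max_turns * 2 messages (one user + one assistant per turn).
        recent = self.messages[-self.max_turns * 2:]
        system = {"role": "system", "content": self.system_prompt}
        return {"model": model, "messages": [system, *recent]}


chat = Conversation(system_prompt="You are a helpful support agent.")
chat.add_user("My order hasn't arrived.")
chat.add_assistant("Sorry to hear that. What is your order number?")
chat.add_user("It's 1234.")
payload = chat.build_payload()
print(len(payload["messages"]))  # 4: system prompt plus three history messages
```

Trimming old turns is one simple way to stay within a model's context limit; summarizing older messages is another option, depending on the provider.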

Key Benefits of Using a Conversational AI API

Leveraging a conversational AI API brings transformative benefits to developers and businesses:

- Real-Time AI Communication: APIs typically respond quickly enough for natural, real-time interaction across platforms, though actual latency depends on the model, the length of the response, and network conditions.

- Scalability and Flexibility: Cloud-based APIs scale with your user base, so you can serve large volumes of concurrent conversations without running model infrastructure yourself, subject to the provider's rate limits.

- Platform-Agnostic Embedding: Easily embed AI-powered chat, voice, or 3D AI character capabilities in websites, mobile apps, or even game engines like Unity and Unreal.

- Enhanced Engagement: Real-time AI communication fosters deeper engagement, whether in games (NPCs, interactive storylines) or on websites (virtual shopping assistants).

- Continuous Improvement: Leading APIs frequently update underlying models, giving you access to the latest advancements in AI with minimal effort.

By adopting a conversational AI API, you open the door to features like spatial intelligence and lifelike 3D AI characters, making your user experiences more interactive and memorable.

Popular Conversational AI API Providers

The conversational AI landscape is rich with providers, each offering unique features and pricing models. Here’s a look at some of the leading options:

- OpenAI (ChatGPT, GPT-4 API): Industry leader with robust large language models, supporting both text and voice. Rich API documentation and strong developer support.

- Convai: Specializes in 3D AI characters and spatial intelligence, well suited to gaming and immersive experiences.

- ConvoAI: Focuses on real-time, low-latency conversational AI for websites and apps, with customizable endpoints.

- Google Dialogflow: Popular for voice and text chatbots with strong NLU (Natural Language Understanding).

- Microsoft Azure Bot Service: Enterprise-grade, integrates with Azure infrastructure and supports omnichannel deployment.

- Rasa: Open-source option for custom conversational AI deployments, on-premises or in the cloud.

Comparison Table

| Provider | Key Strength | Multimodal | 3D AI/Spatial | Pricing | OpenAI Alternative |
|---|---|---|---|---|---|
| OpenAI GPT-4 | Large language model | Text/Voice | No | Usage-based | - |
| Convai | 3D AI, gaming | Yes | Yes | Freemium | Yes |
| ConvoAI | Real-time, websites | Text/Voice | No | Credits | Yes |
| Dialogflow | NLU, integrations | Yes | No | Usage-based | Yes |
| Rasa | Open-source/custom | Text | No | Free | Yes |

When selecting a provider, compare model access, documentation, uptime guarantees, and pricing to find the best fit for your use case.

How Conversational AI APIs Work

Technical Architecture Overview

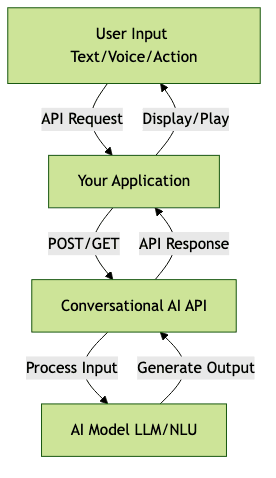

Conversational AI APIs are typically delivered as cloud-based RESTful services. Here’s a high-level architecture:

API Endpoints

Most APIs provide endpoints for:

- Input: Send text, audio, or other data.

- Output: Receive generated response, audio stream, or action commands.

- Additional: Some support vision (image input), or spatial triggers for 3D environments.

Authentication and Security

APIs require authentication—typically via API keys, OAuth tokens, or JWTs. Secure communication (HTTPS) and rate limiting protect both user data and infrastructure. Providers often support fine-grained permissions and audit logs.
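As a rough illustration of the two ideas above, the sketch below builds bearer-token headers and retries a request with exponential backoff when the server answers with HTTP 429 (Too Many Requests). The function names `auth_headers` and `call_with_backoff`, the retry counts, and the delays are assumptions chosen for the example, not any provider's recommended values. The `send` callable stands in for a real HTTP call so the retry logic can be shown without a network.

```python
import time
from typing import Callable, Tuple


def auth_headers(api_key: str) -> dict:
    """Build bearer-token headers; refuses an empty key."""
    if not api_key:
        raise ValueError("API key is missing")
    return {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}


def call_with_backoff(
    send: Callable[[], Tuple[int, dict]],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call send(); on HTTP 429, wait base_delay * 2**attempt and try again."""
    for attempt in range(max_attempts):
        status, body = send()
        if status == 429 and attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)
            continue
        if status >= 400:
            raise RuntimeError(f"request failed with HTTP {status}")
        return body
    raise ValueError("max_attempts must be at least 1")


# Demo with a stand-in transport that is rate-limited twice, then succeeds.
responses = iter([(429, {}), (429, {}), (200, {"reply": "Hello!"})])
delays = []
result = call_with_backoff(lambda: next(responses), sleep=delays.append)
print(result, delays)  # {'reply': 'Hello!'} [0.5, 1.0]
```

In a real integration, `send` would wrap the HTTP request and return its status code and parsed body. Some providers also return a header indicating how long to wait, which is worth checking in their documentation.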

Code Snippet: Simple API Integration (Python)

```python
import os

import requests

# Chat Completions endpoint
api_url = "https://api.openai.com/v1/chat/completions"

# Read the key from an environment variable instead of hard-coding it.
headers = {
    "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
    "Content-Type": "application/json",
}
payload = {
    "model": "gpt-4",
    "messages": [{"role": "user", "content": "Hello!"}],
}

response = requests.post(api_url, headers=headers, json=payload, timeout=30)
response.raise_for_status()  # raise an exception on 4xx/5xx responses
print(response.json())
```

This example sends a text message to a conversational AI API and prints the JSON response.
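The printed JSON contains more than the reply text. Below is a minimal sketch of pulling out the assistant's message, assuming the response follows the Chat Completions shape (`choices[0].message.content`) and that errors arrive as an `error` object. The `extract_reply` helper is hypothetical, and the sample dictionary is hand-written for illustration rather than real API output.

```python
def extract_reply(response_json: dict) -> str:
    """Return the first choice's message text, or raise a clear error."""
    if "error" in response_json:
        # Assumed error shape: {"error": {"message": "..."}}
        message = response_json["error"].get("message", "unknown error")
        raise RuntimeError(f"API error: {message}")
    choices = response_json.get("choices") or []
    if not choices:
        raise ValueError("response contained no choices")
    return choices[0]["message"]["content"]


# Hand-written sample in the assumed response shape.
sample = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Hi there!"},
            "finish_reason": "stop",
        }
    ]
}
print(extract_reply(sample))  # Hi there!
```

Other providers structure their responses differently, so the lookup path should be adapted to the documentation of the API in use.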

Implementation Guide: Building with a Conversational AI API

Step-by-Step Setup

- Register for an Account: Sign up on the provider’s platform.

- Generate API Keys: Obtain secure credentials for authentication.

- Review API Documentation: Explore endpoints, parameters, and SDKs.

- Test Endpoints: Use curl, Postman, or SDKs to make sample requests.

- Integrate with Your App: Embed API calls in your codebase.

Embedding in Different Platforms

- Web: Use JavaScript/TypeScript SDKs or direct HTTP calls to integrate chatbots or voice agents.

- Mobile: Leverage native SDKs (iOS, Android) for chat, voice, or AR/VR agents.

- Game Engines: Convai and similar APIs provide Unity/Unreal plugins for integrating AI-driven NPCs and 3D characters.

Sample Use Cases

- Customer Support Chatbots: Automate help desks and FAQs.

- Virtual Assistants: Schedule meetings, answer queries.

- In-Game NPCs: Create lifelike, interactive characters with real-time dialogue.

Code Snippet: Sending a Message to the API (JavaScript)

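```javascript
// Node.js 18+ provides fetch globally. On older versions, install node-fetch v2
// and add: const fetch = require("node-fetch");  (v3 is ESM-only)

// Endpoint and payload fields as given in this article; check the provider's docs.
const apiUrl = "https://api.convai.com/v1/message";
const headers = {
  "Authorization": "Bearer YOUR_API_KEY",
  "Content-Type": "application/json"
};
const payload = {
  "session_id": "SESSION123",
  "message": "How can I help you today?"
};

fetch(apiUrl, {
  method: "POST",
  headers: headers,
  body: JSON.stringify(payload)
})
  .then(res => {
    // fetch does not reject on HTTP errors, so check the status explicitly.
    if (!res.ok) throw new Error(`HTTP ${res.status}`);
    return res.json();
  })
  .then(data => console.log(data))
  .catch(err => console.error(err));
```

This demonstrates a basic message exchange with a conversational AI API provider.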

Pricing and Support Considerations

Conversational AI API pricing varies by provider and usage. Common models include:

- Subscription: Monthly/annual plans with volume tiers.

- Pay-as-you-go/Credits: Charges per message, character, or API call.

- Freemium: Limited free tier, paid upgrades for scale.

Review each provider's pricing details to avoid unexpected costs. Leading platforms offer extensive developer support, including thorough API documentation, sample code, and active communities where developers can share tips and troubleshoot issues.
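A quick back-of-the-envelope calculation can help compare these models before committing. The sketch below estimates a monthly bill under per-message pricing with an optional free tier. The function `estimate_monthly_cost` and every number in the example (traffic, price, free allowance) are made up for illustration and do not reflect any provider's actual rates.

```python
def estimate_monthly_cost(
    conversations_per_day: int,
    messages_per_conversation: int,
    price_per_message: float,
    free_messages_per_month: int = 0,
    days: int = 30,
) -> float:
    """Estimate a monthly bill for per-message pricing with a free allowance."""
    total_messages = conversations_per_day * messages_per_conversation * days
    billable = max(0, total_messages - free_messages_per_month)
    return round(billable * price_per_message, 2)


# Hypothetical workload: 500 conversations a day, 6 messages each,
# $0.002 per message, first 10,000 messages free.
cost = estimate_monthly_cost(500, 6, 0.002, free_messages_per_month=10_000)
print(cost)  # 160.0  (90,000 messages, 80,000 billable)
```

Token- or character-based pricing follows the same pattern with a different unit, which is why it helps to measure typical message sizes before estimating.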

Best Practices for Deploying Conversational AI APIs

- Prioritize Security & Privacy: Always use HTTPS, store API keys securely, and comply with data protection regulations.

- Optimize Performance: Cache responses where possible, handle timeouts, and monitor latency to ensure a smooth user experience.

- Focus on UX: Design clear fallback flows for edge cases. Test conversation logic with real users to refine the AI's responses and improve engagement.
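The caching and fallback advice above can be sketched as a thin wrapper around whatever function actually calls the API. Everything here is an assumption for illustration: the `CachedResponder` class, the fallback wording, and the `fake_backend` stand-in that simulates a timeout. A real client would catch its HTTP library's own timeout exception rather than the built-in `TimeoutError`.

```python
from typing import Callable, Dict

FALLBACK_REPLY = "Sorry, I'm having trouble right now. Please try again shortly."


class CachedResponder:
    """Hypothetical wrapper that caches replies and falls back on failure."""

    def __init__(self, backend: Callable[[str], str]):
        self.backend = backend
        self.cache: Dict[str, str] = {}

    def reply(self, message: str) -> str:
        key = " ".join(message.lower().split())  # normalize case and whitespace
        if key in self.cache:
            return self.cache[key]
        try:
            answer = self.backend(message)
        except (TimeoutError, ConnectionError):
            # Failures are not cached, so the next attempt can still succeed.
            return FALLBACK_REPLY
        self.cache[key] = answer
        return answer


calls = []


def fake_backend(message: str) -> str:
    """Stand-in for a real API call; 'slow' messages simulate a timeout."""
    calls.append(message)
    if "slow" in message:
        raise TimeoutError("simulated timeout")
    return f"Answer to: {message}"


bot = CachedResponder(fake_backend)
first = bot.reply("What are your hours?")
second = bot.reply("what are  your hours?")  # served from the cache
third = bot.reply("slow question")           # falls back
print(first == second, third == FALLBACK_REPLY, len(calls))  # True True 2
```

Caching by message text only suits replies that do not depend on earlier context, such as FAQ-style answers. Context-dependent conversations would need the history included in the cache key, or no caching at all.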

Future Trends in Conversational AI APIs

The future of conversational AI APIs is being shaped by:

- Spatial Intelligence & 3D Characters: APIs like Convai are pushing the envelope, enabling AI agents that interact contextually within 3D spaces and games.

- Multimodal & Personalized Interactions: Expect APIs to support more modalities (voice, vision, emotion) and greater personalization, adapting conversations to individual users and contexts.

With advancements in large language models, spatial AI, and multimodal fusion, the next generation of conversational AI APIs will power smarter, more immersive digital experiences.

Conclusion

Conversational AI APIs enable developers to rapidly build intelligent, interactive agents for any digital platform. Explore the providers and best practices above to unlock new possibilities in user engagement and automation.

FAQ