What is WebRTC?

WebRTC, short for Web Real-Time Communication, has changed how developers approach communication over the web and mobile devices. It is an open-source project that lets web browsers and mobile applications communicate in real time through simple APIs. Peers can exchange video, audio, and arbitrary data, which makes it a practical tool for developers adding real-time communication features. Originally designed for browser-to-browser voice calling, video chat, and P2P file sharing without plugins, WebRTC also extends to native apps, including those on iOS.

WebRTC's Components

The technology is built on several components, with the most critical being:

- MediaStream (getUserMedia): Captures audio and video media.

- RTCPeerConnection: Enables audio or video data transfer with control over the connection's fine details.

- RTCDataChannel: Allows bidirectional data transfer between peers.

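These components work together to enable direct, peer-to-peer communication. Once a connection is established, media and data flow between the peers without passing through an application server wherever the network allows, which minimizes delay and bandwidth usage.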

Why WebRTC on iOS?

For iOS developers, WebRTC offers compelling advantages:

- Cross-platform compatibility: WebRTC is supported across all major browsers and platforms, including iOS, which allows developers to implement a consistent communication experience regardless of the user's device.

- Real-time performance: With minimal latency, WebRTC is ideal for real-time applications like video conferencing, live streaming, and gaming.

- Encryption and security: WebRTC inherently supports secure communication channels, using protocols such as DTLS (Datagram Transport Layer Security) and SRTP (Secure Real-time Transport Protocol) to protect data integrity and privacy.

Supported iOS Platforms

Safari on iOS supports WebRTC, and native iOS apps can embed the WebRTC library as a framework. Together, these let developers integrate real-time communication features directly into iOS applications, extending the functionality to mobile devices.

The next section will guide you through setting up your development environment to start building WebRTC applications on iOS. Stay tuned as we dive into the specifics of configuring Xcode, handling dependencies, and preparing your device for development.

Setting up the Development Environment for WebRTC on iOS

Before diving into the code, it's crucial to set up a proper development environment. This setup will ensure that you have all the necessary tools and frameworks to build a WebRTC application on iOS. Here’s a step-by-step guide to get you started.

Let's Start Building an iOS WebRTC Application with Swift

Prerequisites

To begin development, you'll need the following:

- Xcode: The latest version of Xcode, which includes all the necessary compilers and tools. It's available for free on the Mac App Store.

- iOS SDK: Included with Xcode and updated regularly, it provides the necessary APIs to interact with iOS hardware and software.

- Node.js and npm: Needed if you run your signaling server in JavaScript, a common choice for WebRTC signaling. Download them from nodejs.org.

Setting up WebRTC Libraries

WebRTC is not included by default in iOS development tools, so you'll need to integrate it manually. There are two main methods to do this: using CocoaPods or manually integrating the frameworks.

Using CocoaPods:

CocoaPods is a dependency manager for Swift and Objective-C Cocoa projects. It has thousands of libraries and can help you scale your projects elegantly. Here’s how to set it up:

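- Install CocoaPods if you haven't already: Open Terminal and run `sudo gem install cocoapods`.

- Navigate to your project directory in Terminal and run `pod init` to create a Podfile.

- Open the Podfile and add `pod 'WebRTC'` under your target. This tells CocoaPods which libraries to install. Pod names differ between WebRTC distributions, so check the exact name of the one you choose.

- Save the file and run `pod install` to integrate WebRTC into your project. From then on, open the generated .xcworkspace file rather than the .xcodeproj.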

Manually Integrating WebRTC Frameworks:

If you prefer not to use CocoaPods, you can manually integrate the WebRTC frameworks into your project.

- Download the latest WebRTC frameworks from WebRTC.org.

- Drag and drop the WebRTC frameworks into your Xcode project.

- Ensure that the frameworks are correctly linked in your project settings under "General" and "Frameworks, Libraries, and Embedded Content".

Configuring App Permissions

WebRTC needs permission to use the camera and microphone. You must configure these in your app's Info.plist:

Add NSCameraUsageDescription and NSMicrophoneUsageDescription keys with strings describing why the app needs these permissions.

Testing the Environment

After setting up, create a simple test to verify that everything is working:

- Build a basic UI with a button to start a WebRTC session.

- Use logging to confirm that the app can access the camera and microphone.
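The logging step can be sketched with AVFoundation alone, before any WebRTC code is involved. This is a minimal sketch rather than a required pattern: the helper names `describe(_:)` and `logCaptureAuthorization()` are hypothetical, and it assumes the two usage-description keys are already present in Info.plist.

```swift
import AVFoundation

/// Human-readable label for an authorization status, used in log output.
func describe(_ status: AVAuthorizationStatus) -> String {
    switch status {
    case .authorized: return "authorized"
    case .denied: return "denied"
    case .restricted: return "restricted"
    case .notDetermined: return "not determined"
    @unknown default: return "unknown"
    }
}

/// Logs the current camera and microphone status and prompts if the user has not decided yet.
func logCaptureAuthorization() {
    for mediaType in [AVMediaType.video, AVMediaType.audio] {
        let status = AVCaptureDevice.authorizationStatus(for: mediaType)
        print("\(mediaType.rawValue): \(describe(status))")
        if status == .notDetermined {
            // Triggers the system prompt, which shows the Info.plist usage string.
            AVCaptureDevice.requestAccess(for: mediaType) { granted in
                print("\(mediaType.rawValue) access granted: \(granted)")
            }
        }
    }
}
```

Calling `logCaptureAuthorization()` from the test button's action is one way to confirm the permission setup before starting a session.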

This setup is the foundation of your WebRTC application on iOS. With the environment ready, you can start building more complex features and dive into real-time communication functionalities.

The next section will guide you through creating a simple WebRTC application, from handling peer connections to streaming video and audio.

Initializing the WebRTC Components

First, you'll need to initialize the primary WebRTC components within your iOS application.

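RTCPeerConnection: This is the heart of WebRTC and manages the connection between your device and the remote peer.

```swift
import WebRTC

// Create the factory once and reuse it for connections, sources, and tracks.
let peerConnectionFactory = RTCPeerConnectionFactory()
var peerConnection: RTCPeerConnection?

let configuration = RTCConfiguration()
configuration.iceServers = [RTCIceServer(urlStrings: ["stun:stun.l.google.com:19302"])]
configuration.sdpSemantics = .unifiedPlan
let constraints = RTCMediaConstraints(mandatoryConstraints: nil, optionalConstraints: nil)
// Pass an RTCPeerConnectionDelegate instead of nil to receive ICE candidates and remote tracks.
peerConnection = peerConnectionFactory.peerConnection(with: configuration,
                                                      constraints: constraints,
                                                      delegate: nil)
```

MediaStream (getUserMedia): This API captures audio and video media. You'll need to request access to the device's camera and microphone, then create media tracks based on this access. In the native iOS SDK, this means creating a video source, a camera capturer, and a track from the peer connection factory.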

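```swift
// Keep a strong reference to the capturer so capture is not torn down early.
var videoCapturer: RTCCameraVideoCapturer?

func startCaptureLocalMedia() {
    let videoSource = peerConnectionFactory.videoSource()
    let capturer = RTCCameraVideoCapturer(delegate: videoSource)
    let localVideoTrack = peerConnectionFactory.videoTrack(with: videoSource, trackId: "ARDAMSv0")
    // Add the track directly (Unified Plan); "ARDAMS" is the stream ID.
    peerConnection?.add(localVideoTrack, streamIds: ["ARDAMS"])
    // Pick the front camera and one of its formats; 30 fps is an example value.
    guard let camera = RTCCameraVideoCapturer.captureDevices().first(where: { $0.position == .front }),
          let format = RTCCameraVideoCapturer.supportedFormats(for: camera).last else { return }
    capturer.startCapture(with: camera, format: format, fps: 30)
    videoCapturer = capturer
}
```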

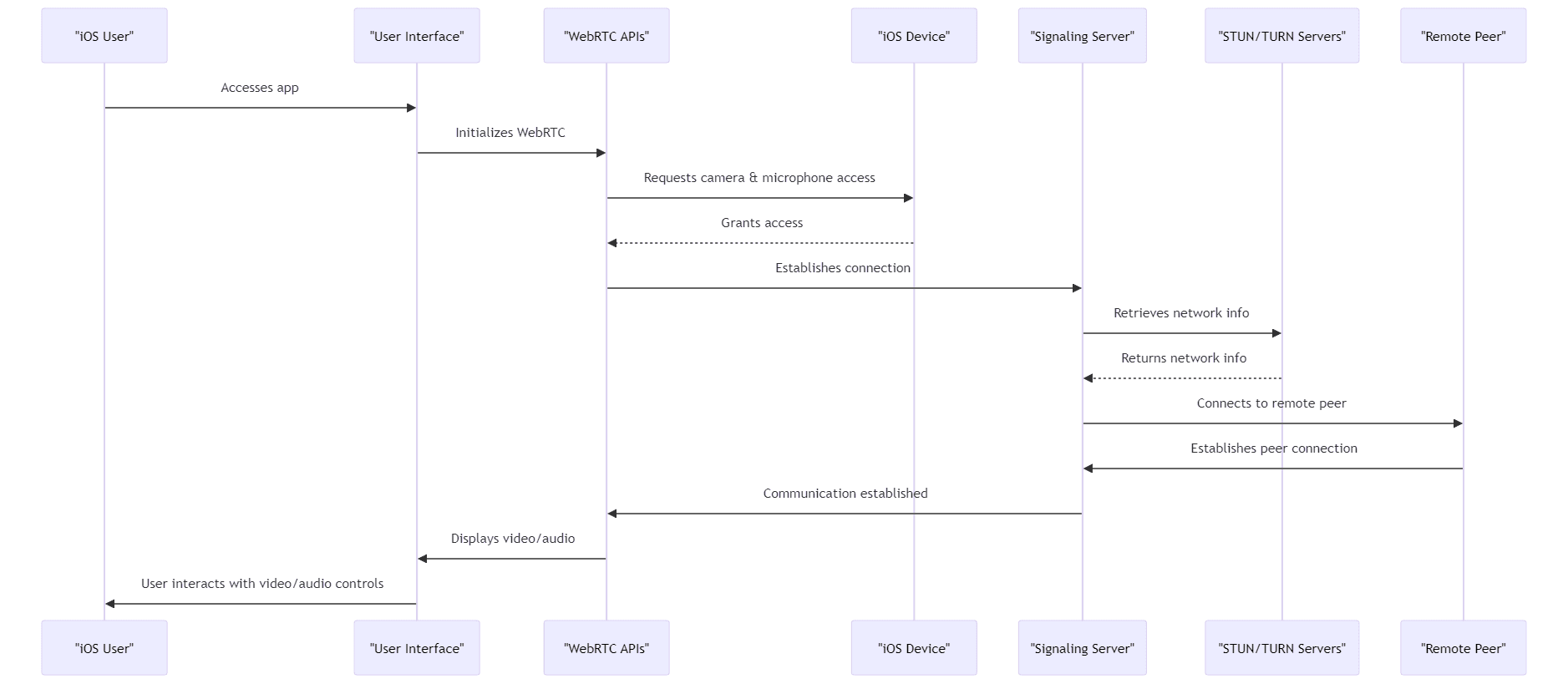

Establishing a Connection

To establish a connection, you must configure signaling to exchange connection data between peers.

Signaling: Before peers can connect directly, they must exchange information like IP addresses and media metadata through a signaling process. This can be done using a simple WebSocket server or any server-side technology that can handle HTTP requests.

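```swift
import Starscream

var socket: WebSocket?  // Keep a strong reference, or the socket is released when the function returns.

func signalingSetup() {
    let request = URLRequest(url: URL(string: "wss://your-signaling-server-url")!)
    let webSocket = WebSocket(request: request)
    webSocket.onEvent = { event in
        guard case .text(let text) = event else { return }
        print("Signaling message: \(text)")  // Handle incoming signaling messages here
    }
    webSocket.connect()
    socket = webSocket
}
```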
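WebRTC leaves the format of signaling messages up to the application. As a minimal sketch, the exchange could use one JSON object per WebSocket text frame. The `SignalingMessage` type, its field names, and the two helper functions below are hypothetical and not part of any WebRTC API; any format both peers agree on works equally well.

```swift
import Foundation

/// Hypothetical wire format: one JSON object per WebSocket text frame.
struct SignalingMessage: Codable, Equatable {
    enum Kind: String, Codable {
        case offer, answer, candidate
    }

    let kind: Kind
    var sdp: String? = nil            // Session description, set for offers and answers
    var candidate: String? = nil      // ICE candidate line, set for candidates
    var sdpMLineIndex: Int32? = nil   // Media line index of the candidate
    var sdpMid: String? = nil         // Media stream identification tag of the candidate
}

/// Serializes a message to the JSON text sent over the signaling socket.
func encodeSignalingMessage(_ message: SignalingMessage) throws -> String {
    let data = try JSONEncoder().encode(message)
    return String(decoding: data, as: UTF8.self)
}

/// Parses JSON text received from the signaling socket.
func decodeSignalingMessage(_ text: String) throws -> SignalingMessage {
    return try JSONDecoder().decode(SignalingMessage.self, from: Data(text.utf8))
}

// Example: build the text for an offer (the SDP string here is a placeholder).
if let text = try? encodeSignalingMessage(SignalingMessage(kind: .offer, sdp: "v=0 ...")) {
    print(text)
}
```

Keeping the message type independent of the WebRTC classes makes the signaling layer easy to unit test without a live peer connection.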

Handling Offer and Answer

The connection is established through an offer and answer model, where one peer sends an offer to start a connection, and the other sends an answer in response.

Creating an Offer:

```swift
let offerConstraints = RTCMediaConstraints(mandatoryConstraints: nil, optionalConstraints: nil)
peerConnection?.offer(for: offerConstraints) { [weak self] sdp, error in
    guard let sdp = sdp else {
        print("Error creating offer: \(String(describing: error))")
        return
    }
    self?.peerConnection?.setLocalDescription(sdp) { error in
        if let error = error {
            print("Error setting local description: \(error)")
            return
        }
        // Send the offer (sdp.sdp) to the remote peer via your signaling channel
    }
}
```

Receiving an Answer:

```swift
func handleRemoteDescriptionReceived(_ sdp: RTCSessionDescription) {
    peerConnection?.setRemoteDescription(sdp) { error in
        if let error = error {
            print("Error setting remote description: \(error)")
        }
    }
}
```
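The order of these calls matters: an answer is only valid after an offer has been applied. The sketch below is a simplified model of that offer/answer sequence, not the SDK's own state type. `NegotiationState`, `NegotiationEvent`, and `nextState(from:on:)` are hypothetical names, and the model leaves out provisional answers, rollback, and the closed state.

```swift
/// Simplified negotiation states for one peer.
enum NegotiationState {
    case stable           // No offer/answer exchange in progress
    case haveLocalOffer   // We applied our own offer and await the remote answer
    case haveRemoteOffer  // We applied the remote offer and must create an answer
}

/// The description being applied to the peer connection.
enum NegotiationEvent {
    case setLocalOffer, setRemoteOffer, setLocalAnswer, setRemoteAnswer
}

/// Returns the state after `event`, or nil if the event is invalid in `state`.
func nextState(from state: NegotiationState, on event: NegotiationEvent) -> NegotiationState? {
    switch (state, event) {
    case (.stable, .setLocalOffer): return .haveLocalOffer       // Caller creates an offer
    case (.stable, .setRemoteOffer): return .haveRemoteOffer     // Callee receives the offer
    case (.haveLocalOffer, .setRemoteAnswer): return .stable     // Caller receives the answer
    case (.haveRemoteOffer, .setLocalAnswer): return .stable     // Callee creates the answer
    default: return nil
    }
}
```

A check like this in the signaling handler can reject out-of-order messages, such as an answer arriving when no offer is pending, before they reach the peer connection.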

Adding UI Components

Finally, integrate UI components to handle the interaction:

- Create buttons for "Call", "Hang up", and "Switch camera".

- Add video views to display local and remote video streams.

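```swift
func setupVideoViews() {
    // localFrame and remoteFrame are CGRects supplied by your layout code.
    // RTCMTLVideoView is the Metal-based alternative to RTCEAGLVideoView.
    localVideoView = RTCEAGLVideoView(frame: localFrame)
    remoteVideoView = RTCEAGLVideoView(frame: remoteFrame)
    view.addSubview(localVideoView)
    view.addSubview(remoteVideoView)
    // Attach each video track to its view as a renderer so frames are drawn.
}
```

In the next section, we will delve into more advanced features and customization options to enhance your WebRTC application further.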

Advanced Features and Customization in WebRTC iOS

After establishing the basics of a WebRTC application for iOS, it's time to expand its functionality and enhance user experience with advanced features and customization options. This section will explore how to integrate additional capabilities such as screen sharing, data channels, and UI/UX enhancements that cater to more complex application requirements.

Implementing Screen Sharing on WebRTC iOS App

Screen sharing in an iOS app is a powerful feature in collaborative and educational applications, allowing users to share their screen contents with peers.

Using ReplayKit for Screen Capture:

ReplayKit by Apple provides an efficient way to capture screen data on iOS devices. You can capture the screen and stream it to other peers using WebRTC.

```swift
import ReplayKit

let screenRecorder = RPScreenRecorder.shared()
screenRecorder.startCapture { sampleBuffer, bufferType, error in
    // Called repeatedly with video and audio sample buffers
    guard error == nil, bufferType == .video else { return }
    // Handle the captured screen sampleBuffer
} completionHandler: { error in
    if let error = error {
        print("Screen capture error: \(error)")
    }
}
```

Transmitting the Screen Capture over WebRTC:

Once captured, the video data needs to be transmitted using WebRTC. This involves converting each video sample buffer into a WebRTC video frame (for example, by wrapping its pixel buffer in an RTCVideoFrame) and passing it to the video source that backs your outgoing track.

Utilizing Data Channels on WebRTC iOS

Data channels enable the transmission of arbitrary data such as text, files, or even binary data in real-time between peers.

Setting up a Data Channel:

Data channels are established alongside the media stream but are independent of it, allowing data exchange even in audio-only or video-only sessions.

```swift
let dataChannelConfig = RTCDataChannelConfiguration()
dataChannelConfig.isOrdered = true
let dataChannel = peerConnection?.dataChannel(forLabel: "myDataChannel", configuration: dataChannelConfig)
```

Handling Data Messages:

Sending and receiving data through the data channel involves handling events for open, message, and close states.

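```swift
// Send only after the channel reports the open state.
dataChannel?.sendData(RTCDataBuffer(data: myData, isBinary: true))
dataChannel?.delegate = self  // self conforms to RTCDataChannelDelegate

func dataChannelDidChangeState(_ dataChannel: RTCDataChannel) {
    print("Data channel state: \(dataChannel.readyState.rawValue)")
}
func dataChannel(_ dataChannel: RTCDataChannel, didReceiveMessageWith buffer: RTCDataBuffer) {
    // Process received data in buffer.data
}
```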
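Large payloads such as files are usually split into smaller messages before being sent over a data channel. The helper below is a minimal sketch of that step using only Foundation. The function name `chunks(of:chunkSize:)` is hypothetical, and the 16 KiB default is an assumption (a commonly cited conservative message size), not a limit taken from this article.

```swift
import Foundation

/// Splits `data` into consecutive chunks of at most `chunkSize` bytes.
func chunks(of data: Data, chunkSize: Int = 16 * 1024) -> [Data] {
    precondition(chunkSize > 0, "chunkSize must be positive")
    var result: [Data] = []
    var offset = data.startIndex
    while offset < data.endIndex {
        // The last chunk may be shorter than chunkSize.
        let end = min(offset + chunkSize, data.endIndex)
        result.append(data.subdata(in: offset..<end))
        offset = end
    }
    return result
}
```

Each chunk can then be wrapped in an RTCDataBuffer and sent in order. The receiver needs some way to know when the transfer is complete, for example a leading message that announces the total size.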

Enhancing UI/UX

A polished UI/UX is crucial for ensuring user engagement and satisfaction. Consider the following enhancements:

- Custom Video Layouts: Designing responsive and adaptive video layouts that adjust to various device orientations and screen sizes enhances the user experience.

- Interactive Controls: Adding controls such as mute, pause, and camera switch can significantly improve the usability of your application. Gesture recognizers can be used to add touch interactions like pinch to zoom or swipe to switch cameras.

- Feedback and Notifications: Providing visual and haptic feedback for actions like connection status or message receipt can make the app more intuitive and user-friendly.

Testing and Optimization

Finally, thorough testing and optimization are essential to ensure the reliability and efficiency of your application.

- Network Conditions Simulation: Test your application under various network conditions to ensure stable performance across different bandwidths and latencies.

- Performance Profiling: Use tools like Instruments in Xcode to identify and optimize performance bottlenecks, particularly in video processing and data transmission.

These enhancements not only improve the functionality but also ensure that the app remains competitive and relevant in the fast-evolving landscape of mobile applications.

Integrating Signaling and Handling NAT Traversal in WebRTC for iOS

For a WebRTC application to function optimally on iOS, it must effectively manage signaling and Network Address Translation (NAT) traversal. This section explores how to integrate signaling servers and utilize STUN and TURN servers to ensure seamless communication behind different types of network configurations.

Signaling in WebRTC

Signaling is the process by which two devices communicate preliminary information (like session control messages, network data, and media metadata) before establishing a peer-to-peer connection. WebRTC does not specify a particular signaling protocol or method, leaving developers free to implement signaling according to their application needs.

Implementing a Signaling Server:

Typically, WebRTC apps use WebSocket, XMPP, or SIP for signaling. For iOS, WebSocket is popular due to its simplicity and effectiveness in real-time communication scenarios.

```swift
import Starscream

var socket: WebSocket!

func setupWebSocket() {
    var request = URLRequest(url: URL(string: "wss://your-signaling-server")!)
    request.timeoutInterval = 5
    socket = WebSocket(request: request)
    socket.delegate = self
    socket.connect()
}

extension YourClass: WebSocketDelegate {
    // Some Starscream 4.x releases declare `client` as WebSocketClient; match your installed version.
    func didReceive(event: WebSocketEvent, client: WebSocket) {
        switch event {
        case .connected(let headers):
            print("websocket is connected: \(headers)")
        case .text(let string):
            print("Received text: \(string)")
        default:
            break
        }
    }
}
```

This code sets up a WebSocket connection and handles incoming messages. Outgoing messages can be sent with the socket's write(string:) method.

Handling NAT Traversal

NAT traversal is crucial for establishing connections between peers located behind different NATs. STUN and TURN servers play vital roles here:

STUN Server: A Session Traversal Utilities for NAT (STUN) server allows peers to discover the public IP address and port that their NAT assigns to them, which is crucial for peer-to-peer connections.

TURN Server: When STUN isn't enough, for example in symmetric NAT situations, a Traversal Using Relays around NAT (TURN) server relays data between peers.

```swift
let configuration = RTCConfiguration()
configuration.iceServers = [
    RTCIceServer(urlStrings: ["stun:stun.l.google.com:19302"]),
    RTCIceServer(urlStrings: ["turn:turn.anyfirewall.com:443?transport=tcp"],
                 username: "webrtc",
                 credential: "webrtc")
]
```

This configuration includes both STUN and TURN servers, ensuring that the peer connection can be established under various network conditions.
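In production, TURN credentials are often delivered by your own backend rather than hardcoded in the app. The sketch below assumes a hypothetical JSON response whose shape follows the urls, username, and credential fields of an ICE server entry. The `IceServerConfig` type, the `decodeIceServers(from:)` helper, and the example.com hosts are illustrative assumptions.

```swift
import Foundation

/// One ICE server entry as delivered by a (hypothetical) backend endpoint.
struct IceServerConfig: Codable, Equatable {
    let urls: [String]
    let username: String?    // Absent for STUN servers
    let credential: String?  // Absent for STUN servers
}

/// Decodes a JSON array of ICE server entries.
func decodeIceServers(from json: Data) throws -> [IceServerConfig] {
    return try JSONDecoder().decode([IceServerConfig].self, from: json)
}

// Example payload; the hosts and credentials are placeholders.
let exampleJSON = Data("""
[
  {"urls": ["stun:stun.example.com:3478"]},
  {"urls": ["turn:turn.example.com:3478"], "username": "user", "credential": "secret"}
]
""".utf8)

if let servers = try? decodeIceServers(from: exampleJSON) {
    print("Decoded \(servers.count) ICE servers")
}
```

Each decoded entry can then be mapped to an RTCIceServer with its urlStrings, username, and credential before being assigned to configuration.iceServers.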

Testing and Optimization

Testing Connectivity:

Tools like Trickle ICE can be used to test how ICE (Interactive Connectivity Establishment) gathers candidates across different network paths and configurations. This helps verify that your STUN/TURN setup is effective across diverse environments.

Continuous Monitoring and Adjustment:

Monitor the effectiveness of your signaling and NAT traversal setup, especially as your user base grows and different network topologies come into play. Adjust configurations as needed based on performance data.

In the next section, we will delve into testing strategies and best practices for optimizing WebRTC applications to handle real-world scenarios effectively.

Testing and Optimization of WebRTC Applications on iOS

To ensure the stability and efficiency of your WebRTC application on iOS, rigorous testing and careful optimization are crucial. This final section outlines strategies for testing WebRTC functionalities and optimizing the application to handle real-world usage effectively.

Testing WebRTC Implementations

Testing is a vital step in the development process, ensuring that your application performs well across different devices and network conditions.

- Unit Testing: Test individual components or functions, such as the signaling process or data channel operations.

- Integration Testing: Ensure that various components of the WebRTC implementation work together seamlessly.

- End-to-End Testing: Using tools like Appium or XCUITest, simulate user interactions with the app to test the complete flow of WebRTC operations from end to end.

- Network Simulation: Tools such as Network Link Conditioner on iOS allow you to simulate different network conditions, including 3G, Wi-Fi, and high-latency networks, to see how your app performs under various scenarios.

Performance Optimization

Performance optimization focuses on enhancing the real-time communication experience by minimizing latency, reducing bandwidth usage, and improving overall application responsiveness.

- Optimize Media Quality: Dynamically adjust the resolution and bitrate of media streams based on the current network conditions to balance media quality with bandwidth availability.

- Hardware Acceleration: Leverage the device's hardware capabilities for tasks like video encoding and decoding to reduce CPU usage and conserve battery life.

- Memory Management: Monitor and optimize memory usage to prevent leaks and ensure that the application runs smoothly, even during long-duration calls.
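As a rough sketch of the media quality point, the helper below picks a capture preset from an estimated bandwidth figure. Everything here is an assumption for illustration: the `VideoPreset` type, the three presets and their bitrates, and the 0.8 headroom factor are hypothetical values, and WebRTC already performs its own congestion control underneath.

```swift
/// A capture resolution paired with the maximum bitrate it is allowed to use.
struct VideoPreset: Equatable {
    let width: Int32
    let height: Int32
    let maxBitrateBps: Int
}

/// Illustrative presets, ordered from highest to lowest quality.
let videoPresets: [VideoPreset] = [
    VideoPreset(width: 1280, height: 720, maxBitrateBps: 1_500_000),
    VideoPreset(width: 640, height: 360, maxBitrateBps: 600_000),
    VideoPreset(width: 320, height: 180, maxBitrateBps: 200_000)
]

/// Picks the best preset whose bitrate fits within a fraction of the estimated bandwidth.
/// Falls back to the lowest preset when nothing fits.
func preset(forEstimatedBandwidthBps bandwidth: Int, headroom: Double = 0.8) -> VideoPreset {
    let budget = Int(Double(bandwidth) * headroom)
    return videoPresets.first(where: { $0.maxBitrateBps <= budget })
        ?? videoPresets[videoPresets.count - 1]
}
```

The bandwidth estimate could come from the connection's statistics, and the chosen preset could drive both the capture format and the sender's maximum bitrate. Leaving headroom below the estimate reduces how often the preset flips back and forth.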

User Experience Enhancement

Enhancing the user experience involves refining the interface and interactions to make the app intuitive and responsive.

- UI Responsiveness: Ensure that the user interface remains responsive during network operations and data processing. Use asynchronous programming models to keep the UI thread unblocked.

- Error Handling: Implement robust error handling to manage scenarios where network conditions change abruptly or connections drop unexpectedly. Provide clear, helpful user messages for different error states.

- Accessibility: Enhance accessibility features to accommodate all users, including those with disabilities. This includes VoiceOver support, high-contrast modes, and scalable text.
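For the error-handling point, one common way to recover from a dropped signaling connection is to retry with an exponentially growing delay. The sketch below only computes that delay; the function name `reconnectDelay(forAttempt:base:cap:)` and the 0.5 second base and 30 second cap are hypothetical choices.

```swift
import Foundation

/// Delay before reconnect attempt number `attempt` (0-based):
/// base, 2 * base, 4 * base, ... limited to `cap` seconds.
func reconnectDelay(forAttempt attempt: Int,
                    base: TimeInterval = 0.5,
                    cap: TimeInterval = 30) -> TimeInterval {
    let exponent = Double(max(0, attempt))  // Treat negative attempts as the first attempt
    return min(cap, base * pow(2, exponent))
}
```

A caller would schedule the next connection attempt after this delay and reset the attempt counter once the socket reports a successful connection. Adding a small random jitter to the delay helps avoid many clients reconnecting at the same moment.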

Continuous Monitoring and Feedback

Deploy monitoring tools to track application performance and user behavior in real time.

- Analytics and Logging: Use analytics to gather data on how users interact with your application and logging to capture any errors or issues that occur.

- Feedback Mechanisms: Implement feedback forms or in-app messaging to collect user inputs directly, which can be invaluable for identifying areas for improvement.

Remember to iterate based on user feedback and emerging technologies to keep your application at the forefront of WebRTC innovations.

With the insights and strategies discussed across these sections—from setting up your development environment to detailed testing and optimization—you are well-equipped to develop, launch, and refine a high-performing WebRTC-based app on iOS.


FAQ