What is WebRTC?

Web Real-Time Communication (WebRTC) is an open-source project that gives web and mobile applications real-time communication capabilities through simple APIs. It enables audio, video, and data sharing directly between browser clients (peers). Media and data travel peer-to-peer where possible, although a signaling server is still needed to set up the connection, and STUN or TURN servers are often needed to get through NATs and firewalls. Originally open-sourced by Google, WebRTC has become a crucial component in modern web and mobile applications, powering features like video conferencing, file sharing, and live streaming.

Importance of WebRTC in Modern Web Applications

WebRTC stands out as a technology that simplifies the implementation of real-time audio and video communication. Its integration into web applications enhances user experiences by enabling instant communication directly within browsers, eliminating the need for external plugins or software. This capability is particularly valuable for applications in telehealth, remote work, online education, and social networking, where immediate, high-quality interactions are essential.

Overview of WebRTC Samples Available on GitHub

The WebRTC Samples repository on GitHub is an extensive collection of examples demonstrating the use of WebRTC APIs. These samples serve as practical guides for developers, showcasing how to implement various WebRTC features in JavaScript. From basic concepts like establishing a peer-to-peer connection to more advanced topics like adaptive bitrate streaming, the repository covers a broad spectrum of use cases. By providing hands-on examples, the WebRTC Samples repository helps developers quickly grasp and implement WebRTC functionalities, accelerating the development of robust real-time communication applications.

Let's Build a WebRTC Samples Chat App with JavaScript

Create a New WebRTC App

[a] Initial Setup and Prerequisites

Before diving into the code, ensure you have a development environment set up. You'll need a code editor (such as Visual Studio Code), Node.js, and npm (Node Package Manager) installed on your machine. These tools will help you manage dependencies and run your WebRTC application smoothly. Familiarity with JavaScript, HTML, and CSS is also recommended, as these are the core technologies used in WebRTC applications.

[b] Installing Necessary Dependencies

To start, clone the WebRTC samples repository from GitHub:

```bash
git clone https://github.com/webrtc/samples.git
cd samples
```

Next, install the required dependencies. If the project uses npm, navigate to the project directory and run:

```bash
npm install
```

This command installs all the dependencies listed in the `package.json` file, preparing your environment for development.

Structure of the Project

Overview of the Folder Structure

Understanding the project's folder structure is crucial for effective development. The WebRTC samples repository typically gives each sample its own directory, along with common assets and configuration files, so check the cloned repository for its exact layout. The app built in this article uses the following typical folder structure:

- src/: Contains the source code for the samples.

- assets/: Includes media files like images, videos, and audio used in the samples.

- styles/: Contains CSS files for styling the samples.

- scripts/: Includes JavaScript files that implement the WebRTC functionalities.

- index.html: The main HTML file that serves as the entry point for the application.

Key Files and Their Purposes

- index.html: This file sets up the basic HTML structure and links to the necessary CSS and JavaScript files.

- main.js: The main JavaScript file where the WebRTC logic is implemented. This includes initializing peer connections, handling media streams, and managing signaling.

- styles.css: The CSS file for styling the user interface elements, ensuring a cohesive look and feel.

App Architecture

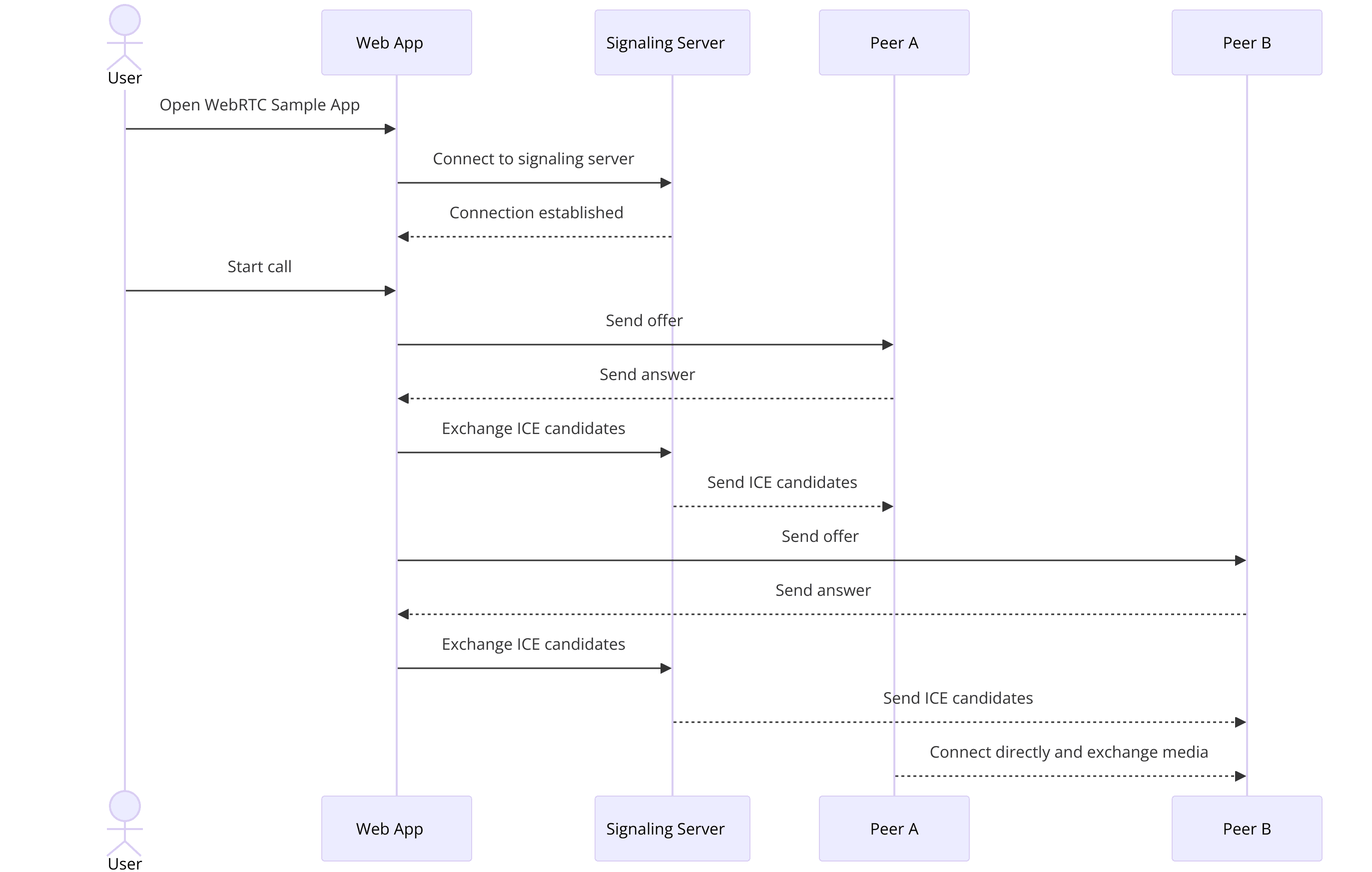

Explanation of the WebRTC App Architecture

The architecture of a WebRTC application involves several key components:

- Signaling Server: Facilitates the exchange of connection information between peers. It helps establish a connection but is not involved in the actual media transfer.

- Peer Connection: Represents the connection between two peers. It handles the transmission of media streams (audio and video) and data channels.

- Media Stream: Manages the capture and playback of media, such as audio and video. WebRTC provides APIs for accessing media devices like cameras and microphones.

- Data Channel: Allows for the transmission of arbitrary data directly between peers, enabling features like file sharing and text chat.

How Different Components Interact Within the App?

- User Interface: The HTML and CSS files define the layout and style of the application. Users interact with the UI to start calls, control media, and view participants.

- JavaScript Logic: The JavaScript files handle the core functionality, including setting up peer connections, managing signaling, and controlling media streams.

- Signaling Server: Although the signaling server is not directly part of the WebRTC samples, it's essential for initiating connections. It uses WebSockets or similar technologies to exchange session descriptions (SDP) and ICE candidates between peers.
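To make the signaling server's role concrete, here is a minimal sketch of the relay logic such a server performs, written as plain in-memory JavaScript with no network transport. The names `createRoom`, `join`, `leave`, and `relay` are hypothetical and chosen for illustration; a real server would call the same logic from its WebSocket message handler.

```javascript
// Hypothetical in-memory relay: models what a signaling server does.
// It never inspects SDP or ICE data, it only forwards messages.
function createRoom() {
  const members = new Map(); // participantId -> deliver(message) callback

  return {
    join(participantId, deliver) {
      members.set(participantId, deliver);
    },
    leave(participantId) {
      members.delete(participantId);
    },
    // Forward a message, unchanged, to everyone except the sender.
    relay(senderId, message) {
      for (const [id, deliver] of members) {
        if (id !== senderId) deliver(message);
      }
    },
  };
}

// Usage: two participants exchange an offer through the room.
const room = createRoom();
const inboxAlice = [];
const inboxBob = [];
room.join('alice', (msg) => inboxAlice.push(msg));
room.join('bob', (msg) => inboxBob.push(msg));
room.relay('alice', { type: 'offer', offer: { type: 'offer', sdp: 'placeholder-sdp' } });
console.log('bob received:', inboxBob.length, 'alice received:', inboxAlice.length);
```

Because the relay only forwards messages, the media itself never passes through it, which matches the division of labor described above.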

By understanding the structure and architecture of a WebRTC application, you'll be better equipped to implement and customize the samples provided in the GitHub repository, paving the way for creating robust real-time communication applications.

Step 1: Get Started with index.html

Setting Up index.html

Basic HTML Structure for a WebRTC App

The `index.html` file serves as the foundation of your WebRTC application. It sets up the basic HTML structure and links to the necessary CSS and JavaScript files. Below is an example of a simple `index.html` file for a WebRTC application:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>WebRTC Sample App</title>
  <link rel="stylesheet" href="styles.css">
</head>
<body>
  <h1>WebRTC Sample Application</h1>
  <div id="localVideoContainer">
    <h2>Local Video</h2>
    <video id="localVideo" autoplay playsinline></video>
  </div>
  <div id="remoteVideoContainer">
    <h2>Remote Video</h2>
    <video id="remoteVideo" autoplay playsinline></video>
  </div>
  <div id="controls">
    <button id="startButton">Start</button>
    <button id="callButton">Call</button>
    <button id="hangupButton">Hang Up</button>
  </div>
  <script src="scripts/main.js"></script>
</body>
</html>
```

In this HTML structure:

- The `<head>` section includes meta tags and links to the CSS stylesheet.
- The `<body>` contains a header, video containers for local and remote video streams, and control buttons.
- The `<script>` tag at the end links to the main JavaScript file (`main.js`) where the WebRTC logic will be implemented.

Including Necessary Scripts and Styles

Ensure you link to your CSS and JavaScript files correctly. The `styles.css` file will handle the visual styling, while the `main.js` file will contain the WebRTC functionalities. Here’s an example of what these files might include:

styles.css

```css
body {
  font-family: Arial, sans-serif;
  text-align: center;
  margin: 0;
  padding: 0;
}

video {
  width: 80%;
  max-width: 600px;
  margin: 10px 0;
}

#controls {
  margin-top: 20px;
}
```

This CSS ensures that the video elements are styled to fit nicely within the viewport and adds some basic styling to the controls.

main.js

```javascript
let localStream;
let remoteStream;
let peerConnection;

const startButton = document.getElementById('startButton');
const callButton = document.getElementById('callButton');
const hangupButton = document.getElementById('hangupButton');
const localVideo = document.getElementById('localVideo');
const remoteVideo = document.getElementById('remoteVideo');

startButton.onclick = start;
callButton.onclick = call;
hangupButton.onclick = hangUp;

// Capture camera and microphone, then preview the stream locally.
async function start() {
  localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  localVideo.srcObject = localStream;
}

async function call() {
  // Setup peer connection and add local stream
}

function hangUp() {
  // Close peer connection and stop streams
}
```

In `main.js`:

- We define variables for the local and remote streams and the peer connection.
- We get references to the buttons and video elements.
- We assign `onclick` event handlers to the buttons to call the `start`, `call`, and `hangUp` functions.
- The `start` function captures the local media stream and attaches it to the local video element.

This initial setup in `index.html` lays the groundwork for developing a full-fledged WebRTC application. By structuring your HTML correctly and linking to your CSS and JavaScript files, you ensure a clean and maintainable starting point for adding more complex WebRTC functionalities.

Step 2: Wireframe All the Components

Creating the Wireframe

Designing the User Interface

A well-designed user interface (UI) is crucial for the usability and aesthetics of your WebRTC application. The wireframe is a visual guide that represents the skeletal framework of the application. Here's a step-by-step guide to creating the wireframe for your WebRTC app.

Layout Planning for WebRTC Components

- Header Section: A simple header that includes the title of the application.

- Video Containers: Two sections to display the local and remote video streams.

- Control Buttons: Buttons to start the video stream, initiate a call, and hang up the call.

Using CSS for Styling

To enhance the user interface, you can use CSS to style the components. Here’s an example of how to style the application:

index.html

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>WebRTC Sample App</title>
  <link rel="stylesheet" href="styles.css">
</head>
<body>
  <header>
    <h1>WebRTC Sample Application</h1>
  </header>
  <main>
    <section id="localVideoContainer">
      <h2>Local Video</h2>
      <video id="localVideo" autoplay playsinline></video>
    </section>
    <section id="remoteVideoContainer">
      <h2>Remote Video</h2>
      <video id="remoteVideo" autoplay playsinline></video>
    </section>
    <div id="controls">
      <button id="startButton">Start</button>
      <button id="callButton">Call</button>
      <button id="hangupButton">Hang Up</button>
    </div>
  </main>
  <script src="scripts/main.js"></script>
</body>
</html>
```

styles.css

```css
body {
  font-family: Arial, sans-serif;
  text-align: center;
  margin: 0;
  padding: 0;
  background-color: #f4f4f9;
}

header {
  background-color: #4CAF50;
  color: white;
  padding: 10px 0;
}

main {
  padding: 20px;
}

section {
  margin-bottom: 20px;
}

video {
  width: 80%;
  max-width: 600px;
  border: 2px solid #ccc;
  box-shadow: 0 2px 8px rgba(0, 0, 0, 0.1);
}

#controls {
  margin-top: 20px;
}

button {
  margin: 5px;
  padding: 10px 20px;
  font-size: 16px;
  border: none;
  border-radius: 5px;
  background-color: #4CAF50;
  color: white;
  cursor: pointer;
}

button:hover {
  background-color: #45a049;
}
```

This CSS enhances the visual appearance of the app by:

- Setting a background color for the body.

- Styling the header with a green background and white text.

- Adding padding and margin to the main content and sections.

- Applying a border and shadow to video elements for better visibility.

- Styling buttons with a green background, white text, and hover effects.

By following this wireframe and styling guide, you can create a visually appealing and user-friendly interface for your WebRTC application. This foundation will make it easier to integrate and test WebRTC functionalities in a structured environment.

Step 3: Implement Join Screen

Join Screen Implementation

HTML Structure for the Join Screen

To enable users to join a WebRTC session, you need to create a join screen where they can enter necessary information, such as their name and the session ID. Here’s an example of how to set up the HTML for the join screen:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>WebRTC Sample App</title>
  <link rel="stylesheet" href="styles.css">
</head>
<body>
  <header>
    <h1>WebRTC Sample Application</h1>
  </header>
  <main>
    <section id="joinScreen">
      <h2>Join a Session</h2>
      <form id="joinForm">
        <input type="text" id="name" placeholder="Enter your name" required>
        <input type="text" id="sessionId" placeholder="Enter session ID" required>
        <button type="submit">Join</button>
      </form>
    </section>
    <section id="localVideoContainer" style="display: none;">
      <h2>Local Video</h2>
      <video id="localVideo" autoplay playsinline></video>
    </section>
    <section id="remoteVideoContainer" style="display: none;">
      <h2>Remote Video</h2>
      <video id="remoteVideo" autoplay playsinline></video>
    </section>
    <div id="controls" style="display: none;">
      <button id="startButton">Start</button>
      <button id="callButton">Call</button>
      <button id="hangupButton">Hang Up</button>
    </div>
  </main>
  <script src="scripts/main.js"></script>
</body>
</html>
```

In this structure:

- The `joinScreen` section contains a form for users to enter their name and session ID.
- The video containers and control buttons are hidden by default and will be shown after the user joins a session.
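The `required` attribute on the inputs only rejects empty values, so a name made of spaces would still be accepted. The sketch below shows one way to validate the join inputs before starting a session. The helper name `validateJoinInput` and the session ID rule (3 to 64 letters, digits, hyphens, or underscores) are assumptions made for illustration, not requirements of WebRTC.

```javascript
// Hypothetical helper: the field rules below are assumptions for illustration.
function validateJoinInput(name, sessionId) {
  const errors = [];
  const trimmedName = String(name ?? '').trim();
  const trimmedSession = String(sessionId ?? '').trim();

  if (trimmedName.length === 0) {
    errors.push('Name is required.');
  }
  // Assumed rule: 3-64 characters from letters, digits, "-" and "_".
  if (!/^[A-Za-z0-9_-]{3,64}$/.test(trimmedSession)) {
    errors.push('Session ID must be 3-64 letters, digits, "-" or "_".');
  }

  return {
    ok: errors.length === 0,
    name: trimmedName,
    sessionId: trimmedSession,
    errors,
  };
}

// Usage: one valid and one invalid submission.
console.log(validateJoinInput('  Ada ', 'room-42'));
console.log(validateJoinInput('   ', 'a b'));
```

In the browser, the form's submit handler could call this helper with the two input values and show `errors` next to the form when `ok` is false.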

JavaScript Functionality for User Inputs

Next, you need to handle the form submission and manage user inputs using JavaScript. Here’s how you can do it in `main.js`:

```javascript
const joinForm = document.getElementById('joinForm');
const joinScreen = document.getElementById('joinScreen');
const localVideoContainer = document.getElementById('localVideoContainer');
const remoteVideoContainer = document.getElementById('remoteVideoContainer');
const controls = document.getElementById('controls');

joinForm.addEventListener('submit', async (event) => {
  // Keep the browser from reloading the page on submit.
  event.preventDefault();

  const name = document.getElementById('name').value;
  const sessionId = document.getElementById('sessionId').value;

  if (name && sessionId) {
    // Hide the join screen
    joinScreen.style.display = 'none';

    // Show the video containers and controls
    localVideoContainer.style.display = 'block';
    remoteVideoContainer.style.display = 'block';
    controls.style.display = 'block';

    // Initialize WebRTC connection here
    await start();
  }
});

let localStream;
let remoteStream;
let peerConnection;

const startButton = document.getElementById('startButton');
const callButton = document.getElementById('callButton');
const hangupButton = document.getElementById('hangupButton');
const localVideo = document.getElementById('localVideo');
const remoteVideo = document.getElementById('remoteVideo');

startButton.onclick = start;
callButton.onclick = call;
hangupButton.onclick = hangUp;

async function start() {
  try {
    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localVideo.srcObject = localStream;
  } catch (error) {
    console.error('Error accessing media devices.', error);
  }
}

async function call() {
  // Setup peer connection and add local stream
}

function hangUp() {
  // Close peer connection and stop streams
}
```

Connecting to the Signaling Server

A signaling server is essential for WebRTC applications to exchange session descriptions and ICE candidates between peers. Here’s a simple implementation using WebSockets:

[a] Set up the WebSocket connection

```javascript
const signalingServerUrl = 'wss://your-signaling-server-url';
const signalingSocket = new WebSocket(signalingServerUrl);

signalingSocket.onopen = () => {
  console.log('Connected to the signaling server');
};

signalingSocket.onmessage = (message) => {
  const data = JSON.parse(message.data);
  handleSignalingData(data);
};

function handleSignalingData(data) {
  // Handle the received signaling data (SDP and ICE candidates)
}
```

[b] Send signaling data

```javascript
function sendSignalingData(data) {
  // send() throws if the socket is still connecting, so check first.
  if (signalingSocket.readyState !== WebSocket.OPEN) {
    console.warn('Signaling socket is not open; message dropped.');
    return;
  }
  signalingSocket.send(JSON.stringify(data));
}
```

[c] Exchange SDP and ICE candidates

```javascript
async function call() {
  peerConnection = new RTCPeerConnection();

  // Forward each locally gathered ICE candidate to the other peer.
  peerConnection.onicecandidate = (event) => {
    if (event.candidate) {
      sendSignalingData({
        type: 'candidate',
        candidate: event.candidate,
      });
    }
  };

  // Show the remote media as soon as the first track arrives.
  peerConnection.ontrack = (event) => {
    remoteVideo.srcObject = event.streams[0];
  };

  localStream.getTracks().forEach((track) => {
    peerConnection.addTrack(track, localStream);
  });

  const offer = await peerConnection.createOffer();
  await peerConnection.setLocalDescription(offer);

  sendSignalingData({
    type: 'offer',
    offer: offer,
  });
}

// Replaces the placeholder onmessage handler from step [a].
signalingSocket.onmessage = (message) => {
  const data = JSON.parse(message.data);

  switch (data.type) {
    case 'offer':
      handleOffer(data.offer);
      break;
    case 'answer':
      handleAnswer(data.answer);
      break;
    case 'candidate':
      handleCandidate(data.candidate);
      break;
    default:
      break;
  }
};

async function handleOffer(offer) {
  peerConnection = new RTCPeerConnection();

  peerConnection.onicecandidate = (event) => {
    if (event.candidate) {
      sendSignalingData({
        type: 'candidate',
        candidate: event.candidate,
      });
    }
  };

  peerConnection.ontrack = (event) => {
    remoteVideo.srcObject = event.streams[0];
  };

  await peerConnection.setRemoteDescription(new RTCSessionDescription(offer));

  // The callee may not have started its camera yet, so guard the access.
  if (localStream) {
    localStream.getTracks().forEach((track) => {
      peerConnection.addTrack(track, localStream);
    });
  }

  const answer = await peerConnection.createAnswer();
  await peerConnection.setLocalDescription(answer);

  sendSignalingData({
    type: 'answer',
    answer: answer,
  });
}

async function handleAnswer(answer) {
  await peerConnection.setRemoteDescription(new RTCSessionDescription(answer));
}

async function handleCandidate(candidate) {
  try {
    await peerConnection.addIceCandidate(new RTCIceCandidate(candidate));
  } catch (error) {
    // Fails if the candidate arrives before the remote description is set.
    console.error('Error adding ICE candidate.', error);
  }
}
```

This implementation sets up the join screen, handles user inputs, and establishes a connection to the signaling server. The next steps will involve integrating these functionalities into a cohesive WebRTC application.
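One practical detail in the exchange above is ordering: `addIceCandidate` rejects if it is called before the remote description has been set, and candidates can arrive over the signaling channel before the offer or answer has been applied. A common remedy is to buffer early candidates. The sketch below shows the idea in plain JavaScript; `createCandidateQueue` and the `applyCandidate` callback are hypothetical names, and in the browser the callback would wrap `peerConnection.addIceCandidate(candidate)`.

```javascript
// Sketch: buffer ICE candidates until the remote description is set.
// `applyCandidate` is a hypothetical callback supplied by the caller.
function createCandidateQueue(applyCandidate) {
  const pending = [];
  let ready = false;

  return {
    // Call for every candidate received from the signaling server.
    add(candidate) {
      if (ready) {
        applyCandidate(candidate);
      } else {
        pending.push(candidate);
      }
    },
    // Call once setRemoteDescription() has resolved; flushes in arrival order.
    markRemoteDescriptionSet() {
      ready = true;
      while (pending.length > 0) {
        applyCandidate(pending.shift());
      }
    },
    // Number of candidates still waiting.
    get size() {
      return pending.length;
    },
  };
}

// Usage: two candidates arrive early, one arrives after the description is set.
const applied = [];
const queue = createCandidateQueue((candidate) => applied.push(candidate));
queue.add({ candidate: 'candidate-1' });
queue.add({ candidate: 'candidate-2' });
console.log('applied before description:', applied.length);
queue.markRemoteDescriptionSet();
queue.add({ candidate: 'candidate-3' });
console.log('applied after description:', applied.map((c) => c.candidate));
```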

Step 4: Implement Controls

Controls Implementation

Adding Video and Audio Controls

To provide a seamless user experience, adding controls for managing video and audio streams is essential. These controls enable users to start and stop their video or audio streams as needed.

HTML Structure for Controls

The HTML structure for controls is already included in the previous sections. It includes buttons for starting the video stream, initiating a call, and hanging up the call. Here’s a quick recap:

```html
<div id="controls" style="display: none;">
  <button id="startButton">Start</button>
  <button id="callButton">Call</button>
  <button id="hangupButton">Hang Up</button>
</div>
```

JavaScript Code for Handling Controls

We will enhance the existing JavaScript code to implement the functionality of these buttons.

main.js:

```javascript
let localStream;
let remoteStream;
let peerConnection;
const startButton = document.getElementById('startButton');
const callButton = document.getElementById('callButton');
const hangupButton = document.getElementById('hangupButton');
const localVideo = document.getElementById('localVideo');
const remoteVideo = document.getElementById('remoteVideo');

startButton.onclick = start;
callButton.onclick = call;
hangupButton.onclick = hangUp;

async function start() {
  try {
    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localVideo.srcObject = localStream;
    console.log('Local stream started');
  } catch (error) {
    console.error('Error accessing media devices.', error);
  }
}

async function call() {
  peerConnection = new RTCPeerConnection();

  peerConnection.onicecandidate = (event) => {
    if (event.candidate) {
      sendSignalingData({
        type: 'candidate',
        candidate: event.candidate,
      });
    }
  };

  peerConnection.ontrack = (event) => {
    remoteVideo.srcObject = event.streams[0];
  };

  localStream.getTracks().forEach((track) => {
    peerConnection.addTrack(track, localStream);
  });

  try {
    const offer = await peerConnection.createOffer();
    await peerConnection.setLocalDescription(offer);
    sendSignalingData({
      type: 'offer',
      offer: offer,
    });
    console.log('Call initiated');
  } catch (error) {
    console.error('Error creating offer.', error);
  }
}

function hangUp() {
  if (peerConnection) {
    peerConnection.close();
    peerConnection = null;
    console.log('Call ended');
  }

  // Stop all local stream tracks (the stream may not exist if Start was never clicked)
  if (localStream) {
    localStream.getTracks().forEach((track) => track.stop());
    localStream = null;
  }
  localVideo.srcObject = null;
  remoteVideo.srcObject = null;
}
```

User Experience Enhancements

To improve user experience, you can add visual feedback to indicate the status of the call and controls. For example, you can disable the "Call" button once the call is initiated and enable the "Hang Up" button.

Enhanced JavaScript Code for User Experience

```javascript
startButton.onclick = start;
callButton.onclick = call;
hangupButton.onclick = hangUp;

// Initial state: nothing to call or hang up until the local stream exists.
callButton.disabled = true;
hangupButton.disabled = true;

async function start() {
  try {
    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localVideo.srcObject = localStream;
    startButton.disabled = true;
    callButton.disabled = false;
    console.log('Local stream started');
  } catch (error) {
    console.error('Error accessing media devices.', error);
  }
}

async function call() {
  peerConnection = new RTCPeerConnection();

  peerConnection.onicecandidate = (event) => {
    if (event.candidate) {
      sendSignalingData({
        type: 'candidate',
        candidate: event.candidate,
      });
    }
  };

  peerConnection.ontrack = (event) => {
    remoteVideo.srcObject = event.streams[0];
  };

  localStream.getTracks().forEach((track) => {
    peerConnection.addTrack(track, localStream);
  });

  try {
    const offer = await peerConnection.createOffer();
    await peerConnection.setLocalDescription(offer);
    sendSignalingData({
      type: 'offer',
      offer: offer,
    });
    callButton.disabled = true;
    hangupButton.disabled = false;
    console.log('Call initiated');
  } catch (error) {
    console.error('Error creating offer.', error);
  }
}

function hangUp() {
  if (peerConnection) {
    peerConnection.close();
    peerConnection = null;
    console.log('Call ended');
  }

  // Stop all local stream tracks (the stream may not exist if Start was never clicked)
  if (localStream) {
    localStream.getTracks().forEach((track) => track.stop());
    localStream = null;
  }
  localVideo.srcObject = null;
  remoteVideo.srcObject = null;

  startButton.disabled = false;
  callButton.disabled = true;
  hangupButton.disabled = true;
}
```

In this code:

- The `startButton` is disabled after starting the local stream.
- The `callButton` starts out disabled, is enabled after starting the local stream, and is disabled again after initiating the call.
- The `hangupButton` starts out disabled, is enabled after the call is initiated, and is disabled after hanging up.

By implementing these controls and user experience enhancements, you provide users with a more intuitive and responsive WebRTC application. This setup ensures that users can easily manage their video and audio streams and have a clear understanding of the application's state during a call.
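As the number of controls grows, toggling `disabled` flags in several functions becomes easy to get wrong. One alternative, sketched below, is to derive all button flags from a single call state. The state names (`idle`, `streaming`, `inCall`) and the helper names are assumptions for illustration and are not part of any WebRTC API; the table mirrors the enable and disable rules described above.

```javascript
// Sketch: derive every button's `disabled` flag from one call state.
// State names are hypothetical; `true` means the button is disabled.
const BUTTON_STATES = new Map([
  ['idle',      { start: false, call: true,  hangup: true  }],
  ['streaming', { start: true,  call: false, hangup: true  }],
  ['inCall',    { start: true,  call: true,  hangup: false }],
]);

function buttonFlags(state) {
  const flags = BUTTON_STATES.get(state);
  if (!flags) {
    throw new Error(`Unknown call state: ${state}`);
  }
  return { ...flags };
}

// Works with any objects that have a `disabled` property,
// which in the browser would be the three button elements.
function applyButtonState(state, buttons) {
  const flags = buttonFlags(state);
  buttons.start.disabled = flags.start;
  buttons.call.disabled = flags.call;
  buttons.hangup.disabled = flags.hangup;
}

// Usage with stand-in objects instead of DOM buttons.
const fakeButtons = { start: {}, call: {}, hangup: {} };
applyButtonState('streaming', fakeButtons);
console.log(fakeButtons);
```

With this approach, `start`, `call`, and `hangUp` would each end with a single `applyButtonState(...)` call instead of setting individual flags.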

Step 5: Implement Participant View

Participant View Implementation

Displaying Video Streams

To create a robust WebRTC application, it's essential to effectively display video streams from multiple participants. This involves capturing the local stream, displaying it, and then dynamically updating the UI to include streams from remote participants.

HTML Structure

The HTML structure for the participant view has already been partially set up. Here's a recap with minor adjustments to accommodate multiple participants:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>WebRTC Sample App</title>
  <link rel="stylesheet" href="styles.css">
</head>
<body>
  <header>
    <h1>WebRTC Sample Application</h1>
  </header>
  <main>
    <section id="joinScreen">
      <h2>Join a Session</h2>
      <form id="joinForm">
        <input type="text" id="name" placeholder="Enter your name" required>
        <input type="text" id="sessionId" placeholder="Enter session ID" required>
        <button type="submit">Join</button>
      </form>
    </section>
    <section id="localVideoContainer" style="display: none;">
      <h2>Local Video</h2>
      <video id="localVideo" autoplay playsinline></video>
    </section>
    <section id="remoteVideosContainer" style="display: none;">
      <h2>Remote Videos</h2>
      <div id="remoteVideos"></div>
    </section>
    <div id="controls" style="display: none;">
      <button id="startButton">Start</button>
      <button id="callButton">Call</button>
      <button id="hangupButton">Hang Up</button>
    </div>
  </main>
  <script src="scripts/main.js"></script>
</body>
</html>
```

Note that the remote section's id is now `remoteVideosContainer`, so the join-form handler from Step 3 must look up that id instead of `remoteVideoContainer` when it reveals the video sections.

JavaScript Code for Handling Multiple Participants

To handle multiple participants, update the JavaScript code to manage multiple video elements dynamically. Here’s how you can do it in `main.js`:

```javascript
let localStream;
let signalingSocket;
const peerConnections = {}; // Store peer connections for each participant
const remoteVideoElements = {}; // One <video> per remote stream, keyed by stream id
const startButton = document.getElementById('startButton');
const callButton = document.getElementById('callButton');
const hangupButton = document.getElementById('hangupButton');
const localVideo = document.getElementById('localVideo');
const remoteVideos = document.getElementById('remoteVideos');

startButton.onclick = start;
callButton.onclick = call;
hangupButton.onclick = hangUp;

async function start() {
  try {
    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localVideo.srcObject = localStream;
    startButton.disabled = true;
    callButton.disabled = false;
    console.log('Local stream started');
  } catch (error) {
    console.error('Error accessing media devices.', error);
  }
}

async function call() {
  const signalingServerUrl = 'wss://your-signaling-server-url';
  signalingSocket = new WebSocket(signalingServerUrl);

  signalingSocket.onmessage = (message) => {
    const data = JSON.parse(message.data);
    handleSignalingData(data);
  };

  signalingSocket.onclose = () => {
    console.log('Signaling connection closed');
  };

  // Wait until the socket is open: calling send() while it is still
  // connecting throws an InvalidStateError.
  try {
    await new Promise((resolve, reject) => {
      signalingSocket.onopen = resolve;
      signalingSocket.onerror = reject;
    });
    console.log('Connected to the signaling server');
  } catch (error) {
    console.error('Signaling error:', error);
    return;
  }

  signalingSocket.onerror = (error) => {
    console.error('Signaling error:', error);
  };

  function handleSignalingData(data) {
    switch (data.type) {
      case 'offer':
        handleOffer(data.offer, data.sender);
        break;
      case 'answer':
        handleAnswer(data.answer, data.sender);
        break;
      case 'candidate':
        handleCandidate(data.candidate, data.sender);
        break;
      default:
        break;
    }
  }

  function sendSignalingData(data) {
    signalingSocket.send(JSON.stringify(data));
  }

  function createPeerConnection(participantId) {
    const peerConnection = new RTCPeerConnection();

    peerConnection.onicecandidate = (event) => {
      if (event.candidate) {
        sendSignalingData({
          type: 'candidate',
          candidate: event.candidate,
          sender: participantId,
        });
      }
    };

    peerConnection.ontrack = (event) => {
      const [stream] = event.streams;
      // ontrack fires once per track (audio and video), so create only
      // one <video> element per remote stream.
      if (!stream || remoteVideoElements[stream.id]) {
        return;
      }
      const remoteVideo = document.createElement('video');
      remoteVideo.srcObject = stream;
      remoteVideo.autoplay = true;
      remoteVideo.playsInline = true; // the DOM property is camel-cased
      remoteVideos.appendChild(remoteVideo);
      remoteVideoElements[stream.id] = remoteVideo;
    };

    localStream.getTracks().forEach((track) => {
      peerConnection.addTrack(track, localStream);
    });

    return peerConnection;
  }

  async function handleOffer(offer, sender) {
    const peerConnection = createPeerConnection(sender);
    peerConnections[sender] = peerConnection;

    await peerConnection.setRemoteDescription(new RTCSessionDescription(offer));
    const answer = await peerConnection.createAnswer();
    await peerConnection.setLocalDescription(answer);

    sendSignalingData({
      type: 'answer',
      answer: answer,
      sender: sender,
    });
  }

  async function handleAnswer(answer, sender) {
    const peerConnection = peerConnections[sender];
    await peerConnection.setRemoteDescription(new RTCSessionDescription(answer));
  }

  async function handleCandidate(candidate, sender) {
    const peerConnection = peerConnections[sender];
    await peerConnection.addIceCandidate(new RTCIceCandidate(candidate));
  }

  // Note: the fixed 'local' key is echoed back in every message, so this
  // sketch pairs one caller with one callee. A mesh with more peers needs
  // a unique participant ID per peer, typically assigned by the signaling server.
  peerConnections['local'] = createPeerConnection('local');
  const offer = await peerConnections['local'].createOffer();
  await peerConnections['local'].setLocalDescription(offer);

  sendSignalingData({
    type: 'offer',
    offer: offer,
    sender: 'local',
  });

  callButton.disabled = true;
  hangupButton.disabled = false;
  console.log('Call initiated');
}

function hangUp() {
  Object.keys(peerConnections).forEach((id) => {
    peerConnections[id].close();
    delete peerConnections[id];
  });
  Object.keys(remoteVideoElements).forEach((id) => delete remoteVideoElements[id]);

  if (signalingSocket) {
    signalingSocket.close();
    signalingSocket = null;
  }

  remoteVideos.innerHTML = '';
  if (localStream) {
    localStream.getTracks().forEach((track) => track.stop());
    localStream = null;
  }
  localVideo.srcObject = null;

  startButton.disabled = false;
  callButton.disabled = true;
  hangupButton.disabled = true;

  console.log('Call ended');
}
```

Explanation of the JavaScript Code

- start: This function captures the local media stream and attaches it to the local video element. It also updates the UI by disabling the start button and enabling the call button.

- call: This function connects to the signaling server using WebSockets, handles incoming signaling messages, and manages peer connections for multiple participants. It creates a new peer connection for each participant and sends offers and answers as needed.

- handleSignalingData: This function processes incoming signaling messages, such as offers, answers, and ICE candidates, and directs them to the appropriate handler functions.

- createPeerConnection: This function creates a new RTCPeerConnection, sets up event handlers for ICE candidates and track events, and adds local tracks to the connection.

- handleOffer: This function handles incoming offers, creates a new peer connection, sets the remote description, and sends an answer back to the sender.

- handleAnswer: This function sets the remote description for incoming answers.

- handleCandidate: This function adds ICE candidates to the appropriate peer connection.

- hangUp: This function closes all peer connections, stops local media tracks, clears the remote videos, and updates the UI.
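One subtlety behind the participant view is that the `track` event fires once per track, so a remote peer sending both audio and video triggers it twice for the same stream. The sketch below isolates the bookkeeping that keeps the view at one tile per remote stream. `createTileRegistry` and the `createTile` factory are hypothetical names; in the browser, the factory would create a `<video>` element, set its `srcObject`, and append it to `#remoteVideos`.

```javascript
// Sketch: one tile per remote stream, however many tracks arrive.
// `createTile` is a hypothetical factory supplied by the caller.
function createTileRegistry(createTile) {
  const tiles = new Map(); // stream.id -> tile

  return {
    // Call from peerConnection.ontrack with event.streams[0].
    onTrack(stream) {
      if (!tiles.has(stream.id)) {
        tiles.set(stream.id, createTile(stream));
      }
      return tiles.get(stream.id);
    },
    // Call when a participant leaves; returns true if a tile was removed.
    remove(streamId) {
      return tiles.delete(streamId);
    },
    get count() {
      return tiles.size;
    },
  };
}

// Usage with plain objects standing in for MediaStream and <video>.
let tilesCreated = 0;
const registry = createTileRegistry((stream) => ({ tileNumber: ++tilesCreated, stream }));
const streamA = { id: 'stream-a' };
registry.onTrack(streamA); // audio track arrives
registry.onTrack(streamA); // video track arrives for the same stream
registry.onTrack({ id: 'stream-b' });
console.log('tiles:', registry.count);
```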

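By implementing these steps, you can create a WebRTC application that dynamically updates the participant view as remote streams arrive. Keep in mind that in a peer-to-peer mesh every participant holds a separate connection to every other participant, so this approach suits small groups; supporting more than two peers also requires the signaling server to give each participant a unique ID.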
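The examples in this article call `new RTCPeerConnection()` with no configuration, which is usually enough when both peers are on the same network. Across different networks, peers generally need STUN (and sometimes TURN) servers to discover a working route. The sketch below builds the configuration object that `RTCPeerConnection` accepts. The helper name `buildRtcConfig` is hypothetical, and the server URLs and credentials are placeholders that must be replaced with servers you actually operate or are permitted to use.

```javascript
// Sketch: build an RTCConfiguration-shaped object.
// All hostnames and credentials below are placeholders.
function buildRtcConfig({ stunUrls = [], turn = null } = {}) {
  const iceServers = [];

  if (stunUrls.length > 0) {
    iceServers.push({ urls: stunUrls });
  }
  if (turn) {
    // TURN servers require credentials, unlike STUN servers.
    iceServers.push({
      urls: turn.urls,
      username: turn.username,
      credential: turn.credential,
    });
  }

  return { iceServers };
}

const rtcConfig = buildRtcConfig({
  stunUrls: ['stun:stun.example.org:3478'],
  turn: {
    urls: 'turn:turn.example.org:3478',
    username: 'demo-user',
    credential: 'demo-pass',
  },
});

// In the browser: const peerConnection = new RTCPeerConnection(rtcConfig);
console.log(JSON.stringify(rtcConfig, null, 2));
```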

Conclusion

In this article, we walked through the process of creating a WebRTC application using the WebRTC samples available on GitHub. We started by setting up the basic HTML structure, then implemented a join screen, added video and audio controls, and finally handled multiple participants in the participant view. By following these steps, you can build a robust WebRTC application that supports real-time communication with multiple participants. Exploring and expanding upon these samples will help you understand WebRTC's capabilities and apply them to your own projects.
