Introduction to Synapse WebRTC Technology

What is Synapse WebRTC?

In the realm of modern communication, Synapse and WebRTC stand out as revolutionary technologies designed to foster secure and decentralized interactions. Synapse, an open-source homeserver implementation of the Matrix protocol, provides a robust framework for decentralized communication. The Matrix protocol itself is an open standard for decentralized, real-time communication, designed to securely link together a variety of communication systems. Synapse acts as the backbone for this protocol, enabling users to host their own servers and maintain control over their data.

WebRTC (Web Real-Time Communication), on the other hand, is a set of browser APIs and protocols for exchanging real-time audio, video, and arbitrary data directly between browsers and devices. Media flows peer to peer whenever the network allows it, which keeps latency low. A signaling channel is still needed to set up each session, and STUN or TURN servers are typically needed to get through NATs and firewalls. WebRTC is widely adopted in applications such as video conferencing, live streaming, and online gaming because of its low latency and encrypted media transport.

Why Integrate Synapse with WebRTC?

The integration of Synapse with WebRTC combines the strengths of both technologies, offering a powerful solution for secure, decentralized real-time communication. By leveraging Synapse's decentralized architecture, users can ensure their communication data remains under their control, free from the vulnerabilities associated with centralized servers. WebRTC enhances this setup by providing seamless, high-quality media streaming directly between users, ensuring that conversations are not only secure but also smooth and responsive.

Overview of Synapse WebRTC Integration

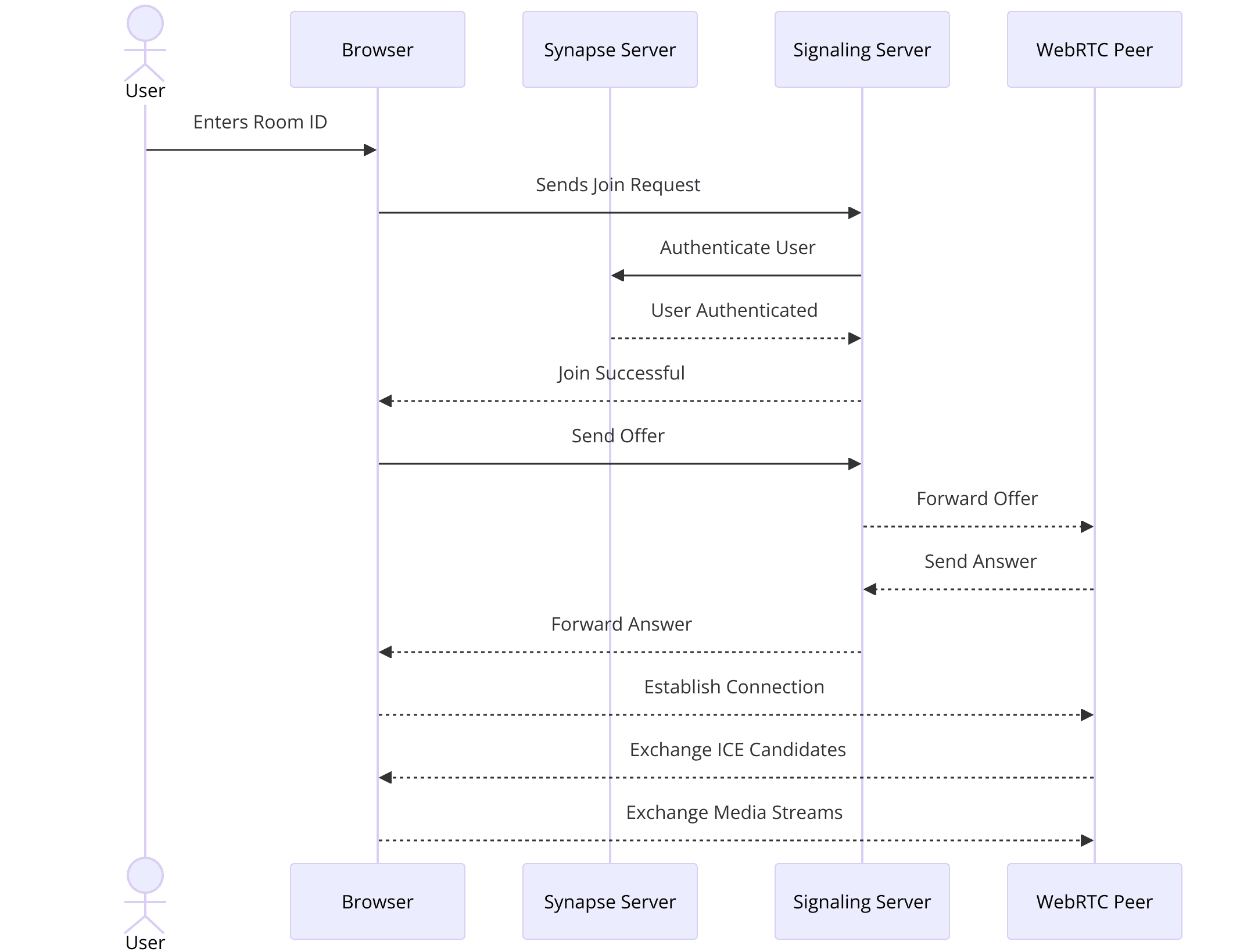

Integrating Synapse with WebRTC involves setting up a Synapse server to manage user identities, sessions, and signaling, while WebRTC handles the direct media transmission between participants. This combination allows developers to build applications whose media is encrypted in transit between peers (WebRTC mandates DTLS-SRTP for media), preserving privacy and security. Whether for personal use, enterprise communication, or public platforms, Synapse WebRTC offers a versatile and secure solution for modern communication needs.
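To make the signaling role more concrete: in the Matrix specification, one-to-one call setup travels as room events such as `m.call.invite`, `m.call.answer`, `m.call.candidates`, and `m.call.hangup`, which a homeserver like Synapse relays like any other event. The sketch below builds the content of an `m.call.invite` event. The helper name `build_call_invite`, the 60-second lifetime, and the placeholder SDP are assumptions made for illustration; the Matrix specification remains the authoritative source for the field list.

```python
import json
import uuid


def build_call_invite(sdp: str, lifetime_ms: int = 60_000) -> dict:
    """Return the content of an m.call.invite event (illustrative helper)."""
    return {
        "call_id": uuid.uuid4().hex,  # unique identifier for this call
        "version": 0,                 # legacy VoIP version; newer clients send "1"
        "lifetime": lifetime_ms,      # how long the invite stays valid, in ms
        "offer": {"type": "offer", "sdp": sdp},
    }


invite = build_call_invite("v=0\r\n...placeholder SDP...")
print(json.dumps(invite, indent=2))
```

The rest of this guide uses a simpler HTTP-based exchange instead of Matrix call events, but the payload has the same shape: a session description with a `type` and an `sdp` string.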

In the following sections, we will delve into the step-by-step process of creating a Synapse WebRTC application, covering everything from setting up the development environment to implementing essential features such as join screens, controls, and participant views.

Getting Started with the Code

In this section, we will walk you through the initial setup and structure of a Synapse WebRTC application. By the end of this part, you should have a working development environment and a clear understanding of the project's architecture.

Create a New Synapse WebRTC App

[a] Set Up the Development Environment

Ensure you have Python installed on your system. You can download it from python.org.

Install virtualenv to create an isolated Python environment:

```bash
pip install virtualenv
```

Create a virtual environment for your project:

```bash
virtualenv synapse-webrtc-env
```

Activate the virtual environment:

On Windows:

```bash
.\synapse-webrtc-env\Scripts\activate
```

On macOS/Linux:

```bash
source synapse-webrtc-env/bin/activate
```
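If you are unsure whether the activation worked, a quick check from Python can help. This is a minimal sketch: the function name `in_virtualenv` is made up for this example, and it relies only on the standard `sys.prefix` and `sys.base_prefix` attributes.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv/virtualenv."""
    # Inside a virtual environment, sys.prefix points at the environment,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("virtual environment active:", in_virtualenv())
print("interpreter:", sys.executable)
```

When the environment is active, the interpreter path printed on the second line should sit inside the `synapse-webrtc-env` directory.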

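[b] Clone the Synapse Repository

Navigate to the directory where you want to set up the project and clone the Synapse repository from GitHub:

```bash
git clone https://github.com/matrix-org/synapse.git
```

Change into the Synapse directory:

```bash
cd synapse
```

[c] Install Dependencies

Install the required Python packages. Synapse declares its dependencies in `pyproject.toml` rather than in a `requirements.txt`, so install the checkout itself (building from source may also require a Rust toolchain). The signaling code later in this guide additionally uses aiohttp and aiortc:

```bash
pip install .
pip install aiohttp aiortc
```

Structure of the Project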

Understanding the project's folder structure is crucial for efficient development. Here’s an overview of the key directories and files:

synapse/: The main directory containing all Synapse server code.

- synapse/app/: Contains the main application logic.

- synapse/api/: Defines the API endpoints and their logic.

- synapse/handlers/: Contains handlers for various functionalities such as messaging, presence, and more.

- synapse/rest/: Contains REST APIs for interacting with the Synapse server.

- synapse/storage/: Manages database interactions and storage.

- config/: Configuration files for setting up and customizing the Synapse server.

- scripts/: Utility scripts for managing and maintaining the Synapse server.

App Architecture

- Synapse Server: Manages user identities, authentication, and signaling for WebRTC connections. It ensures that all communication channels are secure and decentralized.

- WebRTC: Handles the peer-to-peer media transmission between users. WebRTC APIs facilitate real-time audio, video, and data sharing directly between devices.

- Frontend Interface: The user interface where participants interact. This can be a web or mobile application that integrates with the Synapse server and utilizes WebRTC for media transmission.

By following this structure, you can ensure that your application is modular, maintainable, and scalable. Each component has a specific role, and understanding these roles will help in efficiently developing and troubleshooting your Synapse WebRTC application.

In the next section, we will start by setting up the main script for initializing the Synapse server and configuring it for WebRTC integration. Stay tuned!

Step 1: Get Started with Main.py

In this section, we will set up the main script to initialize the Synapse server and configure it for WebRTC integration. This step involves writing the initial code to get your server up and running, ensuring it can handle WebRTC signaling and user authentication.

Setup Main.py

Initialize the Synapse Server

First, create a new file named `main.py` in the root directory of your project. Import the necessary modules and initialize the Synapse server:

```python
import logging

from synapse.app.homeserver import SynapseHomeServer
from synapse.config.homeserver import HomeServerConfig
# LoggingContext lives in synapse.logging.context in current Synapse releases
# (older releases exposed it as synapse.util.logcontext).
from synapse.logging.context import LoggingContext

# Set up logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Load the homeserver configuration from homeserver.yaml
config_path = "homeserver.yaml"
config = HomeServerConfig.load_config("Synapse", ["-c", config_path])

# Initialize the Synapse server.
# Note: these are Synapse-internal classes whose attributes and constructor
# arguments have changed between releases; check them against your version.
server = SynapseHomeServer(
    hostname=config.server_name,
    config=config,
    version_string="Synapse",
)

logger.info("Synapse server initialized successfully")
```

Configuration Settings for WebRTC Integration

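Update your `homeserver.yaml` configuration file to include settings specific to WebRTC signaling:

```yaml
webclient:
  webclient_location: "http://localhost:8080"
signaling:
  enabled: true
  bind_addresses: ["0.0.0.0"]
  port: 8443
```

This configuration is intended to expose WebRTC signaling on port 8443 on all network interfaces. Note that `webclient` and `signaling` are not part of Synapse's documented configuration options, and in this guide the signaling endpoints on port 8443 are actually served by the aiohttp application added in Step 2, so treat this block as illustrative.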
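The WebRTC-related settings that Synapse itself documents are its TURN options (`turn_uris`, `turn_shared_secret`, `turn_user_lifetime`), which let clients obtain relay credentials for calls. With a shared secret, the credentials follow the time-limited scheme used by coturn's `use-auth-secret` mode: the username is an expiry timestamp plus a user ID, and the password is a base64-encoded HMAC-SHA1 of that username. A minimal sketch follows; the function name, the secret, and the user ID are made-up examples, not values from this guide.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional, Tuple


def turn_credentials(shared_secret: str, user_id: str, lifetime_s: int = 3600,
                     now: Optional[float] = None) -> Tuple[str, str]:
    """Derive time-limited TURN credentials from a shared secret."""
    expiry = int((time.time() if now is None else now) + lifetime_s)
    username = f"{expiry}:{user_id}"
    digest = hmac.new(shared_secret.encode(), username.encode(), hashlib.sha1).digest()
    password = base64.b64encode(digest).decode()
    return username, password


username, password = turn_credentials("example-secret", "@alice:example.org", now=0)
print(username)  # 3600:@alice:example.org
print(password)
```

Because the TURN server can recompute the same HMAC from the username, no per-user password has to be stored on either side.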

Run the Synapse Server

Add the code to start the Synapse server at the end of your `main.py` file:

```python
if __name__ == "__main__":
    with LoggingContext("main"):
        server.start()
        logger.info("Synapse server running")
```

Run your `main.py` script to start the server:

```bash
python main.py
```

If `server.start()` is not available in your Synapse version, the documented way to launch a homeserver is `python -m synapse.app.homeserver -c homeserver.yaml`.

Explanation of the Initialization Process

- Logging Setup: The logging configuration helps in tracking the server activities and debugging any issues.
- Configuration Loading: The `HomeServerConfig.load_config` function loads the configuration settings from `homeserver.yaml`, which includes parameters for both Synapse and WebRTC.
- Server Initialization: The `SynapseHomeServer` class is instantiated with the server name and configuration, preparing the server to handle incoming requests.
- Server Start: The `server.start()` method begins running the Synapse server, making it ready to manage user sessions and WebRTC signaling.
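As an alternative to calling Synapse's internal classes directly, the homeserver can be launched as a module with `python -m synapse.app.homeserver -c homeserver.yaml`. The sketch below only builds that command and checks that the config file exists before you run it; the helper name `homeserver_command` is an assumption for this example.

```python
import sys
from pathlib import Path
from typing import List


def homeserver_command(config_path: str = "homeserver.yaml") -> List[str]:
    """Build the argv for launching Synapse as a module with the given config."""
    return [sys.executable, "-m", "synapse.app.homeserver", "-c", config_path]


config = Path("homeserver.yaml")
if config.is_file():
    print("Run:", " ".join(homeserver_command(str(config))))
else:
    print(f"{config} not found; generate or copy a config first")
```

Using `sys.executable` keeps the command tied to the interpreter of the active virtual environment, so the Synapse installed there is the one that starts.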

With the Synapse server up and running, you have laid the groundwork for your WebRTC application. The server is now capable of handling user identities, authentication, and signaling required for real-time communication. In the next section, we will move on to wireframing all the components necessary for a fully functional Synapse WebRTC application.

By following these steps, you ensure a solid start for your project, setting the stage for implementing advanced features and functionalities.

Step 2: Wireframe All the Components

In this step, we'll wireframe the key components of your Synapse WebRTC application. Wireframing helps in visualizing the structure and interaction between different parts of your application, ensuring that all necessary components are in place before diving into detailed implementation.

Component Wireframing

Signaling Server

- The signaling server is a crucial component in WebRTC applications. It facilitates the exchange of signaling data (like session descriptions and ICE candidates) between peers to establish a direct connection.

- We’ll extend our `main.py` to include a basic signaling server setup.
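The exchange of signaling data described above can be pictured as a mailbox: each peer posts messages for the other, and the recipient collects them when it next polls. The class below is a minimal in-memory sketch under that assumption. The name `SignalingMailbox` and its methods are hypothetical and are not part of Synapse, aiohttp, or aiortc.

```python
from collections import defaultdict, deque


class SignalingMailbox:
    """In-memory relay: messages posted for a peer wait until that peer polls."""

    def __init__(self):
        # (room_id, recipient) -> queue of pending signaling messages
        self._queues = defaultdict(deque)

    def post(self, room_id, sender, recipient, message):
        """Queue a message (offer, answer, or ICE candidate) for recipient."""
        self._queues[(room_id, recipient)].append({"from": sender, **message})

    def poll(self, room_id, recipient):
        """Return and clear all messages waiting for recipient."""
        queue = self._queues.pop((room_id, recipient), deque())
        return list(queue)


mailbox = SignalingMailbox()
mailbox.post("room1", "alice", "bob", {"type": "offer", "sdp": "..."})
mailbox.post("room1", "alice", "bob", {"type": "candidate", "candidate": "..."})
print(mailbox.poll("room1", "bob"))  # both messages, in posting order
print(mailbox.poll("room1", "bob"))  # [] because the queue was drained
```

A real deployment would add authentication and expiry, but the core job of a signaling server is no more than this: getting small messages from one peer to the other.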

Media Streams

- WebRTC handles media streams (audio, video, and data) between peers. We will set up the basic structure to manage these streams.

- This involves creating functions to handle the initiation, reception, and termination of media streams.

User Interface Components

- The user interface includes elements like the join screen, controls (mute, unmute, video on/off), and participant views.

- Each component will be wireframed to understand how they interact with the Synapse server and WebRTC functionalities.

Basic Wireframing with Code Snippets

Signaling Server Setup

Add the signaling server setup to your `main.py`:

```python
from aiohttp import web

# process_offer and process_ice_candidate are defined in webrtc.py (see below)
from webrtc import process_offer, process_ice_candidate

async def handle_offer(request):
    offer = await request.json()
    # Process the offer and generate an answer (process_offer is a coroutine)
    answer = await process_offer(offer)
    return web.json_response({"sdp": answer.sdp, "type": answer.type})

async def handle_ice_candidate(request):
    candidate = await request.json()
    # Process the ICE candidate
    await process_ice_candidate(candidate)
    return web.Response(text="Candidate received")

app = web.Application()
app.router.add_post('/offer', handle_offer)
app.router.add_post('/candidate', handle_ice_candidate)

# web.run_app blocks until the server is stopped
web.run_app(app, port=8443)
```
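The offer handler passes whatever JSON it receives straight to the WebRTC layer, so it can be worth validating the payload first. The following is a small sketch of such a check; the function name `parse_session_description` and its error messages are assumptions for this example, and it relies only on the fact that an SDP body begins with the version line `v=0`.

```python
def parse_session_description(payload, expected_type="offer"):
    """Validate a decoded JSON body and return a clean {type, sdp} dict."""
    if not isinstance(payload, dict):
        raise ValueError("body must be a JSON object")
    if payload.get("type") != expected_type:
        raise ValueError(f"expected a description of type {expected_type!r}")
    sdp = payload.get("sdp")
    if not isinstance(sdp, str) or not sdp.startswith("v=0"):
        raise ValueError("sdp must be a string starting with 'v=0'")
    # Return only the two known fields and drop anything unexpected
    return {"type": expected_type, "sdp": sdp}


print(parse_session_description({"type": "offer", "sdp": "v=0\r\n", "extra": 1}))
try:
    parse_session_description({"type": "offer"})
except ValueError as exc:
    print("rejected:", exc)
```

In the handler, a `ValueError` raised here could be turned into an HTTP 400 response instead of letting a malformed request fail deeper inside the connection setup.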

Handling Media Streams

Create a module, `webrtc.py`, to handle media streams:

```python
import asyncio
from aiortc import RTCPeerConnection, RTCSessionDescription

# Track open peer connections so they can be cleaned up later
pcs = set()

async def process_offer(offer):
    pc = RTCPeerConnection()
    pcs.add(pc)

    # aiortc gathers its ICE candidates while setLocalDescription runs and
    # includes them in the answer SDP, so there is no per-candidate
    # "icecandidate" event to forward here.

    @pc.on("track")
    def on_track(track):
        # Handle the received media track (track.kind is "audio" or "video")
        pass

    offer_desc = RTCSessionDescription(sdp=offer["sdp"], type=offer["type"])
    await pc.setRemoteDescription(offer_desc)
    answer = await pc.createAnswer()
    await pc.setLocalDescription(answer)

    return pc.localDescription

async def process_ice_candidate(candidate):
    # Add the received ICE candidate to the peer connection
    pass

async def cleanup():
    # Periodically drop peer connections that have been closed
    while True:
        await asyncio.sleep(10)
        for pc in list(pcs):
            if pc.iceConnectionState == "closed":
                pcs.discard(pc)
```
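The `process_ice_candidate` stub receives the JSON a browser produces for each candidate, which carries the fields `candidate`, `sdpMid`, and `sdpMLineIndex`. The `candidate` string itself has a fixed layout (foundation, component, transport, priority, address, port, then `typ` and the candidate type). The sketch below parses that string with the standard library only; the function name and the example addresses are assumptions for illustration.

```python
def parse_ice_candidate(line: str) -> dict:
    """Parse an ICE candidate attribute string into its main fields."""
    if line.startswith("a="):
        line = line[2:]
    if not line.startswith("candidate:"):
        raise ValueError("not an ICE candidate line")
    parts = line[len("candidate:"):].split()
    if len(parts) < 8 or parts[6] != "typ":
        raise ValueError("malformed ICE candidate")
    parsed = {
        "foundation": parts[0],
        "component": int(parts[1]),
        "protocol": parts[2].lower(),
        "priority": int(parts[3]),
        "ip": parts[4],
        "port": int(parts[5]),
        "type": parts[7],  # host, srflx, prflx, or relay
    }
    # Remaining tokens are key/value extension pairs such as raddr and rport
    extras = parts[8:]
    parsed.update(zip(extras[0::2], extras[1::2]))
    return parsed


example = ("candidate:842163049 1 udp 1677729535 192.0.2.10 54321 "
           "typ srflx raddr 10.0.0.5 rport 54321")
print(parse_ice_candidate(example))
```

Logging the parsed `type` is a quick way to see whether a client is offering only `host` candidates, which often explains why a connection works on a local network but fails across the internet.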

User Interface Components

Wireframe the basic HTML structure for the join screen and controls:

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Synapse WebRTC</title>
</head>
<body>
    <div id="join-screen">
        <h2>Join a Room</h2>
        <input type="text" id="room-id" placeholder="Enter Room ID">
        <button onclick="joinRoom()">Join</button>
    </div>
    <div id="controls">
        <button onclick="toggleMute()">Mute/Unmute</button>
        <button onclick="toggleVideo()">Video On/Off</button>
    </div>
    <div id="participant-view">
        <!-- Video streams will be displayed here -->
    </div>

    <script src="webrtc.js"></script>
</body>
</html>
```

By setting up these wireframes, you establish a clear blueprint for your Synapse WebRTC application. Each component's role and interaction with the server and other parts of the application are defined, paving the way for detailed implementation in the subsequent steps.

In the next section, we will focus on implementing the join screen, which will allow users to enter a room ID and join a communication session. This step is crucial for initiating the WebRTC connection and starting the real-time communication process.

Step 3: Implement Join Screen

The join screen is the interface where users enter a room ID to join a communication session. This step involves creating the frontend elements for the join screen and connecting it to the backend to handle user inputs and initiate WebRTC connections.

Join Screen Implementation

[a] HTML for Join Screen

Create an HTML file named `index.html` with the following content:

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Synapse WebRTC</title>
    <style>
        /* Only the join screen is visible until the user joins a room */
        #controls, #participant-view {
            display: none;
        }
    </style>
</head>
<body>
    <div id="join-screen">
        <h2>Join a Room</h2>
        <input type="text" id="room-id" placeholder="Enter Room ID">
        <button onclick="joinRoom()">Join</button>
    </div>
    <div id="controls">
        <button onclick="toggleMute()">Mute/Unmute</button>
        <button onclick="toggleVideo()">Video On/Off</button>
    </div>
    <div id="participant-view">
        <!-- Video streams will be displayed here -->
    </div>

    <script src="webrtc.js"></script>
</body>
</html>
```

[b] JavaScript for Join Screen Functionality

Create a JavaScript file named `webrtc.js` and add the following code:

```javascript
let localStream;
let pc;

async function joinRoom() {
    const roomId = document.getElementById('room-id').value;
    if (!roomId) {
        alert("Please enter a room ID");
        return;
    }

    document.getElementById('join-screen').style.display = 'none';
    document.getElementById('controls').style.display = 'block';
    document.getElementById('participant-view').style.display = 'block';

    await startWebRTC();
}

async function startWebRTC() {
    pc = new RTCPeerConnection();

    pc.onicecandidate = (event) => {
        if (event.candidate) {
            sendIceCandidate(event.candidate);
        }
    };

    pc.ontrack = (event) => {
        const videoElement = document.createElement('video');
        videoElement.srcObject = event.streams[0];
        videoElement.autoplay = true;
        document.getElementById('participant-view').appendChild(videoElement);
    };

    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localStream.getTracks().forEach(track => pc.addTrack(track, localStream));

    const offer = await pc.createOffer();
    await pc.setLocalDescription(offer);

    const response = await fetch('/offer', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify({ sdp: pc.localDescription.sdp, type: pc.localDescription.type })
    });

    const answer = await response.json();
    await pc.setRemoteDescription(new RTCSessionDescription(answer));
}

async function sendIceCandidate(candidate) {
    await fetch('/candidate', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify(candidate)
    });
}
```

[c] Backend Handling of Join Requests

Ensure that your `main.py` is set up to handle signaling requests as shown previously. Here’s a quick reminder of how your signaling handlers should look:

```python
from aiohttp import web
from aiortc import RTCPeerConnection, RTCSessionDescription

# Track open peer connections
pcs = set()

async def handle_offer(request):
    offer = await request.json()
    pc = RTCPeerConnection()
    pcs.add(pc)

    # aiortc includes its own ICE candidates in the answer SDP, so no
    # per-candidate "icecandidate" handler is needed on the server side.

    @pc.on("track")
    def on_track(track):
        # Handle the received media track
        pass

    offer_desc = RTCSessionDescription(sdp=offer["sdp"], type=offer["type"])
    await pc.setRemoteDescription(offer_desc)
    answer = await pc.createAnswer()
    await pc.setLocalDescription(answer)

    return web.json_response({"sdp": pc.localDescription.sdp, "type": pc.localDescription.type})

async def handle_ice_candidate(request):
    candidate = await request.json()
    # Add the received ICE candidate to the peer connection (left as a stub)
    # A handler must return a response, otherwise aiohttp reports an error
    return web.Response(text="Candidate received")

app = web.Application()
app.router.add_post('/offer', handle_offer)
app.router.add_post('/candidate', handle_ice_candidate)

web.run_app(app, port=8443)
```

Explanation of the Join Screen Process

- HTML Structure: The `index.html` file provides the basic structure of the join screen, controls, and participant view. Initially, only the join screen is visible.
- Join Room Function: The `joinRoom` function in `webrtc.js` handles the user input, hides the join screen, and shows the controls and participant view. It then calls `startWebRTC` to initiate the WebRTC connection.
- WebRTC Initialization: The `startWebRTC` function sets up the RTCPeerConnection, handles ICE candidates, and adds the local media stream to the connection. It creates an offer, sends it to the server, and sets the remote description with the server's answer.
- Backend Signaling: The server processes the offer and returns an answer, facilitating the WebRTC connection between peers. ICE candidates are exchanged to establish a reliable connection.
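The offer/answer negotiation described above moves each peer connection through a small set of signaling states. The sketch below models those transitions as a lookup table. The state names match the values WebRTC reports in `signalingState`, while the action names and the `next_state` helper are made up for this illustration.

```python
# Allowed signaling-state transitions for a simple offer/answer exchange
TRANSITIONS = {
    ("stable", "set_local_offer"): "have-local-offer",
    ("stable", "set_remote_offer"): "have-remote-offer",
    ("have-local-offer", "set_remote_answer"): "stable",
    ("have-remote-offer", "set_local_answer"): "stable",
}


def next_state(state: str, action: str) -> str:
    """Return the state after action, or raise if the action is out of order."""
    try:
        return TRANSITIONS[(state, action)]
    except KeyError:
        raise ValueError(f"{action} is not valid in state {state}") from None


# Browser side: createOffer + setLocalDescription, then the server's answer
state = "stable"
for action in ("set_local_offer", "set_remote_answer"):
    state = next_state(state, action)
    print(action, "->", state)
```

The server side walks the mirror-image path (`set_remote_offer`, then `set_local_answer`). Applying an answer while the connection is still `stable` is rejected, which is a common cause of errors when signaling messages arrive out of order.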

By implementing the join screen, you enable users to enter a room ID and initiate a WebRTC session, forming the foundation for real-time communication. In the next section, we will implement user controls for managing the media streams during the session.

Step 4: Implement Controls

In this section, we'll add functionality to control the media streams, such as muting/unmuting the microphone and turning the video on/off. These controls are essential for providing users with a better experience during their WebRTC communication sessions.

Control Implementation

Extend HTML for Controls

Ensure that your `index.html` file has buttons for muting/unmuting the microphone and turning the video on/off:

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Synapse WebRTC</title>
    <style>
        #join-screen {
            display: block;
        }
        #controls, #participant-view {
            display: none;
        }
    </style>
</head>
<body>
    <div id="join-screen">
        <h2>Join a Room</h2>
        <input type="text" id="room-id" placeholder="Enter Room ID">
        <button onclick="joinRoom()">Join</button>
    </div>
    <div id="controls">
        <button onclick="toggleMute()">Mute/Unmute</button>
        <button onclick="toggleVideo()">Video On/Off</button>
    </div>
    <div id="participant-view">
        <!-- Video streams will be displayed here -->
    </div>

    <script src="webrtc.js"></script>
</body>
</html>
```

JavaScript for Media Controls

Update your `webrtc.js` file to include functions for muting/unmuting the microphone and turning the video on/off:

```javascript
let localStream;
let pc;

async function joinRoom() {
    const roomId = document.getElementById('room-id').value;
    if (!roomId) {
        alert("Please enter a room ID");
        return;
    }

    document.getElementById('join-screen').style.display = 'none';
    document.getElementById('controls').style.display = 'block';
    document.getElementById('participant-view').style.display = 'block';

    await startWebRTC();
}

async function startWebRTC() {
    pc = new RTCPeerConnection();

    pc.onicecandidate = (event) => {
        if (event.candidate) {
            sendIceCandidate(event.candidate);
        }
    };

    pc.ontrack = (event) => {
        const videoElement = document.createElement('video');
        videoElement.srcObject = event.streams[0];
        videoElement.autoplay = true;
        document.getElementById('participant-view').appendChild(videoElement);
    };

    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localStream.getTracks().forEach(track => pc.addTrack(track, localStream));

    const offer = await pc.createOffer();
    await pc.setLocalDescription(offer);

    const response = await fetch('/offer', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify({ sdp: pc.localDescription.sdp, type: pc.localDescription.type })
    });

    const answer = await response.json();
    await pc.setRemoteDescription(new RTCSessionDescription(answer));
}

async function sendIceCandidate(candidate) {
    await fetch('/candidate', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify(candidate)
    });
}

function toggleMute() {
    const audioTracks = localStream ? localStream.getAudioTracks() : [];
    if (audioTracks.length === 0) return; // nothing to toggle yet
    audioTracks[0].enabled = !audioTracks[0].enabled;
}

function toggleVideo() {
    const videoTracks = localStream ? localStream.getVideoTracks() : [];
    if (videoTracks.length === 0) return; // nothing to toggle yet
    videoTracks[0].enabled = !videoTracks[0].enabled;
}
```

Explanation of Control Functions

toggleMute Function

- This function toggles the enabled state of the audio track in the local media stream.

- When called, it accesses the audio tracks from the `localStream` and toggles the `enabled` property of the first audio track.

toggleVideo Function

- Similar to the `toggleMute` function, this function toggles the enabled state of the video track in the local media stream.
- It accesses the video tracks from the `localStream` and toggles the `enabled` property of the first video track.

Local Media Stream Management

- When the user joins a room, the `startWebRTC` function requests access to the user's media devices and gets the local media stream.
- The local media stream is then added to the RTCPeerConnection, allowing it to be sent to remote peers.
- The controls for muting and toggling video manipulate this local media stream directly.
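The toggle logic is small enough to model outside the browser. The sketch below is a Python stand-in for the behaviour of `toggleMute` and `toggleVideo`, not browser code: the `Track` class and `toggle_kind` function are hypothetical, and unlike the JavaScript above it flips every track of a kind rather than only the first. In a browser, a disabled audio track sends silence and a disabled video track sends black frames; the track itself keeps running.

```python
from dataclasses import dataclass


@dataclass
class Track:
    kind: str             # "audio" or "video"
    enabled: bool = True


def toggle_kind(tracks, kind):
    """Flip enabled on every track of the given kind; return the new state or None."""
    matching = [t for t in tracks if t.kind == kind]
    if not matching:
        return None       # e.g. the user has no camera
    new_state = not matching[0].enabled
    for track in matching:
        track.enabled = new_state
    return new_state


tracks = [Track("audio"), Track("video")]
print(toggle_kind(tracks, "audio"))  # False: microphone muted
print(toggle_kind(tracks, "audio"))  # True: microphone unmuted
print(toggle_kind([], "video"))      # None: nothing to toggle
```

Returning the new state makes it easy to update a button label such as "Mute" or "Unmute" from the same call.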

By implementing these controls, you provide users with the ability to manage their audio and video streams during a WebRTC session, enhancing their experience and usability of the application. In the next section, we will focus on implementing the participant view, which will handle the display of media streams from all participants in the session.

Step 5: Implement Participant View

In this section, we will implement the participant view, which is responsible for displaying the media streams of all participants in the WebRTC session. This step is crucial for providing a comprehensive view of the communication session, allowing users to see and interact with each other.

Participant View Implementation

[a] Extend HTML for Participant View

Ensure your `index.html` includes a container for the participant view:

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Synapse WebRTC</title>
    <style>
        #join-screen {
            display: block;
        }
        #controls, #participant-view {
            display: none;
        }
        video {
            width: 300px;
            height: 200px;
            margin: 10px;
            border: 1px solid #ccc;
        }
    </style>
</head>
<body>
    <div id="join-screen">
        <h2>Join a Room</h2>
        <input type="text" id="room-id" placeholder="Enter Room ID">
        <button onclick="joinRoom()">Join</button>
    </div>
    <div id="controls">
        <button onclick="toggleMute()">Mute/Unmute</button>
        <button onclick="toggleVideo()">Video On/Off</button>
    </div>
    <div id="participant-view">
        <!-- Video streams will be displayed here -->
    </div>

    <script src="webrtc.js"></script>
</body>
</html>
```

[b] JavaScript for Handling Participant Streams

Update your `webrtc.js` file to handle the display of remote participant streams:

```javascript
let localStream;
let pc;
const participantView = document.getElementById('participant-view');
const remoteVideos = new Map(); // stream id -> <video> element

async function joinRoom() {
    const roomId = document.getElementById('room-id').value;
    if (!roomId) {
        alert("Please enter a room ID");
        return;
    }

    document.getElementById('join-screen').style.display = 'none';
    document.getElementById('controls').style.display = 'block';
    participantView.style.display = 'block';

    await startWebRTC();
}

async function startWebRTC() {
    pc = new RTCPeerConnection();

    pc.onicecandidate = (event) => {
        if (event.candidate) {
            sendIceCandidate(event.candidate);
        }
    };

    pc.ontrack = (event) => {
        const stream = event.streams[0];
        // ontrack fires once per track (audio and video), so reuse the
        // element when this stream is already being displayed
        if (!stream || remoteVideos.has(stream.id)) {
            return;
        }
        const videoElement = document.createElement('video');
        videoElement.srcObject = stream;
        videoElement.autoplay = true;
        participantView.appendChild(videoElement);
        remoteVideos.set(stream.id, videoElement);
    };

    localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
    localStream.getTracks().forEach(track => pc.addTrack(track, localStream));

    const offer = await pc.createOffer();
    await pc.setLocalDescription(offer);

    const response = await fetch('/offer', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify({ sdp: pc.localDescription.sdp, type: pc.localDescription.type })
    });

    const answer = await response.json();
    await pc.setRemoteDescription(new RTCSessionDescription(answer));
}

async function sendIceCandidate(candidate) {
    await fetch('/candidate', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify(candidate)
    });
}

function toggleMute() {
    const audioTracks = localStream ? localStream.getAudioTracks() : [];
    if (audioTracks.length === 0) return; // nothing to toggle yet
    audioTracks[0].enabled = !audioTracks[0].enabled;
}

function toggleVideo() {
    const videoTracks = localStream ? localStream.getVideoTracks() : [];
    if (videoTracks.length === 0) return; // nothing to toggle yet
    videoTracks[0].enabled = !videoTracks[0].enabled;
}
```

Explanation of the Participant View Process

HTML Structure: The `index.html` file now includes a div with the id `participant-view`, which will hold video elements for each participant's media stream.

JavaScript Functions:

- joinRoom Function: Handles the user input for joining a room and initializes the WebRTC connection.
- startWebRTC Function: Sets up the RTCPeerConnection, requests the local media stream, and handles the negotiation of the WebRTC connection.
- pc.ontrack Event: When a remote track is received, this event creates a new video element, sets its source to the received media stream, and appends it to the `participant-view` div.
- sendIceCandidate Function: Sends ICE candidates to the server to help establish the WebRTC connection.
- toggleMute and toggleVideo Functions: Allow the user to mute/unmute their microphone and turn their video on/off.
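One detail of the `pc.ontrack` event is easy to miss: it fires once per track, so a participant who sends both audio and video triggers it twice for the same stream. The sketch below models, in Python rather than browser code, the bookkeeping needed to show one video element per participant. The `ParticipantView` class and its method names are hypothetical.

```python
class ParticipantView:
    """Tracks which remote streams already have a video element."""

    def __init__(self):
        self.elements = {}  # stream id -> list of track kinds attached

    def on_track(self, stream_id, kind):
        """Return True when a new element must be created for this stream."""
        is_new = stream_id not in self.elements
        self.elements.setdefault(stream_id, []).append(kind)
        return is_new

    def on_stream_ended(self, stream_id):
        """Forget a stream so its element can be removed from the page."""
        return self.elements.pop(stream_id, None) is not None


view = ParticipantView()
print(view.on_track("stream-a", "audio"))  # True: first track, create element
print(view.on_track("stream-a", "video"))  # False: same stream, reuse element
print(view.on_track("stream-b", "video"))  # True: another participant
```

Keying the view by stream ID also gives a natural place to remove an element when a participant leaves.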

By implementing the participant view, you provide a comprehensive interface for users to see and interact with all participants in the WebRTC session. This step completes the core functionality of your Synapse WebRTC application, enabling real-time, decentralized, and secure communication.

In the next section, we will focus on running your code, testing the application, and troubleshooting common issues to ensure everything works as expected.

Step 6: Run Your Code Now

With the implementation complete, it’s time to run your Synapse WebRTC application and ensure everything works as expected. This final step involves testing the application, troubleshooting any issues, and verifying that all components interact seamlessly.

Running the Application

Start the Synapse Server

- Ensure your Synapse server is configured correctly and all dependencies are installed.

- Run your `main.py` script to start the Synapse server:

```bash
python main.py
```

You should see log messages indicating that the Synapse server has started and is listening for WebRTC signaling.

Open the Application

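- Open the application in a web browser. Because `webrtc.js` posts to the relative URLs `/offer` and `/candidate`, the page needs to be served from the same origin as the signaling server (for example `http://localhost:8443/`, if you add routes for `index.html` and `webrtc.js` to the aiohttp app). Opening `index.html` directly from disk will not reach those endpoints.
- You should see the join screen where you can enter a room ID.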

Join a Room and Test

- Enter a room ID and click the "Join" button.

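- The application should transition to the participant view and ask for access to your camera and microphone. As written, `webrtc.js` only renders tracks received from the remote side; to preview your own camera, attach `localStream` to a video element as well.

- Open the application in another browser or on another device, enter the same room ID, and join the room.

- Verify that both participants can see and hear each other. Note that the server code in this guide terminates each WebRTC connection itself and its `on_track` handler is a stub, so media reaches other participants only once that handler forwards incoming tracks to the other peer connections in the room.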

Test Media Controls

- Use the "Mute/Unmute" and "Video On/Off" buttons to control your media streams.

- Ensure that the audio and video states toggle correctly and that changes are reflected in the participant view.

Troubleshooting Common Issues

No Video or Audio

- Ensure your browser has permission to access the camera and microphone.

- Check the console for any errors related to media device access.

Connection Issues

- Verify that the signaling server is running and accessible.

- Check the network settings and firewall rules to ensure that WebRTC signaling ports are open.
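A quick way to verify that the signaling server is reachable is to attempt a TCP connection to its port. The helper below is a minimal sketch using only the standard library; the name `port_is_open` is made up, and the host and port match the values used in this guide. It checks the HTTP signaling port only and says nothing about the UDP ports WebRTC uses for media.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts
        return False


print("signaling port reachable:", port_is_open("127.0.0.1", 8443))
```

Running the same check from a second machine, with the server's address in place of `127.0.0.1`, helps separate firewall problems from application problems.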

ICE Candidate Errors

- Ensure that ICE candidates are being exchanged correctly between peers.

- Check the console for any ICE candidate errors and troubleshoot accordingly.

General Debugging

- Use browser developer tools to inspect the console logs and network activity.

- Look for any errors or warnings that can provide clues to potential issues.

Conclusion

By following this guide, you have successfully built a Synapse WebRTC application that enables decentralized, secure, real-time communication. The integration of Synapse and WebRTC allows for robust and private interactions, making it a powerful solution for modern communication needs.
