Introduction: Exploring OpenAI's Speech Capabilities

OpenAI has rapidly become a leader in artificial intelligence, and its speech capabilities are no exception. This guide offers a deep dive into OpenAI's suite of speech technologies, including Whisper, Voice Engine, and the Text-to-Speech (TTS) API. We'll explore their functionalities, applications, limitations, and ethical considerations.

What is OpenAI Speech?

OpenAI Speech encompasses a range of AI models and APIs designed to understand, generate, and manipulate spoken language. These tools enable developers to integrate sophisticated speech-based functionalities into their applications.

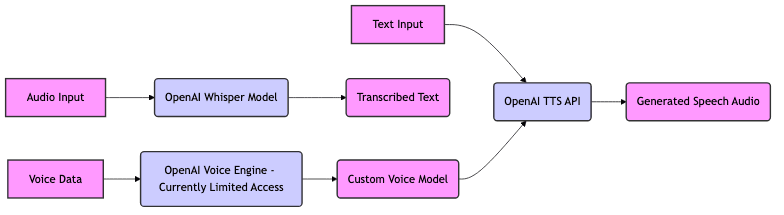

OpenAI's Speech Technology Ecosystem

OpenAI's speech ecosystem is built upon several key components:

- Whisper: A robust speech recognition model capable of transcribing audio into text, even in noisy environments.

- Voice Engine: A model for creating custom voices from a short audio sample, allowing for personalized and branded audio experiences. OpenAI has restricted it to a limited preview, citing safety concerns.

- Text-to-Speech (TTS) API: Converts written text into natural-sounding speech, offering a variety of voices and customization options.

OpenAI's Whisper Model: Revolutionizing Speech Recognition

Whisper is a neural network trained on a massive dataset of audio and text, enabling it to perform robust speech recognition across multiple languages and accents. It's a powerful tool for transcription, translation, and other speech-related tasks.

Whisper's Architecture and Training

Whisper is an encoder-decoder Transformer. Input audio is split into 30-second chunks and converted to log-Mel spectrograms, which the encoder processes; the decoder then predicts the corresponding text. The model was trained on 680,000 hours of multilingual and multitask supervised data collected from the web. It is trained jointly on multilingual speech recognition, speech translation, and language identification, and this multitask training improves robustness and zero-shot performance. For example, it can often transcribe accents that are poorly represented in standard benchmark datasets.
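To make the input format concrete, here is a minimal sketch of the fixed-size windowing that the open-source `whisper` package applies before decoding. The constants (16 kHz sample rate, 30-second window, hop of 160 samples between spectrogram frames) match the values used by that package. The `pad_or_trim` function below is a simplified pure-Python stand-in written for illustration, not the library's own implementation.

```python
SAMPLE_RATE = 16_000      # Whisper resamples all audio to 16 kHz
CHUNK_SECONDS = 30        # the model consumes fixed 30-second windows
HOP_LENGTH = 160          # samples between successive spectrogram frames

N_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS   # samples per window
N_FRAMES = N_SAMPLES // HOP_LENGTH        # spectrogram frames per window


def pad_or_trim(samples, length=N_SAMPLES):
    """Zero-pad or truncate a list of samples to exactly `length` items."""
    if len(samples) >= length:
        return samples[:length]
    return samples + [0.0] * (length - len(samples))


if __name__ == "__main__":
    five_seconds = [0.1] * (5 * SAMPLE_RATE)
    window = pad_or_trim(five_seconds)
    print(len(window), N_FRAMES)
```

Because every window has the same length, short clips are padded with silence and clips longer than 30 seconds must be processed in several windows.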

Whisper's Capabilities and Limitations

Capabilities:

- High accuracy in transcribing speech, even in noisy environments.

- Support for multiple languages.

- Ability to handle different accents and speaking styles.

- Can translate speech from other supported languages into English.

Limitations:

- Can still struggle with highly specialized vocabulary or strong accents.

- May produce occasional errors, especially in long or complex audio recordings.

- Performance can be affected by background noise and audio quality.

```python
import whisper

# Available model sizes include tiny, base, small, medium, and large
model = whisper.load_model("base")

# Load the audio and pad/trim it to the 30 seconds the model expects
audio = whisper.load_audio("audio.mp3")
audio = whisper.pad_or_trim(audio)

# Compute the log-Mel spectrogram and move it to the same device as the model
mel = whisper.log_mel_spectrogram(audio, n_mels=model.dims.n_mels).to(model.device)

# Detect the spoken language
_, probs = model.detect_language(mel)
print(f"Detected language: {max(probs, key=probs.get)}")

# Decode the audio
options = whisper.DecodingOptions()
result = whisper.decode(model, mel, options)

# Print the recognized text
print(result.text)
```

Real-world Applications of Whisper

Whisper has numerous real-world applications, including:

- Transcription services: Automatically transcribing meetings, lectures, and interviews.

- Voice assistants: Enhancing the accuracy of voice commands and responses.

- Accessibility: Providing real-time captions for videos and live events.

- Content creation: Generating subtitles for videos and podcasts.

- Medical transcription: Converting doctors' dictated notes to text.
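For the subtitle use case, the result returned by the open-source package's `model.transcribe()` includes a `segments` list whose entries carry `start`, `end`, and `text` fields. The sketch below turns such a list into SubRip (SRT) text. The helper names `format_timestamp` and `segments_to_srt` and the sample segments are illustrative assumptions, not part of Whisper.

```python
def format_timestamp(seconds):
    """Render a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def segments_to_srt(segments):
    """Convert a list of {"start", "end", "text"} dicts into SRT text."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical segments shaped like Whisper's transcribe() output
    sample = [
        {"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
        {"start": 2.5, "end": 5.0, "text": " Let's get started."},
    ]
    print(segments_to_srt(sample))
```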

OpenAI's Voice Engine: Creating Custom Voices

NOTE: At the time of writing, OpenAI has significantly limited access to the Voice Engine due to security and ethical concerns. This section describes its functionality but emphasizes the current restrictions.

Voice Engine aims to enable the creation of custom voices for various applications. While the technology shows significant promise, OpenAI is proceeding cautiously to address potential risks.

How Voice Engine Works

The Voice Engine uses text input and a short audio sample (OpenAI has described a single 15-second clip as sufficient) to generate synthetic speech that mimics the speaker's tone, accent, and style. Conceptually, the system extracts the features that characterize the speaker's voice from the sample and conditions speech generation on them. Broad access remains restricted for safety reasons.

Voice Engine's Ethical Considerations

One of the primary concerns surrounding Voice Engine is the potential for misuse. Creating synthetic voices that can impersonate real individuals raises significant ethical questions about consent, identity theft, and the spread of misinformation. OpenAI is actively researching and implementing safeguards to mitigate these risks, including watermarking and robust verification processes. Another main concern is the risk of fraud and deception, where bad actors could use synthesized voice to misrepresent or impersonate someone.

Case Studies: Potential Applications of Voice Engine

Note: Given the current restrictions, these are conceptual case studies based on the intended functionality of the Voice Engine.

- Personalized Learning: Creating custom voices for educational apps that can adapt to a student's learning style.

- Accessibility: Providing individuals with speech impairments the ability to communicate using a voice that resembles their own.

- Content Creation: Allowing authors and narrators to create audiobooks with their own unique voices.

```python
# Conceptual example (not functional due to limited access)
# Assumes a hypothetical API endpoint for Voice Engine and the necessary authentication

# import requests
#
# def generate_speech(text, voice_profile_id):
#     url = "https://api.openai.com/v1/voice_engine/generate"
#     headers = {
#         "Authorization": "Bearer YOUR_API_KEY"
#     }
#     data = {
#         "text": text,
#         "voice_profile_id": voice_profile_id
#     }
#     response = requests.post(url, headers=headers, json=data)
#     if response.status_code == 200:
#         return response.content  # Assuming the response is the audio file
#     else:
#         print(f"Error: {response.status_code} - {response.text}")
#         return None
#
# # Example usage
# audio_data = generate_speech("Hello, this is a custom voice.", "voice_profile_123")
# if audio_data:
#     with open("output.wav", "wb") as f:
#         f.write(audio_data)
#     print("Speech generated and saved to output.wav")
```

OpenAI's Text-to-Speech (TTS) API: Transforming Text into Speech

OpenAI's TTS API converts text into natural-sounding speech with a single HTTP request. It offers several built-in voices and a small set of customization options, making it suitable for applications such as narration, voice assistants, and accessibility features.

Accessing and Using the OpenAI TTS API

To use the OpenAI TTS API, you'll need an OpenAI API key. Once you have your key, you can make API requests to generate speech from text.

```bash
curl https://api.openai.com/v1/audio/speech \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "tts-1",
    "input": "The quick brown fox jumped over the lazy dog.",
    "voice": "alloy"
  }' \
  --output speech.mp3
```

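```python
from openai import OpenAI

# Replace the placeholder with your key, or omit the argument to let the
# client read the OPENAI_API_KEY environment variable
client = OpenAI(api_key="YOUR_API_KEY")

# Stream the generated audio to a file as it arrives
with client.audio.speech.with_streaming_response.create(
    model="tts-1",
    voice="alloy",
    input="Hello, this is a sample text for speech synthesis.",
) as response:
    response.stream_to_file("output.mp3")
```

Working Example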

This project integrates VideoSDK with the OpenAI Realtime API to create an AI Translator Agent. The video below walks through the setup.

<iframe
  src="https://www.youtube.com/embed/aKIkGsKc1fc?rel=0"
  style="position: absolute; top: 0; left: 0; width: 100%; height: 100%; border: 0;"
  allowfullscreen
  frameborder="0"></iframe> </div>

Available Voices and Customization Options

The OpenAI TTS API offers a selection of pre-defined voices, each with its own tone and style. As of the last update, the available models are tts-1 (optimized for low latency) and tts-1-hd (optimized for quality), with voices such as alloy, echo, fable, onyx, nova, and shimmer. You can adjust the speaking rate with the `speed` parameter (0.25 to 4.0, default 1.0) and choose the audio format with `response_format`. The API does not expose a pitch control.
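The options above map directly onto fields of the request body. As a minimal sketch, the hypothetical helper `build_speech_request` below validates the model, voice, and speaking rate before a request is sent. The model and voice sets are copied from the text above and may be incomplete for newer releases; the `speed` range of 0.25 to 4.0 is the documented range for the speech endpoint.

```python
import json

# Values taken from the article; newer models or voices may exist.
MODELS = {"tts-1", "tts-1-hd"}
VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def build_speech_request(text, voice="alloy", model="tts-1", speed=1.0):
    """Return a JSON-serializable body for POST /v1/audio/speech."""
    if model not in MODELS:
        raise ValueError(f"unknown model: {model}")
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice}")
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    return {"model": model, "voice": voice, "input": text, "speed": speed}


if __name__ == "__main__":
    body = build_speech_request("Hello there.", voice="nova", speed=1.25)
    print(json.dumps(body))
```

Validating locally gives a clear error before any network call is made, which is useful when voices or rates come from user input.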

Optimizing Audio Quality and Performance

To optimize audio quality and performance, consider the following:

- Use high-quality input text that is free of errors and grammatical mistakes.

- Experiment with different voices to find the one that best suits your application.

- Adjust the speaking rate with the `speed` parameter to achieve the desired pacing, and use tts-1-hd when audio quality matters more than latency.

- Consider pre-processing the text to improve clarity and pronunciation.
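One practical pre-processing step is splitting long text, because the speech endpoint limits `input` to 4,096 characters per request. The sketch below is one way to do that, assuming sentences end with `.`, `!`, or `?` followed by whitespace. `split_for_tts` is a hypothetical helper, not part of the OpenAI SDK.

```python
import re

MAX_INPUT_CHARS = 4096  # per-request limit on the `input` field


def split_for_tts(text, limit=MAX_INPUT_CHARS):
    """Split text into chunks of at most `limit` characters,
    breaking at sentence boundaries where possible."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        # Hard-split any single sentence that exceeds the limit on its own.
        while len(sentence) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:limit])
            sentence = sentence[limit:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    for chunk in split_for_tts("One. Two. Three.", limit=10):
        print(repr(chunk))
```

Each chunk can then be sent as a separate request and the resulting audio files concatenated in order.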

Comparing OpenAI's Speech Technology to Competitors

OpenAI's speech technology faces competition from other major players in the cloud AI space. Here's a comparison of OpenAI's offering to those from Google and Amazon:

OpenAI vs. Google Cloud Speech-to-Text

- OpenAI Whisper: Known for its robustness and ability to handle noisy environments. Its open-source nature is also a large advantage for some applications.

- Google Cloud Speech-to-Text: Offers a wide range of features and customization options, including support for real-time transcription and speaker diarization. It also integrates closely with the rest of Google Cloud, which can matter for enterprise deployments.

OpenAI vs. Amazon Transcribe

- OpenAI Whisper: Excels in general-purpose transcription and translation tasks. It can be run locally.

- Amazon Transcribe: Provides specialized features for transcribing audio in specific domains, such as healthcare and finance. It also supports custom vocabularies and custom language models, which can improve results for domain-specific terminology.

Key Differentiators and Choosing the Right Solution

The choice between OpenAI, Google Cloud, and Amazon depends on your specific needs and requirements. Consider the following factors:

- Accuracy: Evaluate the accuracy of each service on your specific audio data.

- Features: Determine which features are essential for your application, such as real-time transcription or speaker diarization.

- Pricing: Compare the pricing models of each service and choose the one that best fits your budget.

- Ease of Use: Evaluate the ease of integration and the availability of documentation and support.
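To compare accuracy on your own audio, a common metric is word error rate (WER): the word-level edit distance between a reference transcript and the service's output, divided by the number of reference words. The sketch below is a minimal implementation. It only lowercases and splits on whitespace, whereas real evaluations usually also normalize punctuation and numbers, and the function name `word_error_rate` is an illustrative choice.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the quick brown fox", "the quick brown dog"))
```

Running the same reference transcripts through each candidate service and comparing the resulting WER values gives a like-for-like accuracy measure on your data.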

The Future of OpenAI Speech: Trends and Predictions

The field of AI speech technology is rapidly evolving, and OpenAI is at the forefront of these advancements. Here are some trends and predictions for the future of OpenAI Speech:

Advancements in Natural Language Processing

Future developments in NLP will further enhance the accuracy and naturalness of speech recognition and synthesis. Improved models will better understand the context and nuances of spoken language, leading to more accurate transcriptions and more natural-sounding synthesized voices. Large language models (LLMs) are also likely to be integrated more tightly into speech recognition and synthesis pipelines, further improving quality.

Expanding Language Support and Accessibility

As AI models continue to train on more diverse data, support for more languages and dialects will become available. Extending OpenAI's models to additional languages and dialects would broaden their reach and global impact. Improved speech recognition will also lead to increased accessibility.

Addressing Ethical Concerns and Promoting Responsible AI

As AI speech technology becomes more powerful, it's crucial to address ethical concerns and promote responsible AI development. OpenAI is committed to developing and deploying AI technologies in a way that is safe, fair, and beneficial to society. This includes implementing safeguards to prevent misuse and promoting transparency and accountability.

Conclusion: The Impact of OpenAI Speech on Various Industries

OpenAI Speech has the potential to revolutionize various industries, from healthcare and education to entertainment and customer service. Its ability to understand, generate, and manipulate spoken language opens up new possibilities for automation, personalization, and accessibility. As the technology continues to evolve, we can expect to see even more innovative applications emerge in the years to come.

